Revenge Porn and Deep Fake Technology: The Latest Iteration of Online Abuse

Revenge Porn

The rise of the digital age has brought many advancements to our society, but it has also enabled new forms of online harassment and abuse. Revenge porn (also referred to as image-based sexual abuse or nonconsensual pornography) is a form of gender-based abuse in which sexually explicit photos or videos are shared without the consent of the people pictured. The prevalence of cell phones and websites built on user-generated content has made revenge porn a common phenomenon. While some legislation has been passed to meet this rising threat, technology has evolved to the point that many of these statutes no longer meet the challenges of the current environment. State legislators have left wide loopholes in their revenge porn statutes, and the rise of Artificial Intelligence (AI) has created a new brand of revenge porn that most state statutes do not address at all. Furthermore, the federal government has so far failed to make revenge porn in any form a criminal offense.

As of late 2022, forty-eight states and D.C. had enacted some form of revenge porn legislation (the two states without formal revenge porn statutes were Massachusetts and South Carolina). Some of these statutes criminalize revenge porn, while others allow victims to recover monetary damages under existing civil causes of action. While it is encouraging to see so many states act, efforts at the federal level have repeatedly stalled, often amid First Amendment concerns. Representative Jackie Speier (D-CA) crafted the Ending Nonconsensual Online User Graphic Harassment (ENOUGH) Act in 2017 to make revenge porn a federal crime, but it died in committee and expired at the end of the 115th Congress. In 2018, Senator Ben Sasse (R-NE) introduced the Malicious Deep Fake Prohibition Act, which would have criminalized the creation or distribution of all fake electronic media records that appear realistic (essentially banning deep fake technology altogether). The act expired at the end of 2018 with no cosponsors. Over the past four years, Representative Yvette D. Clarke (D-NY) has introduced the DEEP FAKES Accountability Act twice: first in 2019 (H.R. 3230, which died in committee at the end of 2020) and again in 2021 (H.R. 2395, which likewise died in committee at the beginning of 2023). In February 2023, Senators Amy Klobuchar (D-MN) and John Cornyn (R-TX) introduced the Stopping Harmful Image Exploitation and Limiting Distribution (SHIELD) Act in the Senate, where it was referred to the Judiciary Committee; the bill uses text originally introduced by Rep. Speier. Notably, however, the SHIELD Act as proposed would not criminalize the rising threat of AI-generated pornographic images.

Rise of Deep Fake Technology

What began as the sharing of consensually obtained images beyond their intended audience has evolved into more insidious and complex crimes. Hackers have started accessing private devices to steal intimate photos and then blackmail victims with the threat of sharing those photos online. And the rise of AI has created a new type of revenge porn: deep fakes.

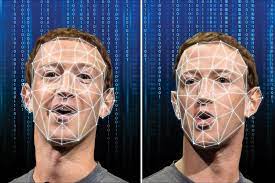

We have now entered a new phase of exploitation: AI-manufactured nude photos. Technology users can now use photos of real people to create pornographic images and videos. Two types of AI-generated images relate to the problem of revenge porn: “deep fakes” and “nudified” images. Deep fakes are created when an AI program is “trained” on a reference subject. Uploaded reference photos and videos are “swapped” into target images, creating the illusion that the reference subject is saying things or taking part in actions that they never did. U.S. intelligence officials flagged deep fake technology in their annual “worldwide threat assessment” for its ability to create convincing (but false) images or videos that could influence political campaigns. In the context of revenge porn, pictures and videos of a victim can be manipulated into a convincing pornographic image or video. AI technology can also be used to “nudify” existing images: a user uploads an image of a real person, and free applications and websites generate a convincing nude photo. While some of these apps have been banned or deleted (for example, DeepNude was shut down by its creator in 2019 after intense backlash), new apps keep appearing in their place.

This technology will undoubtedly exacerbate the prevalence and severity of revenge porn: as the line between what is real and what is generated blurs, people are at greater risk of having their images exploited. And this technology is having a disproportionate impact on women. Sensity AI tracked online deep fake videos and found that 90% to 95% of them are nonconsensual porn, and that 90% of those depict women. This form of gender-based violence was recently on display when a high-profile male video game streamer accessed deep fake videos of his female colleagues and displayed them during a live stream.

This technology also creates a new legal problem: does a nude image have to be “real” for a victim to obtain relief? In Ohio, for instance, it is a criminal offense to knowingly disseminate “an image of another person” when the person in the image is “in a state of nudity or is engaged in a sexual act” and can “be identified from the image itself or from information displayed in connection with the image and the offender supplied the identifying information.” It is currently unclear whether an AI-generated nude image constitutes “an image of another person” under the law. Cursory research did not unearth any lawsuits alleging the unauthorized use of personal images in AI-generated pornography. In fact, after becoming a victim of deep fake pornography herself, famed actress Scarlett Johansson told the Washington Post that she considered litigation “a useless pursuit, legally, mostly because the internet is a vast wormhole of darkness that eats itself.” However, in early 2023, artists filed a class-action lawsuit alleging copyright violations against companies that use Stable Diffusion. This lawsuit may signal that unwilling participants in AI-generated content could find relief in the legal system.

Some states have tackled the issue of deep fake technology head-on as it relates to revenge porn and sexual exploitation. In Virginia, the unauthorized dissemination or sale of a sexually explicit video or image of another person created “by any means whatsoever” is a Class 1 misdemeanor. The statute goes on to state that “another person” includes a person whose image was used in creating, adapting, or modifying a video or image with the intent to depict an actual person and who is recognizable as an actual person by the person’s face, likeness, or other distinguishing characteristic. But this Virginia law is not without flaws. The statute requires that the image depict a person’s genitalia, pubic area, buttocks, or breasts. Therefore, an image of a person in a compromising position, or one that is merely sexually suggestive, would likely not be covered by this statute.

Current Remedies are Insufficient

Revenge porn victims often bring tort claims, which may include invasion of privacy, intrusion upon seclusion, intentional or negligent infliction of emotional distress, defamation, and others. Specific revenge porn statutes also allow for civil recovery in some states. But revenge porn statutes have flaws. In an article, Professor Rebecca Delfino identifies three commonly cited critiques of revenge porn statutes. First, many of these statutes have a malicious intent or illicit motive requirement, which requires prosecutors to prove a particular mens rea. (921; see the aforementioned Ohio statute; Missouri criminal statute; Okla. Stat. tit. 21, § 1040.13b(B)(2)). Second, many revenge porn statutes include a “harm” requirement, which is difficult to prove and requires victims to expose even more of their private lives in a public arena. (921). Finally, the penalties are weak. (921). Moreover, even if a victim wins a case against a perpetrator, jail time for the perpetrator or a small monetary award does not give victims what they often most want: to have their images taken off the Internet. The Netflix series The Most Hated Man on the Internet shows the years-long and deeply expensive effort to remove photos from a revenge porn website. While state revenge porn laws may help victims find recourse, the process of removing images post-conviction can still be traumatizing and time-consuming.

While state and federal lawmakers consider (or stall on) deep fake legislation, the private sector may be able to provide some solutions. A tool called “Take It Down” is funded by Meta Platforms (the owner of Facebook and Instagram) and operated by the National Center for Missing and Exploited Children. The site allows individuals to anonymously create a digital “fingerprint” of a real or deepfake image. That fingerprint is then added to a database that participating tech companies (including Facebook, Instagram, TikTok, Yubo, OnlyFans, and Pornhub) have agreed to use to remove matching images from their services. This technology is not without its own limitations. If the image is on a non-participating site (currently, Twitter has not committed to the project) or is sent via an encrypted platform (like WhatsApp), the image will not be taken down.

Additionally, if the image has been cropped, edited with a filter, turned into a meme, had an emoji added, or altered in other ways, the altered image is treated as a new image and requires its own “fingerprint.”
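The limitation above follows from how exact-match fingerprinting works. The article does not say which hashing method Take It Down uses, so the following is only a hypothetical sketch that assumes a cryptographic hash (SHA-256 from Python's standard `hashlib` module). The `fingerprint`, `submit`, and `should_remove` helpers and the `takedown_db` set are invented for illustration and are not Take It Down's actual interface.

```python
import hashlib


def fingerprint(image_bytes: bytes) -> str:
    """Return a hex digest standing in for an image 'fingerprint' (assumed SHA-256)."""
    return hashlib.sha256(image_bytes).hexdigest()


# Hypothetical shared database of fingerprints; in this sketch it stores
# only digests, never the images themselves.
takedown_db: set = set()


def submit(image_bytes: bytes) -> None:
    """A victim submits an image; only its fingerprint is recorded."""
    takedown_db.add(fingerprint(image_bytes))


def should_remove(uploaded_bytes: bytes) -> bool:
    """A participating platform checks an upload against the database."""
    return fingerprint(uploaded_bytes) in takedown_db


original = b"...raw bytes of the original image..."
submit(original)

# An exact copy produces the same fingerprint, so it matches.
print(should_remove(original))

# Stand-in for a crop, filter, or added emoji: changing even one byte
# yields an unrelated fingerprint, so the edited copy does not match.
edited = original + b"\x00"
print(should_remove(edited))
```

Under this assumption, an exact copy is caught but any edit slips through until someone submits a fingerprint for the edited version. Some real systems instead use perceptual hashes designed to tolerate small edits; this sketch does not model that.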

While these are not easy problems to solve, the federal government can and should criminalize revenge porn, including AI-generated revenge porn. A federal statute would provide a stronger disincentive to create pornographic deep fakes through the threat of investigation by the FBI and prosecution by the Department of Justice. (927-928). Law professor Rebecca A. Delfino drafted the Pornographic Deepfake Criminalization Act, which would make the creation or distribution of pornographic deepfakes unlawful and allow the government to impose jail time and/or fines on defendants found guilty of the crime. (928-930). Perhaps more impactfully, the proposed act would allow courts to order the destruction of the image, compel content providers to remove the image, issue an injunction to prevent further distribution of the deep fake image, and award monetary damages to the victim. (930). The law is an imperfect tool for fighting revenge porn and AI-generated pornographic deep fakes. But to the extent that legislators can provide additional support to victims, it is their obligation to do so.

Kara Kelleher graduated from Boston University School of Law with a juris doctor in May 2023.