Imaging in Scattering Media

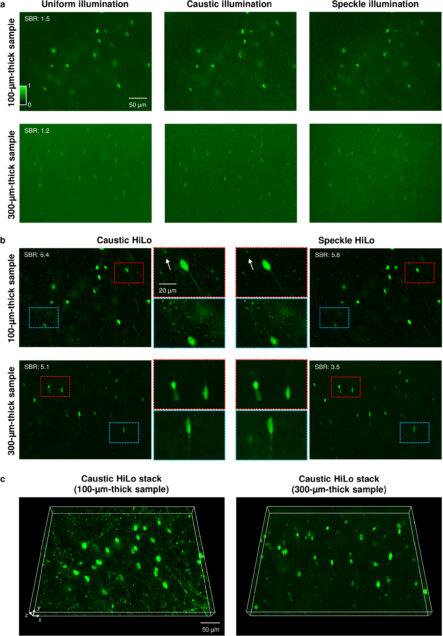

HiLo microscopy with caustic illumination

Guorong Hu, Joseph Greene, Jiabei Zhu, Qianwan Yang, Shuqi Zheng, Yunzhe Li, Jeffrey Alido, Ruipeng Guo, Jerome Mertz, and Lei Tian

Biomedical Optics Express Vol. 15, Issue 7, pp. 4101-4110 (2024).

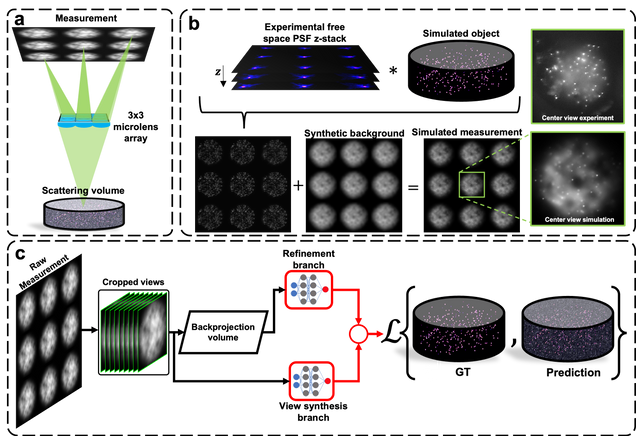

Robust single-shot 3D fluorescence imaging in scattering media with a simulator-trained neural network

J. Alido, J. Greene, Y. Xue, G. Hu, Y. Li, K. Monk, B. DeBenedicts, I. Davison, L. Tian

Optics Express Vol. 32, Issue 4, pp. 6241-6257 (2024).

⭑ GitHub Project

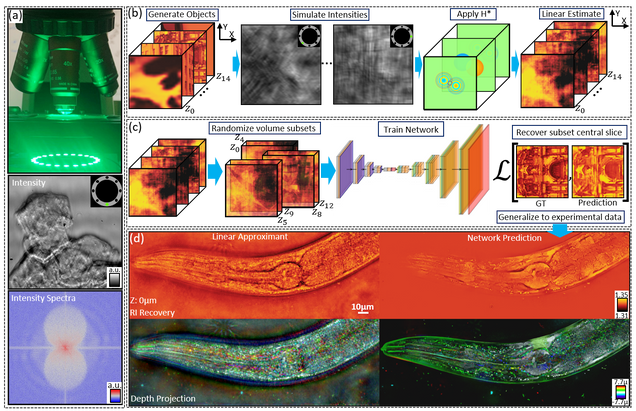

Multiple-scattering simulator-trained neural network for intensity diffraction tomography

A. Matlock, J. Zhu, L. Tian

Optics Express 31, 4094-4107 (2023)

Recovering 3D phase features of complex biological samples traditionally sacrifices computational efficiency and processing time for physical model accuracy and reconstruction quality. Here, we overcome this challenge using an approximant-guided deep learning framework in a high-speed intensity diffraction tomography system. Applying a physics model simulator-based learning strategy trained entirely on natural image datasets, we show our network can robustly reconstruct complex 3D biological samples. To achieve highly efficient training and prediction, we implement a lightweight 2D network structure that utilizes a multi-channel input for encoding the axial information. We demonstrate this framework on experimental measurements of weakly scattering epithelial buccal cells and strongly scattering C. elegans worms. We benchmark the network’s performance against a state-of-the-art multiple-scattering model-based iterative reconstruction algorithm. We highlight the network’s robustness by reconstructing dynamic samples from a living worm video. We further emphasize the network’s generalization capabilities by recovering algae samples imaged from different experimental setups. To assess the prediction quality, we develop a quantitative evaluation metric to show that our predictions are consistent with both multiple-scattering physics and experimental measurements.

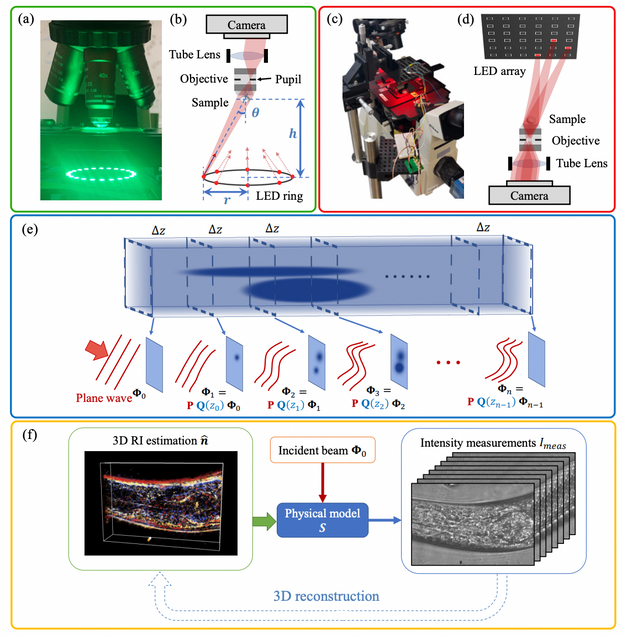

High-fidelity intensity diffraction tomography with a non-paraxial multiple-scattering model

Jiabei Zhu, Hao Wang, Lei Tian

Optics Express Vol. 30, Issue 18, pp. 32808-32821 (2022).

We propose a novel intensity diffraction tomography (IDT) reconstruction algorithm based on the split-step non-paraxial (SSNP) model for recovering the 3D refractive index (RI) distribution of multiple-scattering biological samples. High-quality IDT reconstruction requires high-angle illumination to encode both low- and high-spatial-frequency information of the 3D biological sample. We show that our SSNP model computes multiple scattering from high-angle illumination more accurately than paraxial approximation-based multiple-scattering models. We apply this SSNP model to both sequential and multiplexed IDT techniques. We develop a unified reconstruction algorithm for both IDT modalities that is highly computationally efficient and is implemented in a modular automatic differentiation framework. We demonstrate the capability of our reconstruction algorithm on both weakly scattering buccal epithelial cells and strongly scattering live

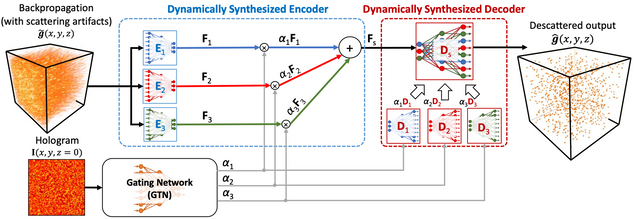

Adaptive 3D descattering with a dynamic synthesis network

Waleed Tahir, Hao Wang, Lei Tian

Light: Science & Applications 11, 42 (2022).

Deep learning has been broadly applied to imaging in scattering applications. A common framework is to train a “descattering” neural network for image recovery by removing scattering artifacts. To achieve the best results on a broad spectrum of scattering conditions, individual “expert” networks have to be trained for each condition. However, the performance of an expert sharply degrades when the scattering level at test time differs from that used in training. An alternative approach is to train a “generalist” network using data from a variety of scattering conditions. However, the generalist typically performs worse than an expert trained for each specific scattering condition. Here, we develop a drastically different approach, termed dynamic synthesis network (DSN), that can dynamically adjust the model weights and adapt to different scattering conditions. The adaptability is achieved by a novel architecture that dynamically synthesizes a network by blending multiple experts using a gating network. Notably, our DSN adaptively removes scattering artifacts across a continuum of scattering conditions regardless of whether the condition was used in training, and consistently outperforms the generalist. By training the DSN entirely on a multiple-scattering simulator, we experimentally demonstrate the network’s adaptability and robustness for 3D descattering in holographic 3D particle imaging. We expect the same concept can be adapted to many other imaging applications, such as denoising and imaging through scattering media. Broadly, our dynamic synthesis framework opens up a new paradigm for designing highly adaptive deep learning and computational imaging techniques.
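The expert-blending idea can be illustrated with a minimal NumPy sketch. It assumes, for illustration only, that each expert contributes one convolution kernel and that the gating network outputs one logit per expert, which a softmax turns into blending weights. The function names and toy arrays are hypothetical and are not taken from the DSN code.

```python
import numpy as np

def softmax(z):
    # Numerically stable softmax over the last axis
    z = z - np.max(z, axis=-1, keepdims=True)
    e = np.exp(z)
    return e / np.sum(e, axis=-1, keepdims=True)

def synthesize_kernel(expert_kernels, gate_logits):
    """Blend K expert kernels into one kernel using gating weights (hypothetical helper).

    expert_kernels: array of shape (K, kh, kw), one kernel per expert
    gate_logits:    array of shape (K,), stand-in for a gating-network output
    """
    weights = softmax(gate_logits)  # non-negative weights that sum to 1
    # Contract the expert axis: sum_k weights[k] * expert_kernels[k] -> (kh, kw)
    return np.tensordot(weights, expert_kernels, axes=1), weights

rng = np.random.default_rng(0)
experts = rng.standard_normal((3, 3, 3))  # three toy 3x3 "expert" kernels
logits = np.array([2.0, 0.5, -1.0])       # assumed gating-network output
kernel, w = synthesize_kernel(experts, logits)
```

Pushing one logit far above the others recovers that expert's kernel, while intermediate logits produce a weighted blend, so a single set of experts can cover conditions in between the ones they were trained on.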

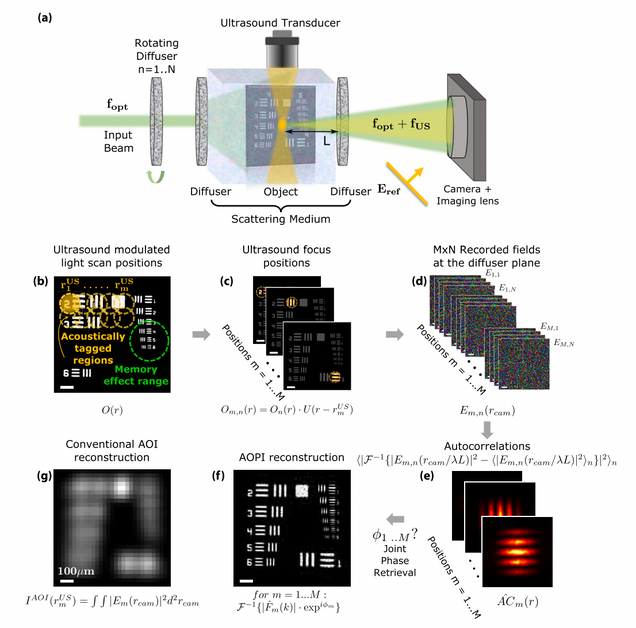

Acousto-optic ptychography

M. Rosenfeld, G. Weinberg, D. Doktofsky, Y. Li, L. Tian, O. Katz

Optica 8, 936-943 (2021).

Acousto-optic imaging (AOI) enables optical-contrast imaging deep inside scattering samples via localized ultrasound modulation of scattered light. While AOI allows optical investigations at depth, its imaging resolution is inherently limited by the ultrasound wavelength, prohibiting microscopic investigations. Here, we propose a computational imaging approach that allows optical diffraction-limited imaging using a conventional AOI system. We achieve this by extracting diffraction-limited imaging information from speckle correlations in the conventionally detected ultrasound-modulated scattered-light fields. Specifically, we identify that since “memory-effect” speckle correlations allow estimation of the Fourier magnitude of the field inside the ultrasound focus, scanning the ultrasound focus enables robust diffraction-limited reconstruction of extended objects using ptychography (i.e., we exploit the ultrasound focus as the scanned spatial-gate probe required for ptychographic phase retrieval). Moreover, we exploit the short speckle decorrelation time in dynamic media, which is usually considered a hurdle for wavefront-shaping-based approaches, for improved ptychographic reconstruction. We experimentally demonstrate noninvasive imaging of targets that extend well beyond the memory-effect range, with a 40-times resolution improvement over conventional AOI.
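The memory-effect step can be sketched in a few lines of NumPy. By the Wiener-Khinchin theorem, the autocorrelation of an object carries only its Fourier magnitude: the phase is lost, and the autocorrelation is unchanged when the object shifts, which is why a phase-retrieval step such as ptychography is needed. The sketch assumes an ideal, noise-free autocorrelation and uses hypothetical function names; it does not model the ultrasound modulation or the speckle itself.

```python
import numpy as np

def autocorrelation(img):
    """Circular autocorrelation via Wiener-Khinchin: AC = IFFT(|FFT(img)|^2)."""
    F = np.fft.fft2(img)
    return np.real(np.fft.ifft2(np.abs(F) ** 2))

def fourier_magnitude(ac):
    """Recover |FFT(img)| from its autocorrelation; the Fourier phase is not recoverable."""
    power = np.real(np.fft.fft2(ac))
    return np.sqrt(np.clip(power, 0.0, None))  # clip tiny negative round-off values

rng = np.random.default_rng(1)
obj = np.zeros((32, 32))
obj[10:14, 8:20] = rng.random((4, 12))         # toy object inside the probe region
shifted = np.roll(obj, (5, -3), axis=(0, 1))   # same object, displaced

ac = autocorrelation(obj)
mag = fourier_magnitude(ac)
```

Because `autocorrelation(shifted)` equals `ac`, the autocorrelation alone cannot tell where the object sits; a scanned, localized probe adds the overlapping constraints that ptychographic phase retrieval uses to restore the missing phase.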

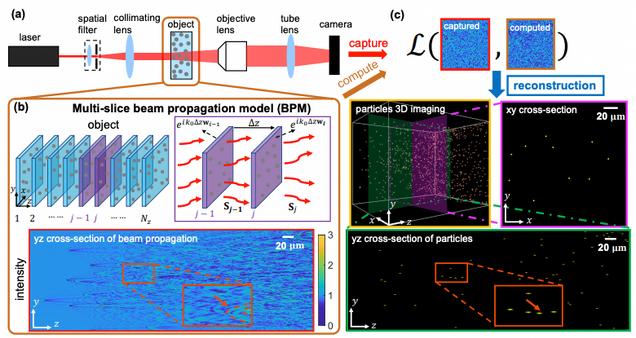

Large-scale holographic particle 3D imaging with the beam propagation model

Hao Wang, Waleed Tahir, Jiabei Zhu, Lei Tian

Opt. Express 29, 17159-17172 (2021).

We develop a novel algorithm for large-scale holographic reconstruction of 3D particle fields. Our method is based on a multiple-scattering beam propagation method (BPM) combined with sparse regularization that enables recovering dense 3D particles of high refractive index contrast from a single hologram. We show that the BPM-computed hologram generates intensity statistics that closely match the experimental measurements and provides up to 9× higher accuracy than the single-scattering model. To solve the inverse problem, we devise a computationally efficient algorithm that reduces the computation time by two orders of magnitude compared to the state-of-the-art multiple-scattering-based technique. We demonstrate superior reconstruction accuracy in both simulations and experiments under different scattering strengths. We show that the BPM reconstruction significantly outperforms the single-scattering method, particularly at large imaging depths and high particle densities.
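The multi-slice BPM forward model alternates a thin phase screen with angular-spectrum propagation to the next slice. This is a minimal scalar-field sketch under assumed conditions (square grid, consistent length units, evanescent components discarded, hypothetical function and parameter names); it omits the sparse-regularized inverse solver and is not the authors' implementation.

```python
import numpy as np

def angular_spectrum_kernel(n_pix, dx, wavelength, n_medium, dz):
    """Transfer function exp(i*kz*dz) for one propagation step; evanescent waves are zeroed."""
    k = 2 * np.pi * n_medium / wavelength
    f = np.fft.fftfreq(n_pix, d=dx)
    kx, ky = np.meshgrid(2 * np.pi * f, 2 * np.pi * f, indexing="ij")
    kz_sq = k**2 - kx**2 - ky**2
    propagating = kz_sq > 0
    kz = np.sqrt(np.where(propagating, kz_sq, 0.0))
    return np.where(propagating, np.exp(1j * kz * dz), 0.0)

def bpm_forward(field, delta_n, dx, dz, wavelength, n_medium):
    """Multi-slice BPM: phase screen per slice, then diffraction over dz.

    field:   complex incident field, shape (N, N)
    delta_n: refractive-index contrast per slice, shape (n_slices, N, N)
    """
    k0 = 2 * np.pi / wavelength
    H = angular_spectrum_kernel(field.shape[0], dx, wavelength, n_medium, dz)
    for dn in delta_n:
        field = field * np.exp(1j * k0 * dn * dz)     # thin-slice phase modulation
        field = np.fft.ifft2(np.fft.fft2(field) * H)  # propagate to the next slice
    return field

N = 64
dx, wavelength, n_medium, dz = 0.5, 0.5, 1.33, 1.0    # micrometers (assumed units)
rng = np.random.default_rng(2)
delta_n = 0.02 * rng.random((10, N, N))               # toy weakly scattering volume
plane_wave = np.ones((N, N), dtype=complex)
out = bpm_forward(plane_wave, delta_n, dx, dz, wavelength, n_medium)
hologram = np.abs(out) ** 2                           # simulated in-line hologram
empty = bpm_forward(plane_wave, np.zeros((10, N, N)), dx, dz, wavelength, n_medium)
```

With these parameters every spatial frequency on the grid is propagating, so each step is unitary and the total power in `hologram` equals that of the incident plane wave, a convenient sanity check before adding an inverse solver.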

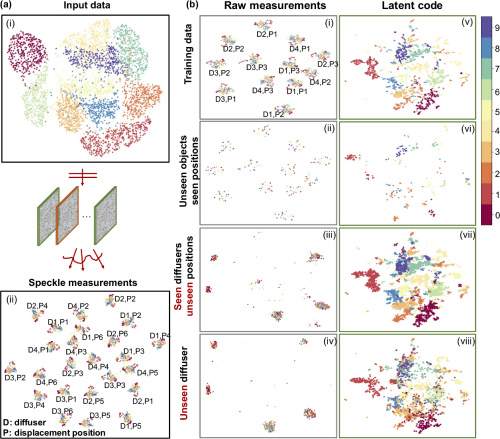

Displacement-agnostic coherent imaging through scatter with an interpretable deep neural network

Y. Li, S. Cheng, Y. Xue, L. Tian

Optics Express Vol. 29, Issue 2, pp. 2244-2257 (2021).

Coherent imaging through scatter is a challenging task. Both model-based and data-driven approaches have been explored to solve the inverse scattering problem. In our previous work, we have shown that a deep learning approach can make high-quality and highly generalizable predictions through unseen diffusers. Here, we propose a new deep neural network model that is agnostic to a broader class of perturbations including scatterer change, displacements, and system defocus up to 10× depth of field. In addition, we develop a new analysis framework for interpreting the mechanism of our deep learning model and visualizing its generalizability based on an unsupervised dimension reduction technique. We show that our model can unmix the scattering-specific information and extract the object-specific information and achieve generalization under different scattering conditions. Our work paves the way to a robust and interpretable deep learning approach to imaging through scattering media.

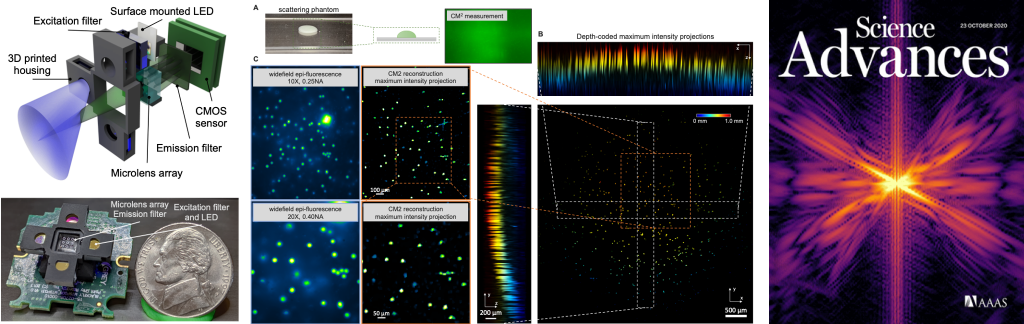

Single-Shot 3D Widefield Fluorescence Imaging with a Computational Miniature Mesoscope

Yujia Xue, Ian G. Davison, David A. Boas, Lei Tian

Science Advances, eabb7508 (21 Oct. 2020).

⭑ On the Cover

⭑ In the news:

– BU ENG news: Brain Imaging Scaled Down

Fluorescence microscopes are indispensable to biology and neuroscience. The need for recording in freely behaving animals has further driven the development of miniaturized microscopes (miniscopes). However, conventional microscopes/miniscopes are inherently constrained by their limited space-bandwidth product, shallow depth of field (DOF), and inability to resolve three-dimensional (3D) distributed emitters. Here, we present a Computational Miniature Mesoscope (CM2) that overcomes these bottlenecks and enables single-shot 3D imaging across an 8 mm by 7 mm field of view and 2.5-mm DOF, achieving 7-μm lateral resolution and better than 200-μm axial resolution. The CM2 features a compact lightweight design that integrates a microlens array for imaging and a light-emitting diode array for excitation. Its expanded imaging capability is enabled by computational imaging that augments the optics with algorithms. We experimentally validate the mesoscopic imaging capability on 3D fluorescent samples. We further quantify the effects of scattering and background fluorescence in phantom experiments.

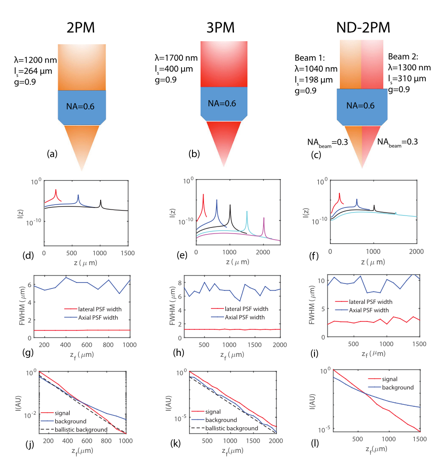

Comparing the fundamental imaging depth limit of two-photon, three-photon, and non-degenerate two-photon microscopy

Xiaojun Cheng, Sanaz Sadegh, Sharvari Zilpelwar, Anna Devor, Lei Tian, and David A. Boas

Opt. Lett. Vol. 45, Issue 10, pp. 2934-2937 (2020).

We have systematically characterized the degradation of imaging quality with depth in deep brain multi-photon microscopy, utilizing our recently developed numerical model that computes wave propagation in scattering media. The signal-to-background ratio (SBR) and the resolution determined by the width of the point spread function are obtained as functions of depth. We compare the imaging quality of two-photon (2PM), three-photon (3PM), and non-degenerate two-photon microscopy (ND-2PM) for mouse brain imaging. We show that the imaging depths of 2PM and ND-2PM are fundamentally limited by the SBR, while the SBR remains approximately invariant with imaging depth for 3PM. Instead, the imaging depth of 3PM is limited by the degradation of the resolution, if there is sufficient laser power to maintain the signal level at large depth. The roles of the concentration of dye molecules, the numerical aperture of the input light, the anisotropy factor g, the noise level, the input laser power, and the effect of temporal broadening are also discussed.

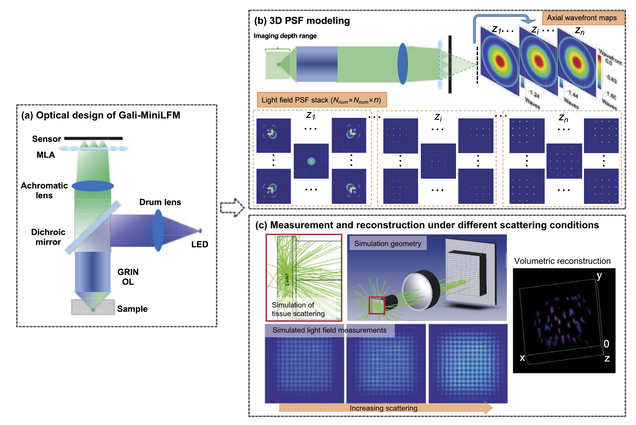

Design of a high-resolution light field miniscope for volumetric imaging in scattering tissue

Yanqin Chen, Bo Xiong, Yujia Xue, Xin Jin, Joseph Greene, and Lei Tian

Biomedical Optics Express 11, pp. 1662-1678 (2020).

Integrating light field microscopy techniques with existing miniscope architectures has allowed for volumetric imaging of targeted brain regions in freely moving animals. However, the current design of light field miniscopes is limited by non-uniform resolution and long imaging path length. In an effort to overcome these limitations, this paper proposes an optimized Galilean-mode light field miniscope (Gali-MiniLFM), which achieves a more consistent resolution and a significantly shorter imaging path than its conventional counterparts. In addition, this paper provides a novel framework that incorporates the anticipated aberrations of the proposed Gali-MiniLFM into the point spread function (PSF) modeling. This more accurate PSF model can then be used in 3D reconstruction algorithms to further improve the resolution of the platform. Volumetric imaging in the brain necessitates the consideration of the effects of scattering. We conduct Monte Carlo simulations to demonstrate the robustness of the proposed Gali-MiniLFM for volumetric imaging in scattering tissue.

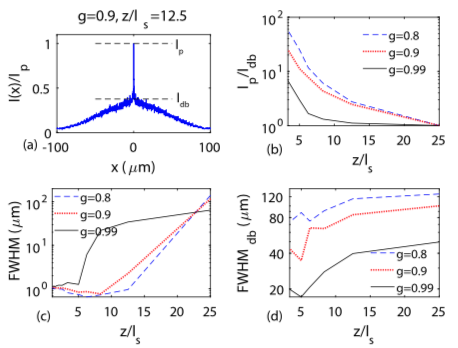

Development of a beam propagation method to simulate the point spread function degradation in scattering media

Xiaojun Cheng, Yunzhe Li, Jerome Mertz, Sava Sakadžić, Anna Devor, David A. Boas, Lei Tian

Opt. Lett. 44, 4989-4992 (2019).

Scattering is one of the main issues that limit the imaging depth in deep tissue optical imaging. To characterize the role of scattering, we have developed a forward model based on the beam propagation method and established the link between the macroscopic optical properties of the media and the statistical parameters of the phase masks applied to the wavefront. Using this model, we have analyzed the degradation of the point-spread function of the illumination beam in the transition regime from ballistic to diffusive light transport. Our method provides a wave-optic simulation toolkit to analyze the effects of scattering on image quality degradation in scanning microscopy.

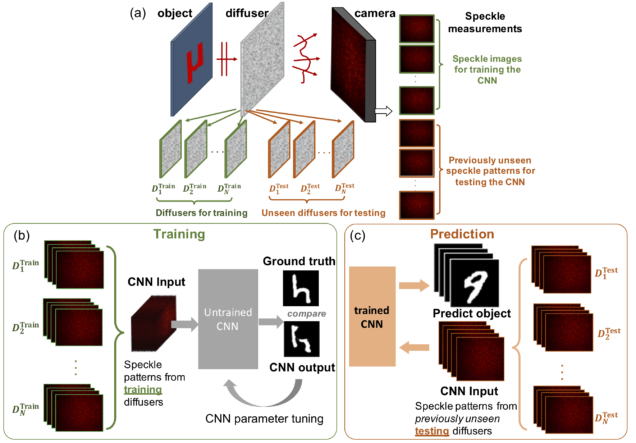

Deep speckle correlation: a deep learning approach towards scalable imaging through scattering media

Yunzhe Li, Yujia Xue, Lei Tian

Optica 5, 1181-1190 (2018).

⭑ Top 5 most cited articles in Optica published in 2018 (Source: Google Scholar)

Imaging through scattering is an important yet challenging problem. Tremendous progress has been made by exploiting the deterministic input–output “transmission matrix” for a fixed medium. However, this “one-to-one” mapping is highly susceptible to speckle decorrelations: small perturbations to the scattering medium lead to model errors and severe degradation of the imaging performance. Our goal here is to develop a new framework that is highly scalable to both medium perturbations and measurement requirements. To do so, we propose a statistical “one-to-all” deep learning (DL) technique that encapsulates a wide range of statistical variations for the model to be resilient to speckle decorrelations. Specifically, we develop a convolutional neural network (CNN) that is able to learn the statistical information contained in the speckle intensity patterns captured on a set of diffusers having the same macroscopic parameter. We then show for the first time, to the best of our knowledge, that the trained CNN is able to generalize and make high-quality object predictions through an entirely different set of diffusers of the same class. Our work paves the way to a highly scalable DL approach for imaging through scattering media.

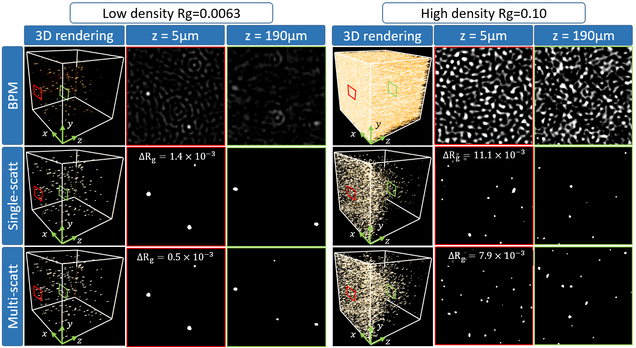

Holographic particle-localization under multiple scattering

Waleed Tahir, Ulugbek S. Kamilov, Lei Tian

Advanced Photonics, 1(3), 036003 (2019).

We introduce a computational framework that incorporates multiple scattering for large-scale three-dimensional (3-D) particle localization using single-shot in-line holography. Traditional holographic techniques rely on single-scattering models that become inaccurate under high particle densities and large refractive index contrasts. Existing multiple scattering solvers become computationally prohibitive for large-scale problems, which comprise millions of voxels within the scattering volume. Our approach overcomes the computational bottleneck by slicewise computation of multiple scattering under an efficient recursive framework. In the forward model, each recursion estimates the next higher-order multiple scattered field among the object slices. In the inverse model, each order of scattering is recursively estimated by a nonlinear optimization procedure. This nonlinear inverse model is further supplemented by a sparsity promoting procedure that is particularly effective in localizing 3-D distributed particles. We show that our multiple-scattering model leads to significant improvement in the quality of 3-D localization compared to traditional methods based on single scattering approximation. Our experiments demonstrate robust inverse multiple scattering, allowing reconstruction of 100 million voxels from a single 1-megapixel hologram with a sparsity prior. The performance bound of our approach is quantified in simulation and validated experimentally. Our work promises utilization of multiple scattering for versatile large-scale applications.
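The effect of a sparsity prior can be illustrated with iterative soft-thresholding (ISTA) on a toy problem. As a simplifying assumption, this sketch replaces the recursive multiple-scattering model with a random linear operator `A`, so it shows only how an l1 penalty recovers a few "particles" from fewer measurements than unknowns. All names and sizes are hypothetical.

```python
import numpy as np

def soft_threshold(x, tau):
    # Proximal operator of tau * ||x||_1
    return np.sign(x) * np.maximum(np.abs(x) - tau, 0.0)

def ista(A, y, lam, n_iter=500):
    """Minimize 0.5*||A x - y||^2 + lam*||x||_1 by iterative soft-thresholding."""
    step = 1.0 / np.linalg.norm(A, 2) ** 2  # 1/L, L = Lipschitz constant of the gradient
    x = np.zeros(A.shape[1])
    for _ in range(n_iter):
        grad = A.T @ (A @ x - y)            # gradient of the data-fidelity term
        x = soft_threshold(x - step * grad, step * lam)
    return x

rng = np.random.default_rng(0)
n_meas, n_vox = 60, 200                     # fewer measurements than unknowns
A = rng.standard_normal((n_meas, n_vox)) / np.sqrt(n_meas)
x_true = np.zeros(n_vox)
x_true[[15, 80, 150]] = [1.0, -0.7, 0.5]    # three toy "particles"
y = A @ x_true
x_hat = ista(A, y, lam=0.01, n_iter=3000)
```

In a nonlinear setting the same proximal step can follow a gradient computed through the scattering model instead of `A.T @ (A @ x - y)`; the soft-threshold is what drives most voxels to exactly zero.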

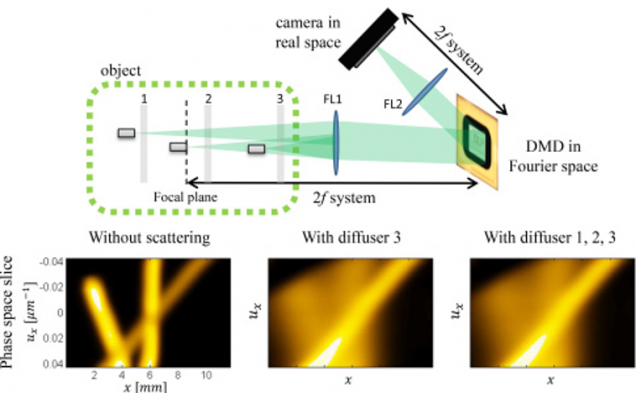

3D imaging in volumetric scattering media using phase-space measurements

H. Liu, E. Jonas, L. Tian, J. Zhong, B. Recht, L. Waller

Opt. Express 23, 14461-14471 (2015).

We demonstrate the use of phase-space imaging for 3D localization of multiple point sources inside scattering material. The effect of scattering is to spread angular (spatial-frequency) information, which can be measured by phase-space imaging. We derive a multi-slice forward model for homogeneous volumetric scattering, then develop a reconstruction algorithm that exploits sparsity to further constrain the problem. By using 4D measurements for 3D reconstruction, the dimensionality mismatch provides significant robustness to multiple scattering, with either static or dynamic diffusers. Experimentally, our high-resolution 4D phase-space data are collected by a spectrogram setup, with results successfully recovering the 3D positions of multiple LEDs embedded in turbid scattering media.
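A spectrogram records a phase-space (position, spatial-frequency) map by Fourier transforming the field seen through a sliding aperture. This 1D NumPy sketch assumes a Gaussian aperture and a circular (wrap-around) scan, both hypothetical choices made for simplicity; the 4D measurement in the paper extends the same idea to two spatial and two angular dimensions.

```python
import numpy as np

def spectrogram(field, window):
    """Phase-space map |FFT(field * shifted window)|^2 (hypothetical helper).

    field:  complex 1D field of length N
    window: real 1D aperture of length N, centered at index 0 (wraps around)
    Returns an array of shape (N positions, N spatial frequencies).
    """
    n = field.size
    out = np.empty((n, n))
    for x0 in range(n):
        w = np.roll(window, x0)                      # aperture centered at x0
        out[x0] = np.abs(np.fft.fft(field * w)) ** 2
    return out

N = 64
idx = np.arange(N)
dist = np.minimum(idx, N - idx)                      # circular distance from index 0
window = np.exp(-dist**2 / (2 * 4.0**2))             # assumed Gaussian aperture, sigma = 4 px
k0 = 5                                               # tilt as a DFT frequency index
tilted = np.exp(2j * np.pi * k0 * idx / N)           # tilted plane wave
S = spectrogram(tilted, window)
```

For a tilted plane wave, every aperture position peaks at the same frequency index `k0`: the map is uniform along position and localized along angle, which is how phase-space data separate where light is from which direction it travels.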

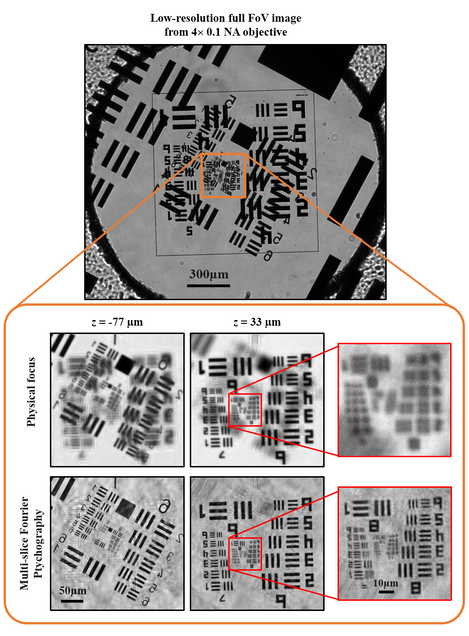

3D intensity and phase imaging from light field measurements in an LED array microscope

Lei Tian, Laura Waller

Optica 2, 104-111 (2015).

⭑ One of the 15 most cited articles in Optica published in 2015 (Source: OSA, 2019)

Realizing high resolution across large volumes is challenging for 3D imaging techniques with high-speed acquisition. Here, we describe a new method for 3D intensity and phase recovery from 4D light field measurements, achieving enhanced resolution via Fourier ptychography. Starting from geometric optics light field refocusing, we incorporate phase retrieval and correct diffraction artifacts. Further, we incorporate dark-field images to achieve lateral resolution beyond the diffraction limit of the objective (5× larger NA) and axial resolution better than the depth of field, using a low-magnification objective with a large field of view. Our iterative reconstruction algorithm uses a multi-slice coherent model to estimate the 3D complex transmittance function of the sample at multiple depths, without any weak or single-scattering approximations. Data are captured by an LED array microscope with computational illumination, which enables rapid scanning of angles for fast acquisition. We demonstrate the method with thick biological samples in a modified commercial microscope, indicating the technique’s versatility for a wide range of applications.
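The geometric light field refocusing that the method starts from can be sketched as shift-and-add: each image captured under a different illumination angle is shifted laterally by z·tanθ and the results are averaged. This NumPy sketch uses whole-pixel shifts and hypothetical parameter names; it omits the phase retrieval, diffraction correction, and dark-field Fourier-ptychographic steps described in the abstract.

```python
import numpy as np

def refocus(sub_images, angles, z, dx):
    """Geometric shift-and-add refocusing (hypothetical helper).

    sub_images: array (n_angles, H, W), one image per illumination angle
    angles:     list of (tan_theta_x, tan_theta_y), one pair per image
    z:          refocus depth, in the same length unit as dx
    dx:         pixel size in the sample plane
    Shifts are rounded to whole pixels for simplicity.
    """
    out = np.zeros(sub_images.shape[1:])
    for img, (tx, ty) in zip(sub_images, angles):
        shift = (int(round(-z * ty / dx)), int(round(-z * tx / dx)))
        out += np.roll(img, shift, axis=(0, 1))   # undo the depth-dependent lateral shift
    return out / len(sub_images)

tans = [-0.2, 0.0, 0.2]
angles = [(tx, ty) for tx in tans for ty in tans]  # 3 x 3 illumination angles
H = W = 33
c = H // 2
z0, dx = 10.0, 1.0
stack = np.zeros((len(angles), H, W))
for i, (tx, ty) in enumerate(angles):
    # A point at depth z0 appears displaced by z0 * tan(theta) under each angle
    stack[i, c + int(round(z0 * ty / dx)), c + int(round(z0 * tx / dx))] = 1.0
in_focus = refocus(stack, angles, z0, dx)
defocused = refocus(stack, angles, 0.0, dx)
```

Refocusing at the correct depth stacks all nine copies of the point onto one pixel, while refocusing at the wrong depth leaves them spread out; the abstract's multi-slice coherent model replaces this purely geometric picture with diffraction-aware phase and intensity recovery.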