Computational imaging with metasurfaces

Chiral phase-imaging meta-sensors

Ahmet M. Erturan, Jianing Liu, Maliheh A. Roueini, Nicolas Malamug, Lei Tian & Roberto Paiella

Nanophotonics (2025).

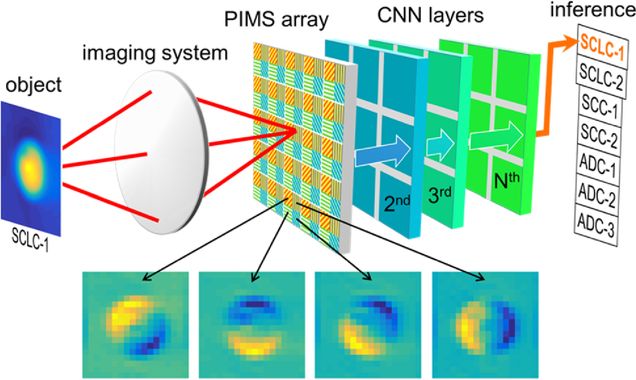

Cell classification with phase-imaging meta-sensors

Haochuan Hu, Jianing Liu, Lei Tian, Janusz Konrad, and Roberto Paiella

Optics Letters Vol. 49, Issue 20, pp. 5759-5762 (2024).

The development of photonic technologies for machine learning is a promising avenue toward reducing the computational cost of image classification tasks. Here we investigate a convolutional neural network (CNN) where the first layer is replaced by an image sensor array consisting of recently developed angle-sensitive metasurface photodetectors. This array can visualize transparent phase objects directly by recording multiple anisotropic edge-enhanced images, analogous to the feature maps computed by the first convolutional layer of a CNN. The resulting classification performance is evaluated for a realistic task (the identification of transparent cancer cells from seven different lines) through computational-imaging simulations based on the measured angular characteristics of prototype devices. Our results show that this hybrid optoelectronic network can provide accurate classification (>90%) similar to its fully digital baseline CNN but with an order-of-magnitude reduction in the number of calculations.
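To make the analogy in the abstract concrete, the minimal sketch below computes digitally the kind of anisotropic edge-enhanced feature maps that a first convolutional layer would produce, and that the sensor array is described as recording optically. The 3×3 difference kernels, the function names, and the test image are illustrative assumptions; they are not the measured device responses or the network used in the paper.

```python
import numpy as np

def directional_kernels():
    """Hypothetical 3x3 difference kernels along x, y, and the two diagonals."""
    kx = np.array([[0, 0, 0], [-1, 0, 1], [0, 0, 0]], dtype=float) / 2
    ky = kx.T
    kd1 = np.array([[-1, 0, 0], [0, 0, 0], [0, 0, 1]], dtype=float) / 2
    kd2 = np.array([[0, 0, -1], [0, 0, 0], [1, 0, 0]], dtype=float) / 2
    return np.stack([kx, ky, kd1, kd2])

def feature_maps(image, kernels):
    """'Valid' cross-correlation of one image with each kernel (CNN convention)."""
    k = kernels.shape[-1]
    h, w = image.shape
    out = np.zeros((kernels.shape[0], h - k + 1, w - k + 1))
    for n, kern in enumerate(kernels):
        for i in range(k):
            for j in range(k):
                # Shifted copy of the image weighted by one kernel tap
                out[n] += kern[i, j] * image[i:i + h - k + 1, j:j + w - k + 1]
    return out

# Toy object: a vertical step edge
image = np.zeros((16, 16))
image[:, 8:] = 1.0
maps = feature_maps(image, directional_kernels())
print(maps.shape)
```

In the hybrid network described above, maps of this kind would come directly from the sensor readout, so the multiply-accumulate operations in `feature_maps` would not need to be executed digitally.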

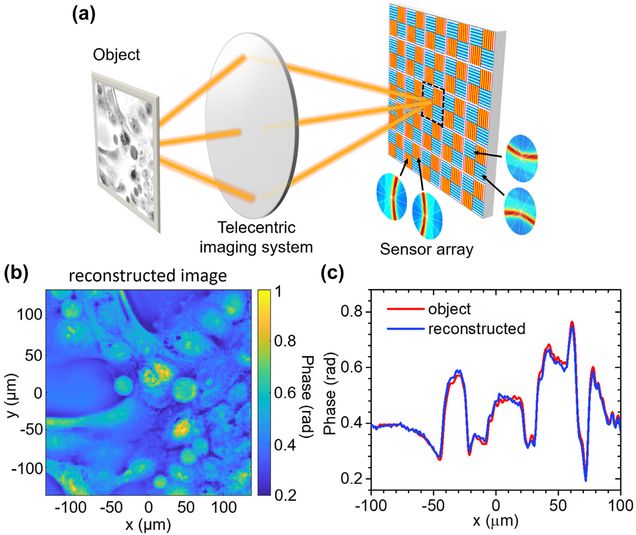

Asymmetric metasurface photodetectors for single-shot quantitative phase imaging

Jianing Liu, Hao Wang, Yuyu Li, Lei Tian, Roberto Paiella

Nanophotonics (2023).

The visualization of pure phase objects by wavefront sensing has important applications ranging from surface profiling to biomedical microscopy, and generally requires bulky and complicated setups involving optical spatial filtering, interferometry, or structured illumination. Here we introduce a new type of image sensor that is uniquely sensitive to the local direction of light propagation, based on standard photodetectors coated with a specially designed plasmonic metasurface that creates an asymmetric dependence of responsivity on angle of incidence around the surface normal. The metasurface design, fabrication, and angle-sensitive operation are demonstrated using a simple photoconductive detector platform. The measurement results, combined with computational imaging calculations, are then used to show that a standard camera or microscope based on these metasurface pixels can directly visualize phase objects without any additional optical elements, with state-of-the-art minimum detectable phase contrasts below 10 mrad. Furthermore, the combination of sensors with equal and opposite angular response on the same pixel array can be used to perform quantitative phase imaging in a single shot, using a customized reconstruction algorithm that is also developed in this work. By virtue of its system miniaturization and measurement simplicity, the phase imaging approach enabled by these devices is particularly significant for applications involving space-constrained and portable setups (such as point-of-care imaging and endoscopy) and measurements involving freely moving objects.
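The idea of pairing sensors with equal and opposite angular response can be illustrated with a toy one-dimensional model. The sketch assumes a responsivity that is linear in the incidence angle, unit magnification, and the small-angle relation between the local propagation angle and the phase gradient; the wavelength, slope value, and function names are hypothetical. It is not the reconstruction algorithm developed in the paper, only a simple differential-and-integrate scheme under these assumptions.

```python
import numpy as np

def simulate_pixel_pair(phase, dx, wavelength, slope, intensity=1.0):
    """Signals of two pixels whose responsivity is 1 +/- slope * theta (assumed linear)."""
    # Small-angle local propagation angle: theta = (lambda / 2 pi) * d(phase)/dx
    theta = wavelength / (2 * np.pi) * np.gradient(phase, dx)
    plus = intensity * (1.0 + slope * theta)
    minus = intensity * (1.0 - slope * theta)
    return plus, minus

def recover_phase(plus, minus, dx, wavelength, slope):
    """Recover the phase (up to a constant) from the normalized difference signal."""
    theta = (plus - minus) / (plus + minus) / slope   # intensity cancels out
    grad = 2 * np.pi / wavelength * theta             # back to phase gradient
    # Trapezoidal integration, with the phase set to zero at the first sample
    steps = 0.5 * (grad[1:] + grad[:-1]) * dx
    return np.concatenate(([0.0], np.cumsum(steps)))

# Hypothetical test object: a smooth Gaussian phase bump of 0.5 rad
x = np.linspace(-50e-6, 50e-6, 501)
dx = x[1] - x[0]
phase = 0.5 * np.exp(-(x / 10e-6) ** 2)

plus, minus = simulate_pixel_pair(phase, dx, wavelength=1.55e-6, slope=5.0)
estimate = recover_phase(plus, minus, dx, wavelength=1.55e-6, slope=5.0)
print(np.max(np.abs(estimate - (phase - phase[0]))))
```

Taking the ratio of the difference to the sum removes the dependence on the local intensity, which is why both signals are needed from a single exposure in this simplified picture.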

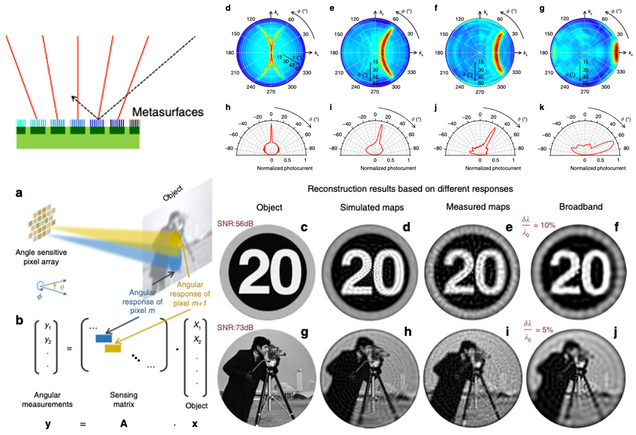

Optical spatial filtering with plasmonic directional image sensors

Jianing Liu, Hao Wang, Leonard C. Kogos, Yuyu Li, Yunzhe Li, Lei Tian, and Roberto Paiella

Optics Express Vol. 30, Issue 16, pp. 29074-29087 (2022).

⭑ Editors’ pick

Photonics provides a promising approach for image processing by spatial filtering, with the advantage of faster speeds and lower power consumption compared to electronic digital solutions. However, traditional optical spatial filters suffer from bulky form factors that limit their portability. Here we present a new approach based on pixel arrays of plasmonic directional image sensors, designed to selectively detect light incident along a small, geometrically tunable set of directions. The resulting imaging systems can function as optical spatial filters without any external filtering elements, leading to extreme size miniaturization. Furthermore, they offer the distinct capability to perform multiple filtering operations at the same time, through the use of sensor arrays partitioned into blocks of adjacent pixels with different angular responses. To establish the image processing capabilities of these devices, we present a rigorous theoretical model of their filter transfer function under both coherent and incoherent illumination. Next, we use the measured angle-resolved responsivity of prototype devices to demonstrate two examples of relevant functionalities: (1) the visualization of otherwise invisible phase objects and (2) spatial differentiation with incoherent light. These results are significant for a multitude of imaging applications ranging from microscopy in biomedicine to object recognition for computer vision.
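As a rough illustration of how an angle-dependent response can act as a spatial filter under coherent illumination, the sketch below weights the angular spectrum of a one-dimensional field by a response function and then computes the detected intensity. This is a simplified toy model, not the rigorous transfer-function model of the paper; the linear-ramp response, the wavelength, the sampling, and the function names are all assumptions.

```python
import numpy as np

def coherent_image(field, dx, wavelength, response):
    """Intensity after weighting each plane-wave component by response(sin_theta)."""
    fx = np.fft.fftfreq(field.size, d=dx)   # spatial frequency in cycles per meter
    sin_theta = wavelength * fx             # propagation direction of each component
    filtered = np.fft.ifft(np.fft.fft(field) * response(sin_theta))
    return np.abs(filtered) ** 2

# Hypothetical pure phase object: unit amplitude, Gaussian phase bump of 0.5 rad
dx = 1e-6
wavelength = 1e-6
x = np.arange(-128, 128) * dx
phase = 0.5 * np.exp(-(x / 10e-6) ** 2)
field = np.exp(1j * phase)

# Angle-independent response: the phase object leaves no trace in the intensity
flat = coherent_image(field, dx, wavelength, lambda s: np.ones_like(s))

# Asymmetric (assumed linear) response: intensity now tracks the phase gradient
ramp = coherent_image(field, dx, wavelength, lambda s: 1.0 + 5.0 * s)

print(np.ptp(flat), np.ptp(ramp))
```

With the linear ramp, multiplying the spectrum by a term proportional to spatial frequency is equivalent to adding a scaled spatial derivative of the field, so the recorded intensity becomes edge-enhanced along x.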

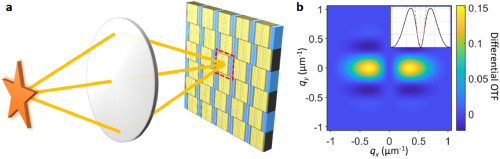

Plasmonic ommatidia for lensless compound-eye vision

Leonard C. Kogos, Yunzhe Li, Jianing Liu, Yuyu Li, Lei Tian & Roberto Paiella

Nature Communications 11: 1637 (2020).

⭑ In the news:

– BU ENG news: A Bug’s-Eye View

The vision system of arthropods such as insects and crustaceans is based on the compound-eye architecture, consisting of a dense array of individual imaging elements (ommatidia) pointing along different directions. This arrangement is particularly attractive for imaging applications requiring extreme size miniaturization, wide-angle fields of view, and high sensitivity to motion. However, the implementation of cameras directly mimicking the eyes of common arthropods is complicated by their curved geometry. Here, we describe a lensless planar architecture, where each pixel of a standard image-sensor array is coated with an ensemble of metallic plasmonic nanostructures that transmits only light incident along a small, geometrically tunable distribution of angles. A set of near-infrared devices providing directional photodetection peaked at different angles is designed, fabricated, and tested. Computational imaging techniques are then employed to demonstrate the ability of these devices to reconstruct high-quality images of relatively complex objects.
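One simple way to picture image reconstruction from directional pixels is as a linear inverse problem: each pixel signal is a weighted sum of the scene brightness over angle, with weights given by that pixel's angular response. The sketch below assumes Gaussian angular responses peaked at evenly spaced angles and uses Tikhonov-regularized least squares. Both choices, along with all names and numerical values, are illustrative assumptions and are not taken from the paper.

```python
import numpy as np

def response_matrix(peak_angles, scene_angles, width):
    """Row i holds the assumed Gaussian angular response of pixel i (angles in degrees)."""
    diff = scene_angles[None, :] - peak_angles[:, None]
    return np.exp(-0.5 * (diff / width) ** 2)

def reconstruct(signals, matrix, reg=1e-6):
    """Tikhonov-regularized least squares: argmin ||A x - y||^2 + reg * ||x||^2."""
    n = matrix.shape[1]
    return np.linalg.solve(matrix.T @ matrix + reg * np.eye(n), matrix.T @ signals)

# Hypothetical layout: 41 pixels with response peaks every 3 degrees
angles = np.linspace(-60.0, 60.0, 41)
A = response_matrix(angles, angles, width=3.0)

# Toy one-dimensional scene: an extended bright region plus a point source
scene = np.zeros(angles.size)
scene[10:15] = 1.0
scene[25] = 2.0

signals = A @ scene               # noiseless pixel readout
estimate = reconstruct(signals, A)
print(np.linalg.norm(estimate - scene) / np.linalg.norm(scene))
```

With measurement noise, the regularization weight `reg` would need to be larger, trading resolution for stability; the value used here is only suitable for the noiseless toy case.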