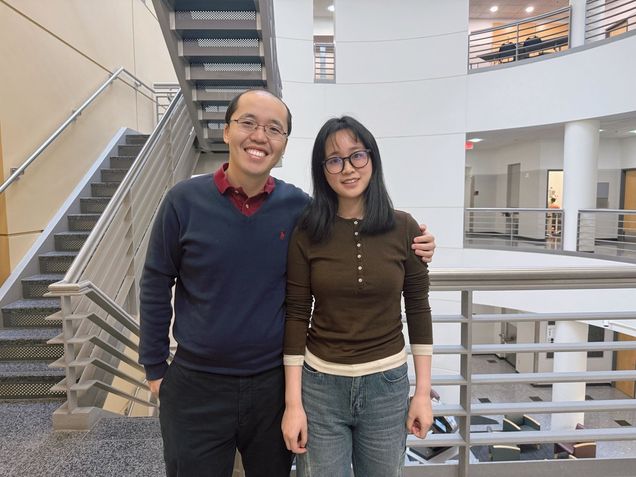

11th PhD from Tian Lab: Qianwan Yang

Title: Advancing Computational Multi-Aperture Fluorescence Microscopy via Model- and Learning-Based Techniques

Time: Tuesday, December 2, 2025, 10:00 a.m. – 12:00 p.m.

Advisor: Professor Lei Tian

Committee: Professor Vivek Goyal, Professor Jerome Mertz, Professor Janusz Konrad, Professor Lei Tian

Google Scholar:

https://scholar.google.com/citations?user=R9vLRnYAAAAJ&hl=en

Abstract

Traditional fluorescence microscopy faces inherent trade-offs among resolution, field of view (FOV), and system complexity. The Computational Miniature Mesoscope (CM2) addresses these trade-offs by placing a microlens array (MLA) in front of a single CMOS sensor: each microlens captures a sub-FOV, and intentional overlap between sub-FOVs maximizes the usable FOV, encoding extended high-resolution scenes on a compact device. This optical simplicity shifts complexity to computation: the periodic MLA produces an ill-conditioned, multiplexed measurement, and the system exhibits highly non-local, spatially varying (SV) PSFs. This dissertation explicitly models that SV imaging process and solves the associated inverse problem, enabling uniform, wide-FOV reconstructions from miniature hardware.

First, I establish a low-rank linear shift-variant (LSV) forward model. Sparse 3D PSFs are factorized via truncated singular value decomposition (TSVD) to yield a compact basis, and the corresponding coefficient maps are smoothly interpolated to capture field-dependent variation. Based on this forward model, I pose the inverse problem as a regularized least-squares objective and develop a model-based LSV ADMM solver that improves peripheral fidelity and overall reconstruction quality compared with a linear shift-invariant (LSI) ADMM solver. The LSV ADMM solver serves as the reconstruction baseline, while the LSV forward model provides a high-fidelity simulator for generating diverse measurements to train and benchmark learning-based methods. Building on this foundation, I introduce CM2Net, a multi-stage deep learning framework that combines view demixing, view synthesis, and refocusing enhancement to perform single-shot 3D particle localization. Trained purely on simulated measurements, CM2Net generalizes to experiments, achieving a 27 mm FOV over a 0.8 mm depth range with 26 μm lateral and 25 μm axial resolution, while running 1400× faster and using 19× less peak memory than the iterative baseline. To overcome the locality bias and implicit shift invariance of conventional CNNs and to generalize reconstruction to extended biological structures, I propose SV-FourierNet, which performs global, low-rank deconvolution in the Fourier domain using learned filters that approximate the inverse of the LSV forward model. SV-FourierNet attains uniform 7.8 μm resolution across a 6.5 mm FOV and shows interpretable agreement between its effective receptive fields and the physical PSFs. Finally, I introduce SV-CoDe, a scalable SV coordinate-conditioned deconvolution framework. By modulating compact convolutional features with coordinate-driven coefficients predicted by a lightweight MLP, SV-CoDe improves peripheral fidelity and decouples parameter and memory costs from FOV size, enabling SV reconstruction with scalable computation.
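As a rough illustration of the low-rank LSV forward model described above, the following NumPy sketch factorizes a stack of calibrated PSFs with a truncated SVD and then forms a measurement as a sum of weighted convolutions, y = Σ_r h_r * (w_r ⊙ x). It is a minimal 2D sketch under stated assumptions, not the dissertation's implementation: the function names (`tsvd_psf_basis`, `fft_convolve`, `lsv_forward`) are hypothetical, convolution is circular, and the coefficient maps `coeff_maps` are taken as already interpolated over the full field.

```python
import numpy as np

def tsvd_psf_basis(psfs, rank):
    """Factorize N calibrated PSFs of shape (N, H, W) with a truncated SVD.

    Returns basis PSFs of shape (rank, H, W) and per-position
    coefficients of shape (N, rank). Hypothetical helper for illustration.
    """
    n, h, w = psfs.shape
    mat = psfs.reshape(n, h * w)                 # one PSF per row
    u, s, vt = np.linalg.svd(mat, full_matrices=False)
    basis = vt[:rank].reshape(rank, h, w)        # shared basis PSFs h_r
    coeffs = u[:, :rank] * s[:rank]              # weights at calibration points
    return basis, coeffs

def fft_convolve(img, kernel):
    """Circular 2D convolution via FFT; the kernel is centered in its array."""
    k = np.fft.ifftshift(kernel)                 # move kernel center to (0, 0)
    return np.real(np.fft.ifft2(np.fft.fft2(img) * np.fft.fft2(k)))

def lsv_forward(obj, basis, coeff_maps):
    """Low-rank shift-variant model: y = sum_r h_r * (w_r . x).

    coeff_maps has shape (rank, H, W). Interpolating the sparse
    coefficients onto the full grid is assumed to be done elsewhere.
    """
    out = np.zeros(obj.shape, dtype=float)
    for h_r, w_r in zip(basis, coeff_maps):
        out += fft_convolve(w_r * obj, h_r)      # weight the object, then blur
    return out
```

With `rank` equal to the number of calibration positions the factorization is exact; a smaller rank trades PSF fidelity for fewer convolutions per forward pass, which is the motivation for a low-rank model in the first place.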

Together, the calibrated low-rank LSV model with an LSV ADMM baseline, CM2Net, SV-FourierNet, and SV-CoDe unify physics and learning to resolve view multiplexing and spatially varying blur, enabling uniform, wide-FOV, high-throughput fluorescence imaging on compact hardware.