Waleed defended his PhD dissertation!

Congratulations, Waleed!

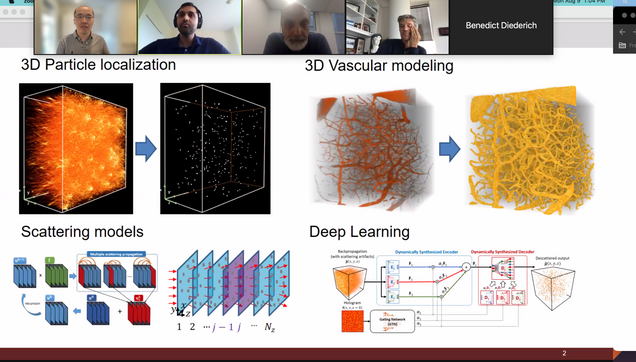

Title: Deep learning for large-scale holographic 3D particle localization and two-photon angiography segmentation

Presenter: Waleed Tahir

Date: August 9th, 2021

Time: 1:00 PM – 3:00 PM

Advisor: Professor Lei Tian (ECE, BME)

Chair: Professor Hamid Nawab (ECE, BME)

Committee: Professor Vivek Goyal (ECE), Professor David Boas (BME, ECE), Professor Jerome Mertz (BME, ECE, Physics)

Abstract:

Digital inline holography (DIH) is a popular imaging technique in which an unknown 3D object is estimated from a single 2D intensity measurement, known as the hologram. One well-known application of DIH is 3D particle localization, which has found numerous uses in biological sample characterization and optical measurement. Traditional DIH techniques rely on linear models of light scattering that account only for single scattering and ignore multiple scattering among the scatterers. This linear approximation becomes inaccurate at high particle densities and large refractive index contrasts. Incorporating multiple scattering into the estimation process has been shown to improve reconstruction accuracy in numerous imaging modalities; however, existing multiple scattering solvers become computationally prohibitive for large-scale problems comprising millions of voxels within the scattering volume.

This thesis addresses this limitation by introducing computationally efficient frameworks that effectively account for multiple scattering when reconstructing large-scale 3D data. We demonstrate the effectiveness of the proposed schemes on a DIH setup for 3D particle localization and show that incorporating multiple scattering significantly improves localization performance compared to traditional single-scattering approaches. First, we discuss a scheme in which multiple scattering is computed using the iterative Born approximation: the 3D volume is divided into discrete 2D slices, and the scattering among them is computed. This method makes it feasible to compute multiple scattering for large volumes and significantly improves 3D particle localization compared to traditional methods.
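To make the contrast between the linear model and the slice-wise iterative Born approximation concrete, here is a minimal NumPy sketch of both forward models on a toy slice stack. It is not the thesis code: the function names, the angular-spectrum propagator, the unit-amplitude plane-wave illumination, and the choice to fold the Green's-function prefactor and slice thickness into each slice's scattering strength (a paraxial simplification) are all assumptions made for illustration. With `n_iter=0` the model reduces to single scattering; each further iteration lets every slice rescatter the fields arriving from all the other slices. Because each iteration multiplies by the scattering strength, the loop only converges for weak scatterers, which is the limitation discussed next.

```python
import numpy as np


def transfer_function(n, dx, wavelength, dz):
    """Angular-spectrum kernel exp(i*kz*|dz|) on an n x n grid (evanescent waves dropped)."""
    fx = np.fft.fftfreq(n, d=dx)
    fxx, fyy = np.meshgrid(fx, fx, indexing="ij")
    arg = 1.0 / wavelength**2 - fxx**2 - fyy**2
    kz = 2 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    return np.where(arg > 0, np.exp(1j * kz * abs(dz)), 0.0)


def propagate(field, H):
    """Apply a precomputed transfer function H to a 2D complex field."""
    return np.fft.ifft2(np.fft.fft2(field) * H)


def hologram(slices, z, z_det, dx, wavelength, n_iter=0, tol=1e-10):
    """Intensity hologram of a stack of 2D scattering slices (hypothetical toy model).

    n_iter=0: single-scattering (linear, first Born) model.
    n_iter>0: iterative Born approximation across the slices.
    """
    m, n, _ = slices.shape
    k0 = 2 * np.pi / wavelength
    # Incident plane wave evaluated at each slice position.
    u_in = np.exp(1j * k0 * np.asarray(z, dtype=float))[:, None, None] * np.ones((m, n, n))
    # Slice-to-slice transfer functions, only needed for multiple scattering.
    G = None
    if n_iter > 0:
        G = [[transfer_function(n, dx, wavelength, z[k] - z[j]) for j in range(m)]
             for k in range(m)]
    u = u_in.copy()
    for _ in range(n_iter):
        u_new = u_in.copy()
        for k in range(m):
            for j in range(m):
                if j != k:  # field scattered by slice j arriving at slice k
                    u_new[k] += propagate(slices[j] * u[j], G[k][j])
        change = np.linalg.norm(u_new - u) / np.linalg.norm(u)
        u = u_new
        if change < tol:  # fixed point reached
            break
    # Detector field: unscattered wave plus every slice's scattered field.
    field = np.exp(1j * k0 * z_det) * np.ones((n, n), dtype=complex)
    for k in range(m):
        field += propagate(slices[k] * u[k], transfer_function(n, dx, wavelength, z_det - z[k]))
    return np.abs(field) ** 2


# Toy example: two weakly scattering "particles", one per slice, laterally aligned.
n, dx, wavelength = 64, 1.0, 0.5  # grid size, pixel pitch, wavelength (same length unit)
slices = np.zeros((2, n, n), dtype=complex)
slices[0, 30:33, 30:33] = 0.05j
slices[1, 30:33, 30:33] = 0.05j
z, z_det = [0.0, 20.0], 100.0
I_single = hologram(slices, z, z_det, dx, wavelength, n_iter=0)
I_multi = hologram(slices, z, z_det, dx, wavelength, n_iter=10)
print(np.abs(I_multi - I_single).max())
```

The printed value is the largest change that rescattering between the two slices introduces into the hologram; it grows with the scattering strength, which is why the linear model degrades for dense or high-contrast samples.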

One limitation of this method, however, was that the multiple scattering computations failed to converge when the sample was strongly scattering. This limitation stemmed from the method's reliance on the iterative Born approximation, which assumes that the sample is weakly scattering. We address this challenge in our subsequent work by incorporating an alternative multiple scattering model that accounts for strongly scattering samples without irregular convergence behavior. We demonstrate the improvement of the proposed method over linear scattering models for 3D particle localization and show statistically that it accurately models the hologram formation process.

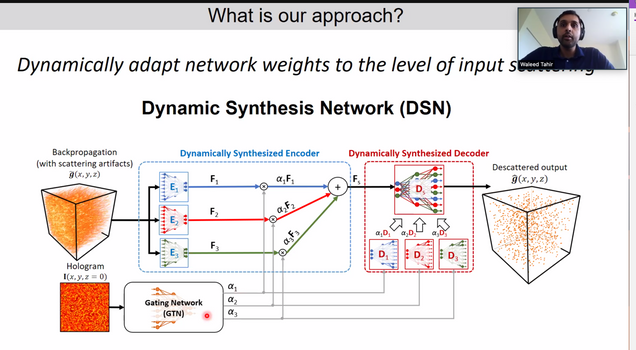

Following this work, we address an outstanding challenge faced by many imaging applications: descattering, or the removal of scattering artifacts. While deep neural networks (DNNs) have become the state of the art for descattering in many imaging modalities, multiple DNNs generally have to be trained when the range of scattering artifact levels is very broad. This is because, for optimal descattering performance, each network has been shown to require specialization to a narrow range of scattering artifact levels. We address this challenge by presenting a novel DNN framework that dynamically adapts its network parameters to the level of scattering artifacts in the input, and we demonstrate optimal descattering performance without the need to train multiple DNNs. We apply our technique to a DIH setup for 3D particle localization and show that, even when trained on purely simulated data, the network achieves improved localization on both simulated and experimental data compared to existing methods.
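One generic way to let a network's parameters track the artifact level of its input is to keep a small bank of "expert" convolution kernels and blend them with input-dependent weights before convolving. The NumPy sketch below illustrates that idea for a single 2D convolution; it is an assumption for illustration, not the framework from the thesis. The gating features (the input's mean and standard deviation as a crude proxy for artifact level), the gate parameters `gate_w` and `gate_b`, and all function names are hypothetical, and in a real network the kernels and the gate would be learned jointly by backpropagation.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def softmax(x):
    e = np.exp(x - np.max(x))
    return e / e.sum()


def conv2d_same(image, kernel):
    """2D cross-correlation with zero padding; output has the image's shape (odd kernel)."""
    kh, kw = kernel.shape
    padded = np.pad(image, ((kh // 2, kh // 2), (kw // 2, kw // 2)))
    windows = sliding_window_view(padded, (kh, kw))  # shape (H, W, kh, kw)
    return np.einsum("ijkl,kl->ij", windows, kernel)


def dynamic_conv(image, kernel_bank, gate_w, gate_b):
    """Blend K expert kernels with weights computed from the input itself (hypothetical).

    kernel_bank: (K, kh, kw); gate_w: (K, 2); gate_b: (K,).
    """
    features = np.array([image.mean(), image.std()])     # crude input statistics
    weights = softmax(gate_w @ features + gate_b)        # one weight per expert, sums to 1
    kernel = np.tensordot(weights, kernel_bank, axes=1)  # synthesized (kh, kw) kernel
    return conv2d_same(image, kernel), weights


rng = np.random.default_rng(0)
bank = rng.normal(size=(4, 3, 3))
gate_w = rng.normal(size=(4, 2))
gate_b = np.zeros(4)
clean = rng.random((32, 32))
noisy = clean + 0.5 * rng.normal(size=(32, 32))  # stand-in for stronger artifacts
out_clean, w_clean = dynamic_conv(clean, bank, gate_w, gate_b)
out_noisy, w_noisy = dynamic_conv(noisy, bank, gate_w, gate_b)
print(w_clean.round(3), w_noisy.round(3))  # different inputs yield different kernel mixtures
```

In a descattering network, layers of this kind could replace ordinary convolutions so that one trained model shifts its effective filters as the artifact level changes, rather than relying on a separate network for each artifact range.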

Finally, we consider the problem of 3D segmentation and localization of blood vessels from large-scale two-photon microscopy (2PM) angiograms of the mouse brain. 2PM is a widely adopted imaging modality for 3D neuroimaging. The localization of brain vasculature from 2PM angiograms, and its subsequent mathematical modeling, has broad implications for disease diagnosis and drug development. Vascular segmentation, in which blood vessels are separated from the background, is generally the first step in the localization process. Because 2PM signal quality decays rapidly with increasing imaging depth, segmenting and localizing blood vessels from 2PM angiograms remains problematic, especially for deep vasculature. In this work, we introduce a high-throughput DNN with a semi-supervised loss function that not only localizes much deeper vasculature than existing methods but also does so with greater accuracy and speed.