Neural Prosthetics for Speech Restoration

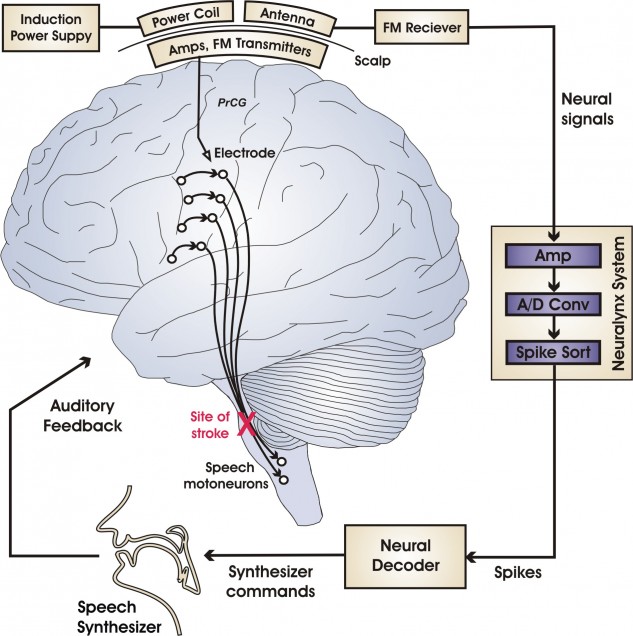

In collaboration with Dr. Philip Kennedy and colleagues at Neural Signals, Inc., we created the world’s first brain-computer interface for real-time speech generation in 2007. This system decoded neural signals recorded from an electrode implanted in the speech motor cortex of a human volunteer with locked-in syndrome (characterized by full consciousness with near-complete paralysis) while he imagined speaking. This work received widespread coverage in the popular media, including pieces by CNN, the Boston Globe, and Esquire Magazine. A schematic of the brain-computer interface is provided below; further details about the system can be found in Guenther et al. (2009).

Schematic of a brain-computer interface for real-time speech generation (Guenther et al., 2009).

In more recent work, we developed a novel speech synthesizer that produces natural-sounding speech from a simple two-dimensional input, which can be generated from brain signals or from muscle signals recorded with electromyography (EMG). The video below, created by members of the Stepp Lab at Boston University, shows a user controlling this synthesizer via EMG signals from the face.

Publications

- Cler, M.J., Nieto-Castanon, A., Guenther, F.H., Fager, S., and Stepp, C.E. (2016). Surface electromyographic control of a novel phonemic interface for speech synthesis. Augmentative and Alternative Communication, 32, 120-130. NIHMSID: NIHMS802522

- Brumberg, J.S., Wright, E.J., Andreasen, D.S., Guenther, F.H., and Kennedy, P.R. (2011). Classification of intended phoneme production from chronic intracortical microelectrode recordings in speech motor cortex. Frontiers in Neuroscience, 5, Article 65. PMCID: PMC3096823

- Brumberg, J.S., Nieto-Castanon, A., Kennedy, P.R., and Guenther, F.H. (2010). Brain-computer interfaces for speech communication. Speech Communication, 52 (4), 367-379. PMCID: PMC2829990

- Guenther, F.H., Brumberg, J.S., Wright, E.J., Nieto-Castanon, A., Tourville, J.A., Panko, M., Law, R., Siebert, S.A., Bartels, J.L., Andreasen, D.S., Ehirim, P., Mao, H., and Kennedy, P.R. (2009). A wireless brain-machine interface for real-time speech synthesis. PLoS ONE, 4 (12), e8218. PMCID: PMC2784218