Category: Javascript

Using JWTs for Authentication in RESTful Applications

The problem

Applications built using the MEAN stack typically use Node, MongoDB and Express on the back end to implement business logic fronted by a RESTful interface. Most of the work is done on the back end, and Angular serves as an enhanced view in the MVC (model-view-controller) pattern. Keeping business rules and logic on the back end means that the application is view-agnostic; switching from Angular to React or straight jQuery or PHP should result in the same functionality.

It’s often the case that we need to protect some back-end routes, making them available only to authenticated users. The challenge is that our back-end services should be stateless, which means that we need a way for front-end code to provide proof of authentication at each request. At the same time, we can’t trust any front-end code, since it is out of our control. We need an irrefutable mechanism for proving authentication that is entirely managed on the back end. We also want the mechanism to be out of the control of the client code, and done in such a way that it would be difficult or impossible to spoof.

The solution

JSON Web Tokens (JWTs) are a good solution for these requirements. The token is essentially a string made up of three Base64URL-encoded parts separated by dots:

- A header that contains information about the algorithms used to generate the token

- A body with one or more claims

- A cryptographic signature based on the header and body
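To make the three parts concrete, here is a minimal sketch that splits a token on its dots and decodes the header and body. The helper names (decodeSegment, describeToken) and the sample token are made up for illustration; this is not how the jsonwebtoken package is implemented.

```javascript
// Sketch: split a JWT into its three dot-separated parts and decode the first two.
// decodeSegment and describeToken are hypothetical helper names.

function decodeSegment(segment) {
  // Convert base64url to plain base64, then parse the JSON text
  const base64 = segment.replace(/-/g, '+').replace(/_/g, '/')
  return JSON.parse(Buffer.from(base64, 'base64').toString('utf8'))
}

function describeToken(token) {
  const [header, payload, signature] = token.split('.')
  return {
    header: decodeSegment(header),
    payload: decodeSegment(payload),
    signature // left encoded; it is raw bytes, not JSON
  }
}

// Build a sample token inline so the example is self-contained.
// The third part is a placeholder, not a real signature.
const encode = (obj) => Buffer.from(JSON.stringify(obj)).toString('base64')
  .replace(/\+/g, '-').replace(/\//g, '_').replace(/=+$/, '')

const sampleToken = [
  encode({ alg: 'HS256', typ: 'JWT' }),
  encode({ twitterAccessToken: 'example-token' }),
  'signature-placeholder'
].join('.')

console.log(describeToken(sampleToken))
```

Note that anyone holding the token can read the header and body this way; only the signature requires the secret.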

JWTs are formally described in RFC 7519. There’s nothing inherently authentication-y about them; they are a mechanism to encapsulate and transmit data between two parties that ensures the integrity of the information. We can leverage this to give clients a way to prove their status without involving client-side code at all. Here’s the flow:

- Client authenticates with the server (or via a third-party such as an OAuth provider)

- Server creates a signed JWT describing authentication status and authorized roles using a secret that only the server knows

- Server returns JWT to client in a session cookie marked httpOnly

- At each request the client automatically sends the cookie and the enclosed JWT to the server

- Server validates the JWT on each request and decides whether to allow client access to protected resources, returning either the requested resource or an error status

Using a cookie to transmit the JWT provides a simple, automated way to pass the token back and forth between the client and the server and also gives the server control over the lifecycle of the cookie. Marking the cookie httpOnly means that it is unavailable to client functions. And, since the token is signed using a secret known only to the server, it is difficult or impossible to spoof the claims in the token.

The implementation discussed in this article uses a simple hash-based (HMAC) signing method. The header and body of the JWT are Base64URL encoded, and then the encoded header and body are hashed together with a server-side secret to produce a signature. Another option is to use a public/private key pair to sign and verify the JWT. In the example, the JWT is both signed and verified only on the server, and so there’s no benefit to using a key pair.
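The hash-based signing described above can be sketched with nothing but Node's built-in crypto module. This assumes the HMAC-SHA256 (HS256) variant, and the function names (base64url, signToken, verifyToken) are my own; in the real application the jsonwebtoken package does this work.

```javascript
const crypto = require('crypto')

// base64url-encode a string or Buffer (hypothetical helper)
function base64url(input) {
  return Buffer.from(input).toString('base64')
    .replace(/\+/g, '-').replace(/\//g, '_').replace(/=+$/, '')
}

// HMAC-SHA256 over "<encoded header>.<encoded body>", keyed with the secret
function computeSignature(header, body, secret) {
  return base64url(
    crypto.createHmac('sha256', secret).update(`${header}.${body}`).digest()
  )
}

function signToken(payload, secret) {
  const header = base64url(JSON.stringify({ alg: 'HS256', typ: 'JWT' }))
  const body = base64url(JSON.stringify(payload))
  return `${header}.${body}.${computeSignature(header, body, secret)}`
}

// Recompute the signature and compare it to the one on the token
function verifyToken(token, secret) {
  const [header, body, signature] = token.split('.')
  if (!header || !body || !signature) return false
  const expected = Buffer.from(computeSignature(header, body, secret))
  const actual = Buffer.from(signature)
  // timingSafeEqual requires equal lengths, so check that first
  return actual.length === expected.length &&
    crypto.timingSafeEqual(actual, expected)
}

const token = signToken({ twitterAccessToken: 'example-token' }, 'server-secret')
console.log(verifyToken(token, 'server-secret')) // true
console.log(verifyToken(token, 'wrong-secret'))  // false
```

Because the signature covers both the header and the body, changing either one without knowing the secret makes verification fail.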

JWT authorization in code

Let’s take a look at some code that implements our workflow. The application that I’m using in the following examples relies on third-party OAuth authentication from Twitter, and minimal profile information is held over for a user from session to session. The Twitter access token returned after a successful authentication is used as a key to a user record in a mongoDB database. The token exists until the user logs out or the user re-authenticates after having closed the browser window (thus invalidating the session cookie containing the JWT). Note that I’ve simplified error handling for readability.

Dependencies

Two convenience packages are used in the following code examples:

- cookie-parser – Express middleware to simplify cookie handling

- jsonwebtoken – abstracts signing and validation of JWTs, based on the node-jws package

I also use Mongoose as a layer on top of mongoDB; it provides ODM via schemas and also several handy query methods.

Creating the JWT and placing it in a session cookie

Once authentication with Twitter completes, Twitter invokes a callback method on the application, passing back an access token and secret, and information about the user such as their Twitter ID and screen name (passed in the results object). Relevant information about the user is stored in a database document:

User.findOneAndUpdate({twitterID: twitterID},
  {
    twitterID: twitterID,
    name: results.screen_name,
    username: results.screen_name,
    twitterAccessToken: oauth_access_token,
    twitterAccessTokenSecret: oauth_access_token_secret
  },
  {upsert: true},
  function (err, result) {
    if (err) {
      console.log(err)
    } else {
      console.log("Updated", results.screen_name, "in database.")
    }
  })

The upsert option directs mongoDB to create a document if it is not present; otherwise it updates the existing document.

Next, a JWT is assembled. The jsonwebtoken package takes care of creating the header of the JWT, so we just fill in the body with the Twitter access token. It is the access token that we’ll use to find the user in the database during authorization checks.

const jwtPayload = {
  twitterAccessToken: oauth_access_token
}

The JWT is then signed.

const authJwtToken = jwt.sign(jwtPayload, jwtConfig.jwtSecret)

jwtSecret is a string, and can be either a single value used for all users (as it is in this application) or a per-user value, in which case it must be stored along with the user record. A strategy for per-user secrets might be to use the OAuth access token secret returned by Twitter, although it introduces a small risk if the response from Twitter has been intercepted. A concatenation of the Twitter secret and a server secret would be a good option. The secret is used during validation of the signature when authorizing a client’s request. Since it is stored on the server and never shared with the client, it is an effective way to verify that a token presented by a client was in fact signed by the server.
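As a rough illustration of the per-user option, the sketch below concatenates a server secret with each user's Twitter token secret and uses the result as an HMAC key. SERVER_SECRET, secretForUser and signatureFor are hypothetical names, and the user objects are made up; the application in this article uses a single shared secret instead.

```javascript
const crypto = require('crypto')

// Hypothetical server-wide secret; in practice it would come from configuration
const SERVER_SECRET = 'server-secret'

// Combine the server secret with the user's Twitter token secret
function secretForUser(user) {
  return SERVER_SECRET + '.' + user.twitterAccessTokenSecret
}

// Sign some data with the per-user secret
function signatureFor(data, user) {
  return crypto.createHmac('sha256', secretForUser(user)).update(data).digest('hex')
}

// Made-up user records for the demonstration
const alice = { name: 'alice', twitterAccessTokenSecret: 'alice-secret' }
const bob = { name: 'bob', twitterAccessTokenSecret: 'bob-secret' }

// The same data signed for two users yields two different signatures
console.log(signatureFor('same-data', alice) === signatureFor('same-data', bob)) // false
```

One consequence of this design is that the server has to work out which user a token claims to belong to before it can pick the secret to verify it with, so the user lookup comes before the signature check rather than after it.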

The signed JWT is placed on a cookie. The cookie is marked httpOnly, which restricts visibility on the client, and its expiration time is set to zero, making it a session-only cookie.

const cookieOptions = {
  httpOnly: true,
  expires: 0
}

res.cookie('twitterAccessJwt', authJwtToken, cookieOptions)

Keep in mind that the cookie isn’t visible to client-side code, so if you need a way to tell the client that the user is authenticated you’ll want to add a flag to another, visible, cookie or otherwise pass data indicating authorization status back to the client.
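One way to do that is sketched below: a second cookie, readable by scripts, that carries nothing but a flag. The helper name setAuthCookies and the cookie name loggedIn are assumptions for illustration, and fakeRes stands in for an Express response so the snippet runs on its own.

```javascript
// setAuthCookies is a hypothetical helper; `res` is expected to behave
// like an Express response object.
function setAuthCookies(res, authJwtToken) {
  // The JWT itself stays hidden from client-side scripts
  res.cookie('twitterAccessJwt', authJwtToken, { httpOnly: true, expires: 0 })
  // A separate, script-readable flag that carries no secrets
  res.cookie('loggedIn', 'true', { httpOnly: false, expires: 0 })
}

// Stand-in for an Express response so the sketch is self-contained
const fakeRes = {
  cookies: {},
  cookie(name, value, options) {
    this.cookies[name] = { value, options }
  }
}

setAuthCookies(fakeRes, 'header.body.signature')
console.log(Object.keys(fakeRes.cookies)) // [ 'twitterAccessJwt', 'loggedIn' ]
```

The flag is only a hint for the UI. A client can set it to anything, so the server still has to validate the JWT on every protected request.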

Why a cookie and a JWT?

We certainly could send the JWT back to the client as an ordinary object, and use the data it contains to drive client-side code. The payload is not encrypted, just Base64 encoded, and would thus be accessible to the client. It could be placed on the session for transport to and from the server, though this would have to be done on each request-response pair, on both the server and the client, since this kind of session variable is not automatically passed back and forth.

Cookies, on the other hand, are automatically sent with each request and each response without any additional action. As long as the cookie hasn’t expired or been deleted it will accompany each request back to the server. Further, marking the cookie httpOnly hides it from client-side code, reducing the opportunity for it to be tampered with. This particular cookie is only used for authorization, so there’s no need for the client to see it or interact with it.

Authorizing requests

At this point we’ve handed the client an authorization token that has been signed by the server. Each time the client makes a request to the back-end API, the token is passed inside a session cookie. Remember, the server is stateless, and so we need to verify the authenticity of the token on each request. There are two steps in the process, plus an optional third:

- Check the signature on the token to prove that the token hasn’t been tampered with

- Verify that the user associated with the token is in our database

- [optionally] Retrieve a set of roles for this user

Simply checking the signature isn’t enough. That just tells us that the information in the token hasn’t been tampered with since it left the server, not that the owner is who they say they are; an attacker might have stolen the cookie or otherwise intercepted it. The second step gives us some assurance that the user is valid; the database entry was created inside a Twitter OAuth callback, which means that the user had just authenticated with Twitter. The token itself is in a session cookie, meaning that it is not persisted on the client side (it is held in memory, not on disk), and it has the httpOnly flag set, which limits its visibility on the client.

In Express, we can create a middleware function that validates protected requests. Not all requests need such protection; there might be parts of the application that are open to non-logged-in users. A restricted-access POST request on the URI /db looks like this:

// POST Create a new user (only available to logged-in users)
//
router.post('/db', checkAuthorization, function (req, res, next) { ... })

In this route, checkAuthorization is a function that validates the JWT sent by the client:

const checkAuthorization = function (req, res, next) {
  // 1. See if there is a token on the request...if not, reject immediately
  const userJWT = req.cookies.twitterAccessJwt
  if (!userJWT) {
    return res.status(401).send('Invalid or missing authorization token')
  }

  // 2. There's a token; see if it is a valid one and retrieve the payload.
  //    jwt.verify throws if the signature is invalid or the token is malformed.
  let userJWTPayload
  try {
    userJWTPayload = jwt.verify(userJWT, jwtConfig.jwtSecret)
  } catch (err) {
    // Kill the token since it is invalid
    res.clearCookie('twitterAccessJwt')
    return res.status(401).send('Invalid or missing authorization token')
  }

  // 3. There's a valid token...see if it is one we have in the db as a logged-in user
  User.findOne({twitterAccessToken: userJWTPayload.twitterAccessToken})
    .then(function (user) {
      if (!user) {
        res.status(401).send('User not currently logged in')
      } else {
        console.log('Valid user:', user.name)
        next()
      }
    })
    .catch(next)
}

Assuming that the authorization cookie exists (Step 1), it is then checked for a valid signature using the secret stored on the server (Step 2). When called synchronously, jwt.verify returns the JWT payload object if the signature is valid and throws an error if it is not, which is why the call is wrapped in a try/catch. A missing or invalid cookie or JWT results in a 401 (Unauthorized) response to the client, and in the case of an invalid JWT the cookie itself is deleted.

If steps 1 and 2 are valid, we check the database to see if we have a record of the access token carried on the JWT, using the Twitter access token as the key. If a record is present it is a good indication that the client is authorized, and the call to next() at the end of Step 3 passes control to the next function in the middleware chain, which is in this case the rest of the POST route.

Logging the user out

If the user explicitly logs out, a back-end route is called to do the work:

// This route logs the user out:
// 1. Delete the cookie
// 2. Delete the access key and secret from the user record in mongo
//
router.get('/logout', checkAuthorization, function (req, res, next) {
  const userJWT = req.cookies.twitterAccessJwt
  const userJWTPayload = jwt.verify(userJWT, jwtConfig.jwtSecret)
  res.clearCookie('twitterAccessJwt')
  User.findOneAndUpdate({twitterAccessToken: userJWTPayload.twitterAccessToken},
    {
      twitterAccessToken: null,
      twitterAccessTokenSecret: null
    },
    function (err, result) {
      if (err) {
        console.log(err)
      } else {
        console.log("Deleted access token for", result.name)
      }
      res.render('twitterAccount', {loggedIn: false})
    })
})

We again check to see if the user is logged in, since we need the validated contents of the JWT in order to update the user’s database record.

If the user simply closes the browser without logging out, the session cookie containing the JWT will be removed on the client. On next access there will be no cookie for checkAuthorization to validate, and the user will be directed to the login page; successful login will update the access token and associated secret in the database.

Comments

In no particular order…

Some services set short expiration times on access tokens, and provide a method to exchange a ‘refresh’ token for a new access token. In that case an extra step would be necessary in order to update the token stored on the session cookie. Since access to third-party services is handled on the server, this would be transparent to the client.

This application only has one role: a logged-in user. For apps that require several roles, they should be stored in the database and retrieved on each request.
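A minimal sketch of what a role check might look like as Express-style middleware follows. It assumes that an earlier middleware (for example an extended checkAuthorization) has loaded the user record onto req.user and that the record has a roles array; neither of those exists in the code above, and requireRole and fakeResponse are hypothetical names.

```javascript
// requireRole is a hypothetical middleware factory. It assumes req.user was
// populated from the database earlier in the chain and has a `roles` array.
function requireRole(role) {
  return function (req, res, next) {
    const roles = (req.user && req.user.roles) || []
    if (roles.includes(role)) {
      return next()
    }
    // Authenticated but not allowed: 403 rather than 401
    res.status(403).send('Insufficient privileges')
  }
}

// Stand-in for an Express response so the sketch runs on its own
function fakeResponse() {
  return {
    statusCode: 200,
    status(code) { this.statusCode = code; return this },
    send(body) { this.body = body; return this }
  }
}

const adminOnly = requireRole('admin')

const res1 = fakeResponse()
let allowed = false
adminOnly({ user: { roles: ['admin'] } }, res1, () => { allowed = true })

const res2 = fakeResponse()
adminOnly({ user: { roles: ['member'] } }, res2, () => {})

console.log(allowed, res2.statusCode) // true 403
```

In a route this would sit after the authentication check, along the lines of router.post('/db', checkAuthorization, requireRole('admin'), handler).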

An architecture question comes up in relation to checkAuthorization. The question is, who should be responsible for handling an invalid user? In practical terms, should checkAuthorization return a boolean that can be used by each protected route? Having checkAuthorization handle invalid cases centralizes this behavior, but at the expense of losing flexibility in the routes. I’ve leaned both ways on this…an unauthorized user is unauthorized, period, and so it makes sense to handle that function in checkAuthorization; however, there might be a use case in which a route passes back a subset of data for unauthenticated users, or adds an extra bit of information for authorized users. For this particular example the centralized version works fine, but you’ll want to evaluate the approach based on your own use cases.
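For comparison, here is a rough sketch of the more flexible alternative: a middleware that never rejects and only records a boolean, leaving each route to decide what to do with it. identifyUser, verifyCookie, listItems and the fake request and response objects are all hypothetical, and verifyCookie is a synchronous stand-in for what would really be a signature check plus a database lookup.

```javascript
// identifyUser is a hypothetical alternative to checkAuthorization.
// verifyCookie is injected so the sketch can run without a database.
function identifyUser(verifyCookie) {
  return function (req, res, next) {
    const token = req.cookies && req.cookies.twitterAccessJwt
    req.isAuthorized = Boolean(token) && verifyCookie(token)
    next() // always continue; the route decides what to do
  }
}

// A route handler that returns a subset of data to anonymous users
function listItems(req, res) {
  const items = [{ title: 'public item' }]
  if (req.isAuthorized) {
    items.push({ title: 'members-only item' })
  }
  res.json(items)
}

// Stand-ins so the example is self-contained
const knownTokens = new Set(['good-token'])
const identify = identifyUser((token) => knownTokens.has(token))

function run(cookies) {
  const req = { cookies }
  const res = { json(body) { this.body = body } }
  identify(req, res, () => listItems(req, res))
  return res.body
}

console.log(run({ twitterAccessJwt: 'good-token' }).length) // 2
console.log(run({}).length)                                 // 1
```

The trade-off is the one described above: every protected route now has to remember to check req.isAuthorized, which is easier to get wrong than a single gatekeeper.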

The routes in this example simply render a Pug template that displays a user’s Twitter account information, and a flag (loggedIn) is used to show and hide UI components. A more complex app will need a cleaner way of letting the client know the status of a user.

A gist with sample code is available at gist:bdb91ed5f7d87c5f79a74d3b4d978d3d

Composing the results of nested asynchronous calls in Javascript

Grokking asynchronicity

One of the toughest things to get your head around in Javascript is how to handle nested asynchronous calls, especially when a function depends on the result of a preceding one. I see this quite a bit in my software engineering course where teams are required to synthesize new information from two distinct third-party data sources. The pattern is to look something up that returns a collection, and then look something else up for each of the members in the collection.

There are multiple approaches to this problem, but most rely on Javascript Promises, either from a third-party library in ES5 and earlier or ES6's built-in Promises. In this article we'll combine ES6 native Promises with the async.js package. This isn't a discussion of ES6 Promises per se, but it might help you get to the point of understanding them.

The file discussed here is intended to be mounted on a route in a Node/Express application:

//From app.js
const rp = require('./routes/request-promises')
app.use('/rp', rp)

[Unfortunately the WordPress implementation used here does a horrific job displaying code, so the code snippets below are images for the bulk of the discussion. The complete file is available at the bottom of the post.]

The route here uses two APIs:

https://weathers.co for current weather in 3 cities, and

https://api.github.com/search/repositories?q=<term> to search GitHub repos for the 'hottest' city.

In our example we'll grab weather for 3 cities, pick the hottest one, then hit GitHub with a search for repos that have that city's name. It's a bit contrived but we just want to demonstrate using the results of one API call to populate a second when all of them are asynchronous.

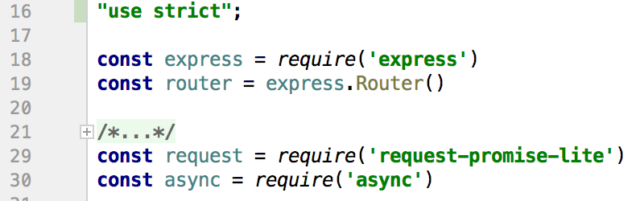

There are two packages (apart from Express) used:

The first, request-promise-lite, is a reduced-size packaging of the standard request-promise package; we don't need all of the features of the larger library. request-promise itself is a wrapper that adds Promises to the standard request package used to make network calls, for example to an HTTP endpoint. The second is async (async.js), which provides the waterfall method used below.

There's only one route in this module, a GET on the top-level URL. In the snippet above, this router is mounted at '/rp', and so the complete URL path is http://localhost:3000/rp (assuming the server is set to listen to port 3000).

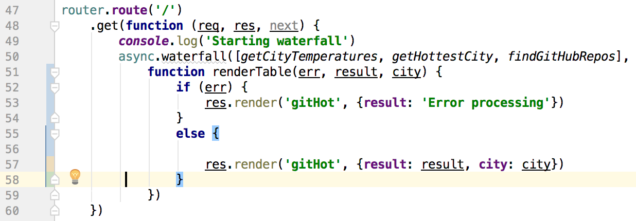

On line 50 the async.waterfall method is used to create a chain of functions that will execute in turn. Each function does its asynchronous work with Promises and invokes its callback only once that work has resolved, so the next function in line doesn't start until the preceding one has finished. We need this because each function provides data that the following function will use in some way. That data is handed to the callback, which passes it along to the next function in the waterfall.

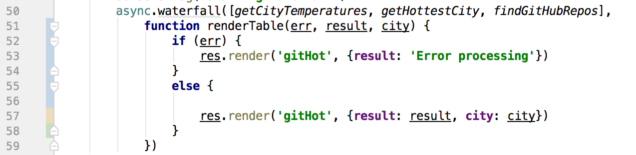

async.waterfall takes two parameters in this implementation: An array of functions to call in order, and a final function (renderTable on line 51) to execute last. A small side note: The final function can be anonymous, but when debugging a big application it can be tough to figure out exactly which anonymous function out of potentially hundreds is causing a problem. Naming those functions helps immensely when debugging!
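To show the mechanics, here is a stripped-down stand-in for the waterfall idea. miniWaterfall is my own hypothetical function, not the async.js implementation, which has more features and safeguards; the point is only to show how each function's results become the next function's arguments.

```javascript
// miniWaterfall: a simplified, hypothetical stand-in for async.waterfall.
// Each task receives the previous task's results followed by a callback.
function miniWaterfall(tasks, done) {
  let index = 0

  function next(err, ...results) {
    // Stop on the first error, or when every task has run
    if (err || index === tasks.length) {
      return done(err, ...results)
    }
    const task = tasks[index++]
    task(...results, next)
  }

  next(null)
}

miniWaterfall([
  function first(cb) {
    // Simulate asynchronous work
    setTimeout(function () { cb(null, 2) }, 10)
  },
  function second(n, cb) {
    cb(null, n, n * 10)
  },
  function third(a, b, cb) {
    cb(null, a + b)
  }
], function finished(err, total) {
  console.log(err, total) // null 22
})
```

Naming the functions (first, second, finished) rather than leaving them anonymous pays off in stack traces, as noted above.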

getCityTemperatures

The first function in the waterfall, getCityTemperatures, calls the weathers.co API for each city in a hardcoded array. Because it is first in line, it receives only one param, the callback (cb).

This is the most interesting function of the three because it has to do an API call for each city in the cities array, and they all are asynchronous calls. We can't return from the function until all of the city weather has been collected.

In each API call, once the current temperature is known it is plugged into the city's object in the cities array. (Note that weathers.co doesn't always return the actual current temperature. Often it is a cached value.) The technique here is to create a Promise that encompasses the API calls on line 83. This Promise represents the entire function, and it won't be resolved until all of the city weather has been collected.

A second Promise is set up in the local function getWeather on line 91, and that's the one that handles each individual city's API call. request.get() itself returns a Promise on line 92 (because we are using the request-promise-lite package), and so we make the request.get() and follow it with a then() which will run when the API call returns. The resolve() at line 96 gets us out of this inner Promise and on to the next one.

Note that we don't yet execute getWeather(), we're just defining it here. Line 109 uses the Array.map method to return an array that has three functions in it. Each function has one of the city objects passed into it, and each returns a Promise (as defined on line 91). That cityPromises array looks like

[getWeather({name: 'Miami', temperature: null}), getWeather({name: 'Atlanta', temperature: null}), getWeather({name: 'Boston', temperature: null})]

after line 109 completes.

We still haven't executed anything. The mapping in line 109 sets us up to use the Promise.all( ) method from the ES6 native Promise library in line 121, which only resolves when all of the Promises in its input array have resolved. It's a bit like the async.waterfall( ) call from line 50, but in this case order doesn't matter. If any of the Promises reject, the entire Promise.all( ) structure rejects at that moment, even if there are outstanding unresolved Promises.

Once all of the Promises have resolved (meaning that each city's weather has been recorded), the then( ) in line 122 executes, and calls the passed-in callback with the now-complete array of cities and temperatures. The null value handed to the callback sits in the position of the error object; null means that no error occurred.

Of interest here is that Promise.all( ) is, practically speaking, running the requests in parallel.
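Since the real code is only shown as an image, here is a small runnable sketch of the same map-then-Promise.all pattern. It is not the original file: getWeather uses setTimeout in place of the weathers.co call, and the temperatures are made-up values.

```javascript
// Made-up data standing in for the API responses
const cities = [
  { name: 'Miami', temperature: null },
  { name: 'Atlanta', temperature: null },
  { name: 'Boston', temperature: null }
]
const fakeTemperatures = { Miami: 88, Atlanta: 81, Boston: 67 }

// Stand-in for the per-city API call: resolves after a random delay
function getWeather(city) {
  return new Promise(function (resolve) {
    setTimeout(function () {
      city.temperature = fakeTemperatures[city.name]
      resolve(city)
    }, Math.random() * 50)
  })
}

// First function in the waterfall: receives only the callback
function getCityTemperatures(cb) {
  const cityPromises = cities.map(getWeather)
  Promise.all(cityPromises).then(
    function (results) { cb(null, results) }, // null = no error
    function (err) { cb(err) }
  )
}

getCityTemperatures(function (err, results) {
  console.log(results.map((c) => `${c.name}: ${c.temperature}`))
})
```

Even though the simulated calls finish in a random order, Promise.all hands back its results in the same order as the input array.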

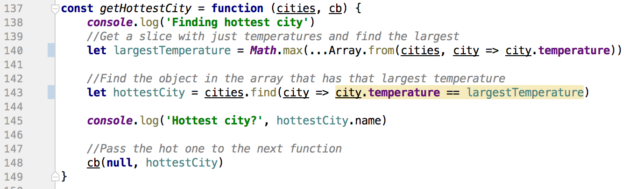

getHottestCity

The next function in the chain, getHottestCity, simply finds the largest temperature in the array of cities and returns that city's object to the next function in the waterfall. This is synchronous code and so doesn't require a Promise.

Line 140 creates a slice with just the temperatures from the cities array and calls Math.max( ) to determine the largest value. Line 143 then looks for the hottest temperature in the original cities array and returns the corresponding object. Both of these lines use the ES6 way of defining functions with fat-arrow notation (=>), and line 140 uses the new spread operator.

Once the 'hot' city has been determined it is passed to the next function in the waterfall in line 148. Here again, the null is in the place of an error object.
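A sketch of what this step might look like, reconstructed from the description rather than copied from the original file (the sample temperatures are made up):

```javascript
// Second function in the waterfall: receives the cities array and a callback.
// This is synchronous, so no Promise is needed.
function getHottestCity(cities, cb) {
  // Spread the temperatures into Math.max to find the largest value
  const hottestTemperature = Math.max(...cities.map((city) => city.temperature))
  // Find the city object that has that temperature
  const hottestCity = cities.find((city) => city.temperature === hottestTemperature)
  // null is in the place of an error object
  cb(null, hottestCity)
}

const sampleCities = [
  { name: 'Miami', temperature: 88 },
  { name: 'Atlanta', temperature: 81 },
  { name: 'Boston', temperature: 67 }
]

getHottestCity(sampleCities, function (err, city) {
  console.log(city.name) // Miami
})
```

If two cities tie for the highest temperature, find( ) returns the first one in the array.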

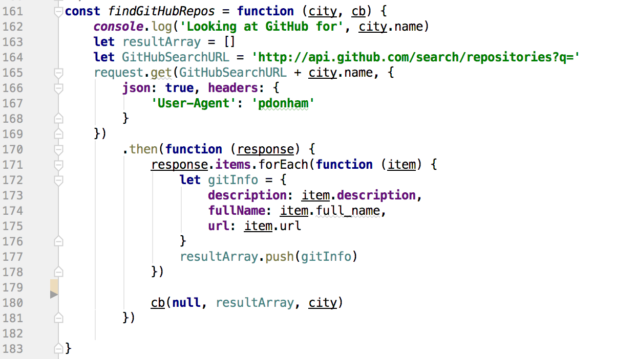

findGithubRepos

The next-to-last function in the waterfall searches GitHub for projects that include the name of the 'hot' city discovered earlier. The User-Agent header in line 167 is required by the GitHub search API -- you can change it to any string that you like.

The API call in line 165 uses request-promise (from line 29) and so returns a Promise. Once the Promise is resolved, the then( ) function builds an array of objects from some of the data returned.

In line 180 the completed array of items is passed to the next function in the waterfall along with the original 'hot' city object.
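The network call itself can't run in a snippet, but the two pieces around it can be sketched: building the request options and trimming the response down to a few fields. buildSearchRequest, summarizeRepos, the User-Agent string and the sample response are all assumptions of mine; the field names (items, full_name, html_url, stargazers_count) are ones the GitHub search API returns, though which fields the original code keeps isn't shown.

```javascript
// Build the options object for the search request (hypothetical helper)
function buildSearchRequest(city) {
  return {
    url: 'https://api.github.com/search/repositories?q=' +
      encodeURIComponent(city.name),
    // GitHub requires a User-Agent header; the value can be any string
    headers: { 'User-Agent': 'nested-async-demo' }
  }
}

// Reduce the parsed response body to an array of small objects
function summarizeRepos(body) {
  return (body.items || []).map(function (item) {
    return {
      name: item.full_name,
      url: item.html_url,
      stars: item.stargazers_count
    }
  })
}

// A made-up, heavily trimmed response for demonstration
const sampleBody = {
  total_count: 1,
  items: [{
    full_name: 'example/miami-data',
    html_url: 'https://github.com/example/miami-data',
    stargazers_count: 3
  }]
}

console.log(buildSearchRequest({ name: 'Miami' }).url)
console.log(summarizeRepos(sampleBody))
```

In the waterfall, the summarized array would then be passed to the callback together with the 'hot' city object, with null in the error position.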

The final function

The last function to execute simply takes the results of our waterfall and renders a Pug page on line 57.

Here's a link to the complete file. If you have questions or corrections, leave a comment!

Dogfooding: Defining roles in an MVC architecture with internal APIs

Here's a copy of the talk I did recently at Boston University discussing how to implement a clean MVC architecture for web apps, with a decoupled front end, using an internal API.

Abstract: The architectural design of an application often comes down to a single question: Where is the work done? Traditional client-server applications answer the question unequivocally: On the server. A new class of application, single-page (SPA), has blurred the separation of responsibility by moving data operations closer to the client. This talk discusses an approach that strictly segregates back-end models from front-end SPA views through use of an application-agnostic internal RESTful API, enhancing testability and re-use. While demonstration code will be in Javascript, the approach applies to most client-server application architectures.

Using Javascript Promises to synchronize asynchronous methods

The asynchronous, non-blocking Javascript runtime can be a real challenge for those of us who are used to writing in a synchronous style in languages such as Python or Java. Especially tough is when we need to do several inherently asynchronous things in a particular order...maybe a filter chain...in which the result of a preceding step is used in the next. The typical JS approach is to nest callbacks, but this leads to code that can be hard to maintain.

The following programs illustrate the problem and work toward a solution. In each, the leading comments describe the approach and any issues that it creates. The final solution can be used as a pattern to solve general synchronization problems in Javascript. The formatting options in the BU WordPress editor are a little limited, so you might want to cut and paste each example into your code editor for easier reading.

1. The problem

/*
If you are used to writing procedural code in a language like Python, Java, or C++,
you would expect this code to print step1, step2, step3, and so on. Because Javascript
is non-blocking, this isn't what happens at all. The HTTP requests take time to execute,
and so the JS runtime moves the call to a queue and just keeps going. Once all of the calls on the
main portion of the call stack are complete, an event loop visits each of the completed request()s
in the order they completed and executes their callbacks.

Starting demo
Finished demo
step3: UHub
step2: CNN
step1: KidPub

So what if we need to execute the requests in order, maybe to build up a result from each of them?
*/

var request = require('request');

var step1 = function (req1) {
  request(req1, function (err, resp) {
    console.log('step1: KidPub');
  });
};

var step2 = function (req2) {
  request(req2, function (err, resp) {
    console.log('step2: CNN');
  });
};

var step3 = function (req3) {
  request(req3, function (err, resp) {
    console.log('step3: UHub');
  });
};

console.log('Starting demo');
step1('http://www.kidpub.com');
step2('http://www.cnn.com');
step3('http://universalhub.com');
console.log('Finished demo');

2. Callbacks work just fine, but...

/*
This is the classic way to synchronize things in Javascript using callbacks. When each
request completes, its callback is executed. The callback is still placed in the
event queue, which is why this code prints

Starting demo
Finished demo
step1: BU
step2: CNN
step3: UHub

There's nothing inherently wrong with this approach, however it can lead to what is
called 'callback hell' or the 'pyramid of doom' when the number of synchronized items grows too large.
*/

var request = require('request');

var req1 = 'http://www.bu.edu';
var req2 = 'http://www.cnn.com';
var req3 = 'http://universalhub.com';

var step1 = function () {
  request(req1, function (err, resp) {
    console.log('step1: BU');
    request(req2, function (err, resp) {
      console.log('step2: CNN');
      request(req3, function (err, resp) {
        console.log('step3: UHub');
      })
    })
  });
};

console.log('Starting demo');
step1();
console.log('Finished demo');

3. Promises help solve the problem, but there's a gotcha to watch out for.

/*
One way to avoid callback hell is to use thenables (pronounced THEN-ables), which essentially
implement the callback in a separate function, as described in the Promises/A+ specification.
A Javascript Promise is a value that can be returned that will be filled in or completed at some
future time. Most libraries can be wrapped with a Promise interface, and many implement Promises
natively. In the code here we're using the request-promise library, which wraps the standard HTTP
request library with a Promise interface. The result is code that is much easier to read...an
event happens, THEN another event happens, THEN another and so on.

This might seem like a perfectly reasonable approach, chaining together
calls to external APIs in order to build up a final result.
The problem here is that a Promise is returned by the rp() call in each step...we are
effectively nesting Promises. The code below appears to work, since
it prints the steps on the console in the correct order. However, what's
really happening is that each rp() does NOT complete before moving on to
its console.log(). If we move the console.log() statements inside the callback for
the rp() you'll see them complete out of order, as I've done in step 2. Uncomment the
console.log() and you'll see how it starts to unravel.
*/

var rp = require('request-promise');
var Promise = require('bluebird');

var req1 = 'http://www.bu.edu';
var req2 = 'http://www.cnn.com';
var req3 = 'http://universalhub.com';

function doCalls(message) {
  return new Promise(function (resolve, reject) {
    resolve(1);
  })
    .then(function (result) {
      rp(req1, function (err, resp) {
      });
      console.log('step:', result, ' BU ');
      return ++result;
    })
    .then(function (result) {
      rp(req2, function (err, resp) {
        // console.log('step:', result, ' CNN');
      });
      console.log('step:', result, ' CNN');
      return ++result;
    })
    .then(function (result) {
      rp(req3, function (err, resp) {
      });
      console.log('step:', result, ' UHub');
      return ++result;
    })
    .then(function (result) {
      console.log('Ending demo at step ', result);
      return;
    })
}

doCalls('Starting calls')
  .then(function (resolve, reject) {
    console.log('Complete');
  })

4. Using Promise.resolve to order nested asynchronous calls

/*
Here's the final approach. In this code, each step returns a Promise to the next step, and the
steps run in the expected order, since the promise isn't resolved until
the rp() and its callback are complete. Here we're just manipulating a function variable,
'step', in each step but one can imagine a use case in which each of the calls
would be building up a final result and perhaps storing intermediate data in a db. The
result variable passed into each succeeding then is the result of the rp(), which
would be the HTML page returned by the request.

The advantages of this over the traditional callback method are that it results
in code that's easier to read, and it also simplifies a stepwise process...each step
is very cleanly about whatever that step is intended to do, and then you move
on to the next step.
*/

var rp = require('request-promise');
var Promise = require('bluebird');

var req1 = 'http://www.bu.edu';
var req2 = 'http://www.cnn.com';
var req3 = 'http://universalhub.com';

function doCalls(message) {
  var step = 0;
  console.log(message);
  return new Promise(function (resolve, reject) {
    resolve(1);
  })
    .then(function (result) {
      return Promise.resolve(
        rp(req1, function (err, resp) {
          console.log('step: ', ++step, ' BU ')
        })
      )
    })
    .then(function (result) {
      return Promise.resolve(
        rp(req2, function (err, resp) {
          console.log('step: ', ++step, ' CNN');
        })
      )
    })
    .then(function (result) {
      return Promise.resolve(
        rp(req3, function (err, resp) {
          console.log('step: ', ++step, ' UHub');
        })
      )
    })
    .then(function (result) {
      console.log('Ending demo ');
      return Promise.resolve(step);
    })
}

doCalls('Starting calls')
  .then(function (steps) {
    console.log('Completed in step ', steps);
  });
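If you'd like to see the difference between examples 3 and 4 without hitting the network, the sketch below swaps rp() for a hypothetical fakeRequest that resolves after a delay, using native Promises rather than bluebird. The only difference between the two functions is whether each then( ) returns the Promise for its request.

```javascript
// fakeRequest is a hypothetical stand-in for rp(): it records its name in
// `log` when it "completes" after delayMs milliseconds.
function fakeRequest(name, delayMs, log) {
  return new Promise(function (resolve) {
    setTimeout(function () {
      log.push(name)
      resolve(name)
    }, delayMs)
  })
}

// As in example 3: the Promise from each request is dropped, so every then()
// finishes immediately and all three requests are in flight at once.
function withoutReturn(log) {
  return Promise.resolve()
    .then(function () { fakeRequest('BU', 30, log) })
    .then(function () { fakeRequest('CNN', 20, log) })
    .then(function () { fakeRequest('UHub', 10, log) })
}

// As in example 4: each then() returns the request's Promise, so the chain
// waits for it before moving on to the next step.
function withReturn(log) {
  return Promise.resolve()
    .then(function () { return fakeRequest('BU', 30, log) })
    .then(function () { return fakeRequest('CNN', 20, log) })
    .then(function () { return fakeRequest('UHub', 10, log) })
}

const orderedLog = []
withReturn(orderedLog).then(function () {
  console.log('with return:', orderedLog) // [ 'BU', 'CNN', 'UHub' ]
})

const unorderedLog = []
withoutReturn(unorderedLog)
// Give the dropped requests time to finish, then show the order they completed in
setTimeout(function () {
  console.log('without return:', unorderedLog)
}, 200)
```

In the version without the return, completion order follows the delays rather than the order of the steps, which is the same unraveling described in example 3.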