Moths Teach Drones to Fly

Research is first to apply animal data to autonomous vehicle navigation

By Liz Sheeley

When an autonomous drone is deployed for a mission, it flies a specific, programmed route. If it meets any surprises along the way, it has a difficult time adapting because it has not been programmed to handle them.

Now, with the help of moths, researchers have developed a new paradigm that would allow a drone to fly from point A to point B without a planned route. Professor Ioannis Paschalidis (ECE, SE, BME), his team and collaborators at the University of Washington extracted information about how a particular species of moth travels through a forest and then used that data to create a new control policy for drones. Their work, published in PLoS Computational Biology, is the first published work to use data from an animal to improve autonomous drone navigation.

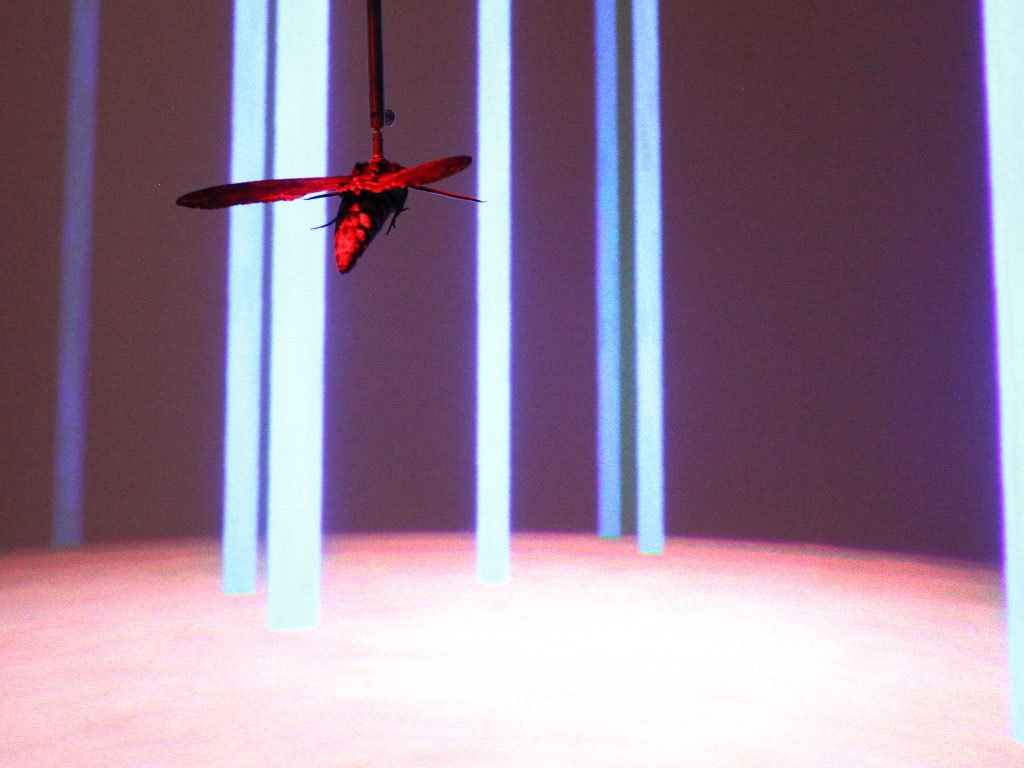

First, the researchers at the University of Washington collected data on how the moths flew around a virtual forest, measuring things like their flight trajectories, force and speed along the way. Those data were then used to infer what kind of navigation policy the moths used.

“We discovered that the moths relied heavily on what is known as optical flow,” says Paschalidis. “That is a pattern of the motion of the various objects in the environment that is caused by your own motion relative to these objects, in this case the moth’s own motion.”

That perceived motion allows the moth to react to objects appropriately: objects that appear to move fast are close, and those that appear to move slowly are farther away. This is similar to how humans react while driving; if a car suddenly appears right in front of yours, you’ll brake, but if a car is far away, even a speeding one, you don’t have to react quite as quickly.
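As a rough illustration of why nearby objects appear to move faster, the Python sketch below uses a standard geometric relation for an observer moving in a straight line past a stationary object: the apparent angular speed is the observer's speed times the sine of the bearing angle, divided by the distance. This is not the control policy from the study; the function name `apparent_angular_speed` and the example numbers are hypothetical and chosen only to make the point.

```python
import math


def apparent_angular_speed(speed, distance, bearing_deg):
    """Angular speed (rad/s) at which a stationary object appears to move
    for an observer travelling in a straight line.

    speed: observer speed in m/s
    distance: current distance to the object in m
    bearing_deg: angle between the direction of travel and the line of sight
    """
    if distance <= 0:
        raise ValueError("distance must be positive")
    return speed * math.sin(math.radians(bearing_deg)) / distance


# Hypothetical example: two trees directly to the side of a flyer moving at 2 m/s.
near_tree = apparent_angular_speed(speed=2.0, distance=1.0, bearing_deg=90.0)
far_tree = apparent_angular_speed(speed=2.0, distance=20.0, bearing_deg=90.0)

print(f"near: {near_tree:.2f} rad/s, far: {far_tree:.2f} rad/s")
# prints "near: 2.00 rad/s, far: 0.10 rad/s"
```

At the same flight speed, the tree one meter away sweeps across the field of view twenty times faster than the tree twenty meters away, which is the cue the article describes.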

Understanding how the moths fly helped the researchers develop a drone policy that was then tested on virtual drones as they flew through a virtual forest. To see how robust this policy was, the researchers had the drones fly through multiple types of environments—from a complex, heavily wooded forest to a sparse one.

Paschalidis says the most surprising element of this study is that the policy helped the drone navigate through all of the different environments.

Typically, drone control policies are optimized for a specific mission, which leaves them fragile in the face of unexpected events. This research moves one step closer to an adaptive, self-aware policy that would let an autonomous vehicle fly in a dynamic, complex and unknown environment.

The work is part of a $7.5 million Multidisciplinary University Research Initiative (MURI) grant awarded by the Department of Defense for developing neuro-inspired autonomous robots for land, sea and air.

Paschalidis says that collaborators from other universities will help gather data from other animals, such as mice and ants, to develop more robust autonomous vehicle navigation policies.

Originally published on BU College of Engineering News on February 3, 2020.