Caffeine: The Good, The Bad, and The Ugly

Fatigue comes in all shapes and sizes, and one of its most familiar forms is the college student. Staring menacingly at the computer, eyes fixed on making sure the final paper meets the suggested word count, the college student desperately tries to resist the addictive pull of distractions. Yet as the night sky brightens with the rising sun, the college student’s attention drifts further and further from the task at hand toward preparing the pick-me-up of choice: caffeine. With only minutes before the first morning class, the college student faces the harsh reality of selecting his weapon. Will he run across the street, braving the brutal winds to grab a caffe mocha with a double shot of espresso, or play it conservative and go with a 5-Hour Energy shot or a name-brand energy drink?

Soda, coffee, and energy drinks are the three main drinks that come to mind when thinking about caffeine. Beyond these drinks, however, caffeine has become increasingly prevalent in foods across multiple food groups. While most people accept the negative attention these beverages receive, caffeine is a three-headed monster with both positive and negative effects. So the real question is: do you want the good news or the bad news first?

Starting with the good: caffeine can boost your short-term memory and alertness while also altering your overall mood. The caffeine in one cup of coffee can stimulate the central nervous system as it simultaneously lowers blood sugar, creating a temporary lift. Research published in the Journal of Sports Medicine showed that “caffeine taken two hours before exercise enhanced the performance of athletes in marathon running.” Another study, published in the Journal of the American Medical Association, indicated that “people who drink coffee on a regular basis have up to 80% lower risks of developing Parkinson’s disease.”

On the other hand, caffeine does have a dark side. Caffeinated foods can contribute to a person’s struggle with weight gain or hunger. The stimulant is known to increase appetite, cortisol levels, and insulin levels. Any of these factors may combine with caffeine-induced stress to undermine dieters’ results, and because caffeine is a natural diuretic, it can also lead to water retention. Caffeinism, as excessive caffeine consumption is often called, can come in waves of migraine headaches and sickness, which in turn can cause nervousness and a rapid heartbeat. So does this mean that you shouldn’t have a cup of coffee in the morning? My answer is no.

Ah, coffee, such a misunderstood luxury. Coffee, along with the caffeine in its beans, has been shown to be a leading source of both brain and body health benefits; in fact, coffee is the average American’s number one source of antioxidants. Regular coffee consumption has also been shown to dramatically reduce the risk of neurological conditions including Alzheimer’s disease and dementia. However, coffee is often looked down upon as if it had the same effects as alcohol. The real problem lies not with the coffee, but with all the other unhealthy ingredients it can be mixed with. For example, which sounds healthier: a straight shot of espresso or a cream-based Cinnamon Dulce White Mocha Frappuccino? Compare the carb, chemical, and fat content of the second with the purity of the first, and the controversy over coffee becomes plain and simple.

In essence, caffeine must be taken in moderation. While caffeine has both positive and negative extremes, balanced consumption, whether through artificial drinks or coffee in its purest form, seems to be just fine, especially for college students.

Caffeine – K. Cossaboon

Foods Containing Caffeine – Ella Rain

Brain Healthy Foods – Brain Ready

Moral Code

Why is it wrong to kill babies? Why is it wrong to take advantage of people with intellectual disabilities? To lie with the intention of cheating someone? To steal, especially from poor people? Is it possible that Medieval European society was wrong to burn women suspected of witchcraft? Or did they save mankind from impending doom by doing so? Is it wrong to kick rocks when you’re in a bad mood?

Questions of right and wrong, such as these, have for millennia been answered by religious authorities who refer to the Bible for guidance. While the vast majority of people still turn to Abrahamic religious texts for moral guidance, there are other options for developing a moral code. Bibles aside, we can use our “natural” sense of what’s right and wrong to guide our actions; a code based on this natural sense would come from empirical studies of what most people consider to be right or wrong. Ignoring the logistics of creating such a code, we should note that its rules would have no reasoning behind them other than “we should do this because this is what comes naturally.” How does that sound? Pretty stupid.

The other option is to develop a moral code based on some subjective metaphysical ideas, heavily backed by empirical facts. “Subjective” means these ideas won’t be undeniable; they are what they are and that’s it. Take, for example, the rule “we should not kill babies.” There is no objective, scientific reason why we shouldn’t kill babies. Wait, you say: killing babies is wrong because it harms the proliferation of our species and inflicts pain on the mothers and the babies themselves! But why should we care about the proliferation of our species? About hurting some mother or her baby? While no one will deny that we should care about these, there is nothing scientific that will explain why. Science may give us a neurological reason why we care about species proliferation (it will go something like, “there is a brain region that makes us care about the proliferation of our species”), but why should we be limited to what our brains tend to make us think or do?

Subjective rules like these must therefore be agreed upon with the understanding that they are subject to change. Some argue that science can answer moral questions because it can show us what “well-being” is, how we can achieve it, and so on. But a scientific reason why we should care about well-being is nowhere to be found. The result is that we can use science to answer moral questions, but only after we first agree (subjectively) that we want well-being. Science by itself cannot answer moral questions because it shows us what is rather than what ought to be. (Sam Harris stands out among those who argue that science can be an authority on moral issues; his technical faux pas is an embarrassment to those who advocate “reason” in conduct.)

Back to the idea of metaphysically constructed moral codes. What properties should such a code have, and how should we go about synthesizing it? Having one fixed, rigid source as an authority for moral guidance is dangerous. Make no mistake: there must be some authority on moral questions, but it must be flexible and adaptable; it must be able to stand the test of time on the one hand and adjust to novel conditions on the other. This sounds a lot like the U.S. Constitution. But even with a document like the Constitution, which has provided unity and civil progress since the country’s founding, some take its words literally and allow no further interpretation; if it’s not written in the Constitution, it can’t be in the law, they argue (see Strict Constructionism versus Judicial Activism). These folks also tend to be rather religious (read: they spend a lot of time listening to stories from the Bible; not to be confused with “spiritual” or with followers of religions other than the Abrahamic ones). So while we must have a moral code, it must be flexible (i.e., change with time), and we must seek a balance between literal and imaginative interpretations, just as we do with the U.S. Constitution.

Why and how is a rigid moral authority dangerous? Our authority must change with time because new developments in our understanding of the world must update how we interact with others. For example, if science finds tomorrow that most animals have a brain region that allows them to feel emotional pain the same way humans do, we will have to treat them with more empathy; indeed, research on dolphin cognition has recently led scientists to push for dolphins to be considered and treated as nonhuman persons. Furthermore, if we don’t explain why we follow certain rules, we won’t understand them and therefore won’t know why violating them is bad. This unquestionability, whether of God as moral authority or of the Strict Constructionists as lawmakers, is what makes them particularly dangerous and leads to prejudice and ignorance. Our moral code must therefore be based on empirical research, with every rule subject to intense scrutiny (think of two-year-olds who keep asking, “but why?”).

But why should we have a moral code in the first place? Perhaps if everyone followed a moral code of some sort, the world would have fewer injustices and atrocities. Getting people to follow a moral code of any kind is a completely different issue.

Nonhuman Personhood for Dolphins

Are you and your significant other meant to be?

Well, no one truly knows the answer to that question until they're looking back on their life and reminiscing about the time they spent with their partner. However, a new theory suggests that subtle features of language style can predict compatibility between two people. This applies to speech as well as personal writing, from Facebook chats to essays.

Researchers have postulated that the use of common words called "function words", such as 'me', 'a', 'and', and 'but', along with other prepositions, pronouns, adverbs, and so on, can at least estimate the compatibility of a couple. The researchers devised an equation using these basic function words to measure "language style matching" (LSM). A higher LSM means more closely matched writing styles and, ergo, a more compatible couple.
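
The post doesn't spell out the equation. As we understand the measure described in the researchers' Psychological Science paper (listed in the sources below), each function-word category gets a score of 1 − |p1 − p2| / (p1 + p2 + 0.0001). Here p1 and p2 are the percentages of each person's words that fall in that category, and the category scores are then averaged. The sketch below assumes that form. Its word lists are hypothetical, tiny stand-ins for the much larger dictionaries the researchers used, so it will not reproduce the online generator's numbers.

```python
import re

# HYPOTHETICAL, deliberately tiny word lists for illustration only; the
# researchers used far larger dictionaries of function-word categories,
# so scores from this sketch will not match their tool.
CATEGORIES = {
    "personal_pronouns": {"i", "me", "my", "you", "we", "he", "she", "they"},
    "articles": {"a", "an", "the"},
    "conjunctions": {"and", "but", "or", "so"},
    "prepositions": {"in", "on", "at", "to", "of", "with", "for"},
}


def category_percentages(text: str) -> dict[str, float]:
    """Return the percentage of words in `text` that fall in each category."""
    words = re.findall(r"[a-z']+", text.lower())
    total = len(words) or 1  # avoid dividing by zero on empty text
    return {
        cat: 100 * sum(w in vocab for w in words) / total
        for cat, vocab in CATEGORIES.items()
    }


def lsm(text1: str, text2: str) -> float:
    """Average per-category style match; 1.0 means identical usage rates."""
    p1 = category_percentages(text1)
    p2 = category_percentages(text2)
    scores = [
        # The 0.0001 keeps the denominator nonzero when neither text
        # uses the category at all.
        1 - abs(p1[c] - p2[c]) / (p1[c] + p2[c] + 0.0001)
        for c in CATEGORIES
    ]
    return sum(scores) / len(scores)


if __name__ == "__main__":
    a = "I think we should meet at the cafe and talk."
    b = "You said the plan was to go with them, but I forgot."
    print(round(lsm(a, b), 3))
```

Because each category score falls between 0 and 1, so does the average. Comparing a text with itself gives exactly 1.0, and two texts that lean on completely different function words score close to 0 in those categories.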

A study that analyzed couples' online chats over the course of ten days lent support to this theory. According to an article about this study in The Daily Telegraph, "almost 80 percent of the couples whose writing style matched were still dating three months later, compared with approximately 54 percent of the couples who did not match as well."

The research team has created an online LSM generator, where you can paste in writing samples ranging from IM chats to poetry. It isn't solely for determining compatibility in a relationship; you can also compare the writing styles of strangers, friends, or even two of your own pieces. I've tried it and found it intriguing, at the very least. In no way would I assert that this is a completely accurate way to determine personality similarity, but it seems to me to have some logic to it and is not as absurd as I had originally expected.

Language Style Matching Predicts Relationship Initiation and Stability- Association for Psychological Science

Scientists find true language of love - The Telegraph

The Tell-Tale Brain from the neurObama

I began writing this post with feelings of guilt and inner turmoil because the article came out just one week too late: apparently V.S. Ramachandran was scheduled to speak about his new book The Tell-Tale Brain: A Neuroscientist’s Quest for What Makes Us Human at the Harvard Book Store in Cambridge on February 2nd. If you haven't heard this man's name thrown around in any of your neuroscience classes, you have most definitely been asleep. As an engaged and involved neuro-nerd, I felt like a huge ass not only for missing this event, but also for not alerting my fellow blog nerds! But the reality of Boston weather and global climate change led to the event being canceled. Upon inquiry I was told that they are trying to reschedule the talk, which would be wonderful, and I will be sure to give a shout-out to the internet crowd if I hear about a new date to see this incredibly influential man in our area.

The main point, though, is not the event, but the brand-new book by this professor/author/neurologist, Vilayanur S. Ramachandran. Currently a professor in the Psychology Department and Neurosciences Program at the University of California, San Diego, he has captured the attention of the neuroscience world for many years and, perhaps even more importantly, the attention of those outside the field. His research on oddities such as phantom limbs and synesthesia has vastly contributed to our understanding of the normal and abnormal brain. His previous books, Phantoms in the Brain: Probing the Mysteries of the Human Mind and A Brief Tour of Human Consciousness: From Impostor Poodles to Purple Numbers, are user-friendly guides to the mind and brain and, together with his research, have made Ramachandran a neuro-celebrity.

His new work The Tell-Tale Brain is a 357-page sweep through the structure of the brain, what can happen when it goes wrong, and why we are the way we are when it goes right. He presents the human brain "anatomically, evolutionarily, psychologically, and philosophically" to discover what about our brains creates the human experience. He describes this approach in the epilogue of The Tell-Tale Brain: "One of the major themes in the book - whether talking about body image, mirror neurons, language evolution, or autism - has been the question of how your inner self interacts with the world (including the social world) while at the same time maintaining its privacy. The curious reciprocity between self and others is especially well developed in humans and probably exists only in rudimentary form in the great apes. I have suggested that many types of mental illness may result from derangements in this equilibrium. Understanding such disorders may pave the way not only for solving the abstract (or should I say philosophical) problem of the self at a theoretical level, but also for treating mental illness." This richly multifaceted approach sounds ambitious, but reviews say Ramachandran's book is still a comprehensive and satisfying read.

In The Tell-Tale Brain, Ramachandran elaborates on his older work on synesthesia and phantom limbs with new research. With additional empirical research and case studies, he builds the story of the cognitive and physical processes behind Capgras syndrome, in which your own mother or poodle becomes an impostor; Cotard's syndrome, in which you believe that you are dead; and many other rarities. But along with the oddities, he also contemplates the evolutionary significance of our normal everyday actions. For example, he offers the "peekaboo principle" as a potential explanation for our seemingly universal attraction to puzzles, concealment, and partial nudity. I will let you read into that one on your own. He also relates our desire to color-match clothing and accessories to "the experiences of our ancestors when they spotted a lion in the undergrowth by realizing that those yellow patches in between the leaves are parts of a single dangerous object." Speculation such as this leaves some skeptical.

The main thesis of this piece, though, seems to be the infamous mirror neuron and its astronomical influence on human evolution. He believes mirror neurons may be the key to the emergence of culture and language and, essentially, of the distinctive human experience. As if the mirror neuron hype weren't wild enough, it is about to be taken to a whole different level.

The New York Times Book Review states that some readers may lose track of what is firmly established in research and literature, and what is tentative speculation. This worries me, especially if Ramachandran is aiming at a generally less informed audience. It is easy for something that is an "interesting idea" to turn into a cultural fact if it is passed around and exaggerated enough by people who are not prepared to look to the research - or lack thereof.

I have yet to read this book, but I thought it important to give everyone a heads-up that this book will be around: you will hear reviews tossed around amongst your classmates, in Paul Lipton's office, and, if Ramachandran is really making neuroscience as accessible as he hopes, from random people on the T. I personally hope that the book does not rely too much on the "shocking" stories we have all come to know, as I feel that some major topics in neuroscience can be turned into gimmicks. I have already heard completely uninformed hipsters try to act cool by spouting entirely incorrect information about mirror neurons to "blow the minds" of their friends, so I hope that Ramachandran's postulations do not add to the vortex of obnoxious overconfidence among the only partially informed.

If any of you have read this new book please leave a review/comment and share your take with us!

Book Review- The Tell-Tale Brain- By V.S. Ramachandran - NYTimes.com

The Tell-Tale Brain: A Neuroscientist's Quest for What Makes Us Human - BrainPickings.org

Vilayanur S. Ramachandran - Wikipedia.org

Just Keep Swimming…

Finding Nemo's Marlin

In Disney/Pixar's "Finding Nemo," Marlin and Dory are swimming through murky waters en route to Sydney Harbor. Marlin suddenly exclaims, "Wait, I have definitely seen this floating speck before. That means we've passed it before and that means we're going in circles and that means we're not going straight!" - and he is probably right.

Is it really possible that when we cannot see where we are going, we actually travel in circles? Souman et al. tested this belief through a variety of experiments. They found in every case that, when deprived of a reliable visual reference, people could not travel in a straight line.

The first set of experiments had participants walk through a forest without any visual impediments (such as blindfolds). One group walked through the forest when it was cloudy, the other when it was sunny. Everyone in the cloudy group walked in circles, crossing their own earlier paths without noticing. In contrast, all of the subjects who could see the sun maintained a relatively straight course with no circles.

The experiment was also performed on blindfolded subjects in an open field.

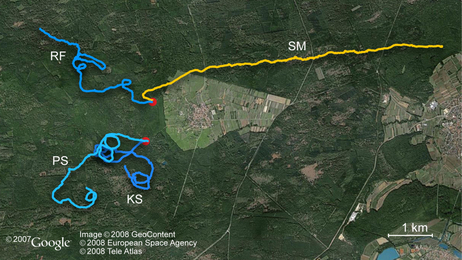

Paths of Blindfolded Subjects

The blue paths correspond to the subjects who walked on cloudy days. Their paths are mostly curved, with many circles. The short straight stretches are most likely caused by the trial setup: participants walked for a period of time, then had their blindfolds removed and walked to the starting point of the next walking block. Even so, the lack of a visual stimulus while blindfolded always resulted in walking in curves or circles. This contrasts with the yellow path: this subject walked on a sunny day and maintained a straight course for a long distance.

What causes this strange phenomenon? Could subtle differences in leg length introduce a bias toward one direction, thus accounting for the circular motion? Nope: the direction of the circles was still random. Even adding a thicker sole to one shoe, creating a more-than-subtle difference in leg length, made no difference; the participants continued to walk in random circles.

Perhaps the explanation is that vision is so necessary in our daily lives that our movements become randomized without it. A similar idea appears in studies in which subjects are kept in a room with constant lighting: without night and day inputs, their biological clocks become completely randomized. More studies are needed to truly understand the importance of the visual system. Since we rely so heavily on vision, is it natural for movements to become randomized without it? Do those who are blind from birth experience the same walking-in-circles phenomenon? For now, the conclusion is that the sensory systems are complex and there is still much work to be done in understanding this strange phenomenon. So, if you ever find yourself lost in murky Australian waters, you probably should not just keep swimming, but rather ask a friendly passing whale for directions.

A Mystery: Why Can't We Walk Straight? : Krulwich Wonders... - NPR

Walking Straight into Circles - Current Biology

Memory 101: Understanding How We Remember

Do you ever wonder how you are able to remember the name of your third-grade teacher, the skills you use to ride a bike, or even lines from your favorite movie? Well, if you haven't, then you should, because it takes many regions of the brain working together to combine all the different aspects of one memory into a cohesive unit.

The first step in this complex process deals with our perceptions and senses. Think about the last time you visited the beach. Recall the sound of the wind and birds, the sight of the sun and ocean, the smell of the salt water and the feeling of the hot sand and shells underfoot. Your brain merges all of these different perceptions together, crafting them into the "memory" that we are able to recall.

All of these separate sensations travel to the part of our brain called the hippocampus. Along with the frontal cortex, the hippocampus plays a huge part in our memory system. These two regions decide what is worth remembering and then store this information throughout the brain.

Perception starts the processes leading up to encoding and storage, which take place at the brain's synapses (the tiny gaps between neurons). Across these synapses, neurons transmit information to one another electrically and chemically. When an electrical pulse reaches the end of a neuron, it triggers the release of chemical messengers called neurotransmitters into the gap.

Here is a clear view of communication between neurons through the releasing of neurotransmitters over the synapse.

From there, the spread of information begins. The neurotransmitters diffuse across the gap and attach to receptors on neighboring cells, and each neuron can form thousands of such links. All of these cells process and organize the information as a network. Related pieces of information are connected and constantly reorganized as the brain processes more and more.

Changes are reinforced with use. So let's say you are learning to play a sport. The more you practice, the stronger the rewiring and connections will become, thus allowing the brain to do less work as the initiation of pulses becomes easier with repetitive firing. This is how you get better at a certain task and are able to perform at a higher level without making as many mistakes. But again, because our brain never stops the process of input and output, practice needs to be constant in order to promote strong information retention.

Knowing all of this, it probably comes as no surprise that the most basic way to ensure proper memory encoding is to pay close attention to what you are doing. We are exposed to thousands of things in very short amounts of time, so most of them are ignored. If we pay more attention to select, specific bits of information, we'll have a better chance of remembering them (try it out for yourself in lecture).

Now that we have covered the process itself, let's look in greater detail at the types of memory we have and how they differ. There are three basic memory types that act as a filtering system for what we find important, based on what we need to know and for how long we need to know it.

The first is sensory memory, which is essentially ultra-short-term memory. It is based on input from the five senses and usually lasts only a few seconds. An example would be glancing at a passing car and remembering its color from that split-second intake. The impression lingers only vaguely and is forgotten almost instantly.

Short-term memory is the next category. People sometimes refer to it as "the brain's Post-it note." It can retain around seven items of information for less than a minute. Examples include telephone numbers or even a sentence that you quickly glance over (such as this one): you have to remember what was said at the beginning to understand the context. Likewise, numbers are usually better remembered, and stay in the brain longer, when split up (800-493-2751 instead of 8004932751, for instance).

Repetition and conscious effort to retain information lead to the transformation of short-term memory into long-term memory. By rehearsing information without interference or disturbances, one is better able to remember things and ingrain them into his/her brain. This is a gradual process, but it shows why studying is important! Unlike the other two memory categories, long-term memory has the ability to retain unlimited amounts of information for a seemingly indefinite amount of time.

This diagram shows a more complex view of the major memory types and their subdivisions.

A piece of information must pass through both sensory and short-term memory to be successfully encoded in long-term memory. Failure to do so generally leads to the phenomenon known as "forgetting," something that, ironically enough, many of us know all too well!

To give a common example of long-term encoding and memory retrieval, consider trying to recall where you put your keys. First, you must register where you are putting them, paying attention as you put them down so that you can remember later. Accomplishing all of this helps a memory to be stored, retained, and ready for retrieval when necessary.

Forgetting may involve distraction, or simply a failure to properly retrieve a memory. That being said, it should be noted that there is no predisposition to having a "good" or a "bad" memory. Most people remember certain things (numbers, procedures, and mechanisms, for example) better than others (names, phrases, or even entire plays), or vice versa. It all depends on where you focus your interests and your attention.

Hopefully, you will be able to remember some of this so that you can use your understanding of the complexities of the brain and memory encoding to your advantage. After all, your brain does all the hard work for you! Now you just need to pay attention and focus on what you find important and what you want to remember to best suit your own needs.

How Human Memory Works - Discovery Health

Types of Memory - The Human Memory

How Does Human Memory Work? - USATODAY.com

Zombies, brains, and media, oh my!

Zombies attack! Well, the media, anyway. From movies and television shows (this past Sunday on Glee!) to books and conventions, zombies are taking over. Last year, “Seattle, the self-proclaimed zombie capital of the world, was host to ZomBcon, the first ever Zombie Culture Convention, over Halloween weekend at the Seattle Center Exhibition Hall.”

A “symbolic tribute” to zombie film director George A. Romero, the event allowed fans to gather over three days to “celebrate all things zombie.” The creator, Ryan Reiter, also organized a Red, White & Dead Zombie Walk for Independence Day, which set the Guinness World Record for “largest gathering of zombies” with 4,200. (New BU initiative: beat this Guinness World Record?)

Back to zombies, though: on April 13th, 2009, Dr. Steven Schlozman, a Harvard psychiatrist, went to the Coolidge Corner Theater (close by in Brookline!) to discuss and answer questions about the neurobiology of zombies before a screening of Night of the Living Dead.

Having studied zombie films and literature, as well as consulting with the director Romero noted above, Dr. Schlozman is well versed in zombie-ology. Amazingly, the enthusiastic response from the moviegoers inspired him to go viral and, according to Rebecca Jacobson on PBS, “take the lecture nationwide.”

Ira Flatow of National Public Radio interviewed him in October 2009. In that interview, Dr. Schlozman says his fake research aids attempts to “see if there could be…a vaccine to protect us from … zombies, just in case.” Later, when a caller asked about the different kinds of zombies portrayed in movies, he distinguished between the typical, slow Romero-type zombie and the fast, more sophisticated “28 Days Later” type that can exhibit hunting and other pack behaviors: “neurobiologically speaking, they got to be different.”

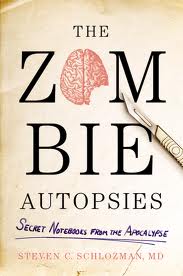

He has also written a popular science book, The Zombie Autopsies: Secret Notebooks from the Apocalypse. With 208 pages of zombie-brain-apocalyptic fun, the book will be released March 25th, 2011, for as little as $11.25 on Amazon. (Check out his zombieautopsies Twitter account for more on the book and his theories!)

Rebecca Jacobson notes that Dr. Schlozman uses a straightforward, fun teaching approach to help his students apply neuroscience to new situations. Dr. Schlozman believes adults “learn better when they can apply new knowledge to something familiar, before going on to tackle more complex neuropsychiatric cases.”

Teaching science in new ways is very valuable to Doron Weber of the Sloan Foundation, which “has promoted filmmakers writing screenplays and making movies with science themes.” He says, "science and technology are woven into the fabric of our lives, but people all too often see it as something exotic or unusual. What we are doing here is bringing the real person, a scientist, so people can see that science is a very human activity."

The Coolidge Corner Theater brings science to the public through its Science on Screen events, with tickets only $7.75 for students. This February 21st, the film Death in Venice will be screened following a discussion with Nancy Etcoff, a psychologist on the faculty at Harvard Medical School and author of Survival of the Prettiest: The Science of Beauty. Just a month later, on March 21st, Transcendent Man will be screened and discussed with both Ray Kurzweil, “one of the world’s leading futurists,” and director Barry Ptolemy. Go to http://www.coolidge.org/science for more information!

Back to brains though! In Dr. Scholzman’s fictional, futuristic papers on the neurobiology of zombies, he writes that zombies suffer from ANSDS (Ataxic Neurodegenerative Satiety Deficiency Syndrome). He strives to explain the hunger, rage, movement, and so forth of zombies. Interestingly, he says that zombies can only be fueled by rage, like crocodiles, and hence, have highly active amygdalae. Because of this, he originally named their syndrome RAH (Reptilian Aggression Hunger Syndrome), but that was before the fictional International  Classification of Disease decided to change it to ANSDS in “2012”… find out more, including his theory on how zombies always eat but never..you know excrete, here!

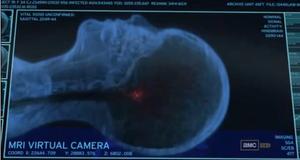

While Dr. Scholzman is not the only person to try to explain the neuroscience of zombies, he does it very well and has been received enthusiastically by many. Interestingly, the new hit TV series The Walking Dead also attempts to describe what happens to the brain after infection and through resurrection. Watch this YouTube clip to see Dr. Edwin Jenner use an MRI virtual camera to show the other characters what he has learned about zombies! How does his explanation line up with Dr. Scholzman’s? What’s your theory?

Sources:

A Head-Shrinker Studies The Zombie Brain – Ira Flatow, National Public Radio

ZomBcon 2010: Seattle Hosts First Ever Zombie Convention – Timothy Lemke, Yahoo! Games, Plugged in

A Harvard Psychiatrist Explains Zombie Neurobiology – Mark Strauss, io9 forum

What Zombies Can Teach Us About Brains – Rebecca Jacobson, PBS

Silver Screen Scientists Unleashed – Dan Vergano, USA Today

Reality Television's Pica Craze

TLC’s latest tactic to garner higher ratings seems to be exploiting people who suffer from pica, a disorder in which people feel compelled to eat things that are not food.

See a video here: Kesha has an "addiction" to eating toilet paper!

“My Strange Addiction” features a number of people with obsessions ranging from odd to dangerous, and a good third of the participants this season are clearly, to anyone who has taken an Abnormal Psychology class, suffering from pica. So far this season, people have consumed toilet paper, household cleanser, detergent and soap, couch cushions, and glass. On a recent episode, a man even swallowed bullets.

Pica is a serious condition. It can lead to the abnormal ingestion of dangerous chemicals, nutritional deficiencies, blockages in the digestive tract, tears in the intestines, and infections.

One featured addict, Crystal, ingested the household cleaner Comet for 30 years. After an inevitable trip to the dentist, she was informed that the majority of her teeth had eroded, even at the root. Crystal’s dentist said her condition was the equivalent of a “meth mouth.” Restoring her teeth took months of dental surgery costing over $20,000.

Pica typically occurs in childhood and usually lasts only a few months, but the people featured on this show are adults, and they clearly need help. The producers of “My Strange Addiction” claim to help participants seek treatment. Every participant is encouraged to attend intensive therapy because behavioral counseling is the most widely used treatment for this incurable disorder.

Staying in therapy after filming ends is not obligatory. At the end of Episode 3, Crystal’s chances of remaining in therapy seemed slim. Her disorder stems from a difficult childhood that began with sexual abuse. Her problem needs serious attention and should not be featured as some sort of novelty on a reality TV show.

If the producers of “My Strange Addiction” worked to ensure that the sufferers on the show committed themselves to a therapy plan, a show of this nature might be acceptable. As the program is currently set up, however, those involved in its production are no longer simply innocent bystanders. There may be no law saying that you must help someone you see in trouble, but this is not a legal issue; it’s a moral one. The creators of this program are morally obligated to help these ill individuals instead of solely using them to make money.

Works Cited:

My Strange Addiction Focuses on Unusual Obsessive Behavior - ABC News

Mental Health and Pica - WebMD

Dentist Helps Metro Detroit Woman Addicted to Comet Cleaner - Fox

A Real Life Terminator?

In the 1984 film The Terminator, an artificially intelligent machine is sent back in time from 2029 to 1984 to exterminate a woman named Sarah Connor. The Terminator had not only a metal skeleton but also an external layer of living tissue, and was thus deemed a cyborg, a being with both biological and artificial parts. In 1984, no such cyborgs existed in the real world. Fourteen years later, however, that would change.

Kevin Warwick is a Professor of Cybernetics at the University of Reading in England, and in 1998 he became the world’s first cyborg. Under only local anesthetic, a small silicon chip transponder was implanted in his forearm. The chip transmitted a unique signal that allowed computers to track him throughout his workplace, and with a clench of his fist, he was able to turn lights on and off, as well as operate doors, heaters, and computers.

To take the experiment to the next level, Warwick received another implant in 2002: a one-hundred-electrode array implanted into the median nerve fibers of his left forearm. With this implant, he was able to control electric wheelchairs and a mechanical arm. The neural signals used to control the arm were detailed enough that the mechanical arm was able to mimic Warwick’s arm perfectly. While visiting Columbia University in New York, Warwick was even able to control the mechanical arm from overseas and receive sensory feedback transmitted from the arm’s fingertips (the electrode array could also be used for stimulation).

Although Warwick’s work could profoundly affect medicine through its potential to aid people with nervous system damage, it has been considered quite controversial. After his first implant, Warwick announced that his enhancement made him a cyborg. However, critics have asked, “When does a cyborg become a robot?” If these types of implants become more common, how would the public feel about these “enhanced” individuals? In the future, such implants could be used for anything from carrying a travel visa to storing our medical records, blood type, and allergies in case of medical emergencies. Warwick is proud of his work because he is pioneering ways to integrate humans with computerized systems, but he has his own concerns as well. In one interview, he said it is a realistic possibility that humans will one day create artificial beings so intelligent that we won’t be able to turn them off. Will cybernetic research ever take us that far? We will just have to wait and see.

For more information on the work of Kevin Warwick, visit his website.

Neuro_________

Neuroscience has transformed itself into a highly interdisciplinary science rooted in biology that integrates psychology, chemistry, physics, computer science, philosophy, math, engineering, and just about every other type of science that we know of. Botany? Synthesis of new drugs. Oceanography? Animal models. I’m sure there must be some science that neuroscience hasn’t touched, but I can’t think of one.

Since its inception, neuroscience has gotten its grubby little fingers all over the science community. Now, it’s not clear whether the recent flood into even more fields is the result of ambitious forward thinkers or crafty businessmen, but either way, “neuro” as a prefix is popping up everywhere. While some of these up-and-coming fields show promise or, at least, raise interesting questions, others are less convincing…

Neuromarketing: Studies consumers’ sensorimotor, cognitive, and affective response to marketing stimuli

How: fMRI, EEG, eye tracking and GSR (Galvanic Skin Response)

Why: Provides information on what a consumer reacts to. (For example, is it the color, sound, or feel of a product that drew you to it?)

Does it hold water? The neuromarketing firms say so, but there’s minimal peer-reviewed published data to support their claims

Neuroeconomics: Studies decision making under risk and uncertainty, inter-temporal choices (decisions that have costs and benefits over time), and social decision making

How: fMRI, PET, EEG, MEG, recordings of ERP and neurotransmitter concentrations, in addition to behavioral data over various design parameters

Why: Some facets of economic behaviors are not completely explained by mainstream economics (expected utility, rational agents) or behavioral economics (heuristics, framing)

Does it hold water? The field has emerged as a respectable one, with centers for neuroeconomic study at many highly regarded universities

Neurolaw: Integrates neuroscientific data with the proceedings of the legal system

How: MRI, fMRI, PET

Why: Supporters believe reliable neuroscientific data can elucidate the truth behind human actions

Does it hold water? TBD. Lie detectors and predictive measures are not yet refined enough to support any real claims. However, neurological evidence already plays a role in the insanity defense, in which a defendant can claim that a neurological illness robbed him or her of the capacity to control his or her behavior; a successful insanity defense can clear a defendant of criminal liability. In addition, the ethics of sentencing and lawmaking based on neuroscientific data fall in a grey area.

Neuropolitics: Studies the neurological effects of political messages as well as the characteristics of political people

How: fMRI, GSR

Why: To assess the physical effects of political messages. Also, are liberals and conservatives mechanically different?

Does it hold water? Probably not, but everyone likes a little neuro-babble.

I’m sure this is only the beginning of the neuro______ revolution.

Brain Scans as Mind Readers? Don't Believe the Hype. - Wired.com

The Brain on the Stand - NYTimes.com

Why is every neuropundit such a raging liberal? - Slate.com

Neuroeconomics - Wikipedia

Neuromarketing - Wikipedia