Research

Systems for Secure Computation

Research in the cryptography community has shown that any function can be securely computed by a group of participants in a distributed fashion such that each party learns the function’s output and nothing more. This fascinating idea started as a theoretical curiosity in the 1980s but since then it has evolved into a useful tool to realize use cases like the gender wage gap study in the Boston area. A series of recent theoretical advances in the field have further supported the belief that provably secure computation can perhaps become as practical and ubiquitous as public-key cryptography. Some of these advances include efficient multi-party computation protocols, fully homomorphic encryption schemes, zero-knowledge proofs, and oblivious RAM. Supporting end-to-end secure computation with practical performance requires rethinking the entire systems stack: from the programming abstractions all the way down to the hardware. Below are two recent results of our research in this area.

Secure relational analytics

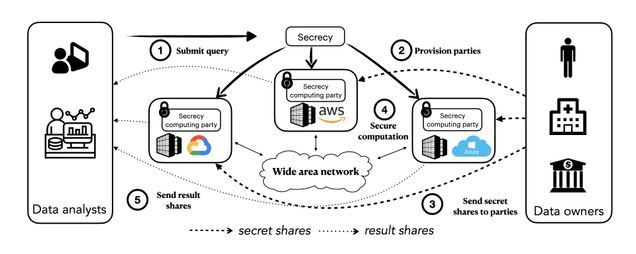

SECRECY (NSDI’23) is a novel system for privacy-preserving collaborative analytics as a service. SECRECY allows multiple data holders to contribute their data towards a joint analysis in the cloud, while keeping the data siloed even from the cloud providers. At the same time, it enables cloud providers to offer their services to clients who would have otherwise refused to perform a computation altogether or insisted that it be done on private infrastructure. SECRECY ensures no information leakage and provides provable security guarantees by employing cryptographically secure Multi-Party Computation (MPC). SECRECY’s core novelty is a generic cost-based optimization framework for relational MPC that does not rely on trusted infrastructure.

Read our paper for more details.

Secure time series analytics

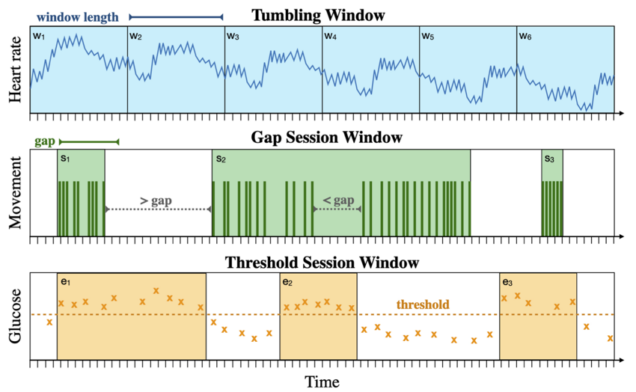

TVA (Security’23) is a novel system for secure and expressive time series analysis under MPC. Similarly to SECRECY, TVA protects data by distributing trust and generalizes the functionality of prior systems without compromising security or performance. To mitigate the tension between these two, TVA employs new protocols, cross-layer optimizations, and vectorized primitives that enable it to scale to large datasets with modest use of resources. TVA is the first system to support arbitrary composition of oblivious time window operators, keyed aggregations, and multiple filter predicates, while keeping all data attributes private, including record timestamps and user-defined values in query predicates.

Read our paper for more details.

Self-Managed Stream Processing Systems

From online recommendations to continuous monitoring of large data centers, IoT, and controlling autonomous cars, stream processing today lies in the heart of the modern data analytics stack and has been integrated into the products of large internet companies and Cloud providers. Yet, the complexity and cost of deploying and configuring streaming pipelines hinder the adoption of stream processing technology by scientists and smaller organizations.

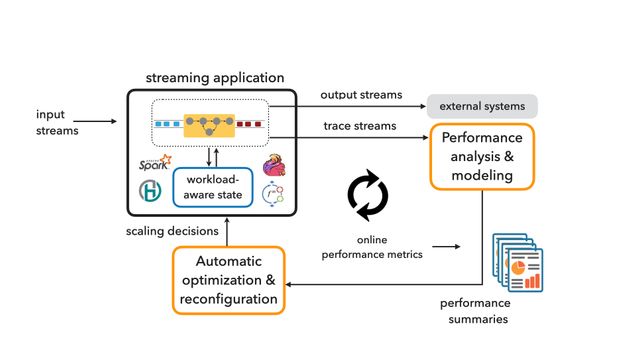

Our research objective is to make the power of streaming analytics accessible to non-expert users and empower them to easily extract knowledge from data streams without managing the software and hardware infrastructure. We envision a future in which a climate scientist can analyze data streams collected by remote drones to study the upper ocean carbon cycle, a physicist can monitor large spatio-temporal streams captured from satellites orbiting the earth in real time, an epidemiologist can effortlessly train and deploy continuously updated disease surveillance models, and a doctor can use an adaptive stream processing pipeline to monitor their patients’ health signals and intervene in a timely manner. To this end, our recent work has focused on developing methods for performance analysis and modeling of streaming dataflow computations (SnailTrail at NSDI’18), addressing the challenge of automatic reconfiguration under workload variance (DS2 at OSDI’18, Megaphone at VLDB’19, The non-expert tax at BiDEDE’22), and exploiting workload characterization to improve the efficiency of stateful dataflow computations (Workload-aware state management at HotSorage’20, Gadget benchmark harness at EuroSys’22).

Scaling Graph ML on Modern Storage

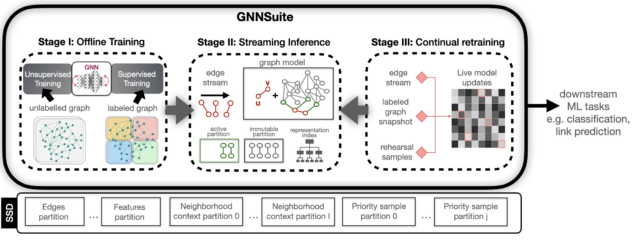

Graph Neural Networks (GNNs) are a powerful model for performing machine learning tasks on graph-structured data. GNNs have demonstrated excellent performance on a variety of prediction and classification scenarios, such as recommendation, fraud detection, search, molecular biology, and code summarization. In these application domains, graphs are often massive, consisting of billions of edges and rich vertex attributes, and may not fit in the main memory of a single machine. Further, real-world graphs are dynamic, thus, models need to be continually updated as the underlying network changes. These characteristics pose a grand challenge to the design of GNN frameworks and require fundamental research on innovative systems for scalable and cost-efficient graph machine learning. Existing GNN systems are resource-intensive, employing distributed computation and requiring large amounts of memory and access to multiple GPUs. As a result, large-scale GNN models are inaccessible to users with modest resources and researchers outside of industrial environments.

We have recently received an NSF award that will allow us to address the requirements of large-scale graph ML workloads by harnessing emerging storage technology. Specifically, we are working on designing and implementing innovative systems for static and dynamic GNNs that target a single commodity machine with SSD storage. Leveraging the large capacity, substantial bandwidth, low access latency, and on-device computational capabilities of modern SSDs, we aim to develop the necessary systems infrastructure to provide a viable and cost-effective alternative to distributed GNN architectures.