How Artificial Intelligence is Beginning to Sense and Perceive

Findings from visual and auditory neuroscience are increasingly being applied in modern technology. We see them at work when Facebook automatically identifies our faces in photos, or when Siri finally figures out that we want today’s weather and are not asking it to call Mom. Artificial intelligence is now crossing over into the biological sciences, but it is up to scientists and engineers to work out how to translate what we know about the way the brain processes natural sounds and images into something a computer can use and learn from.

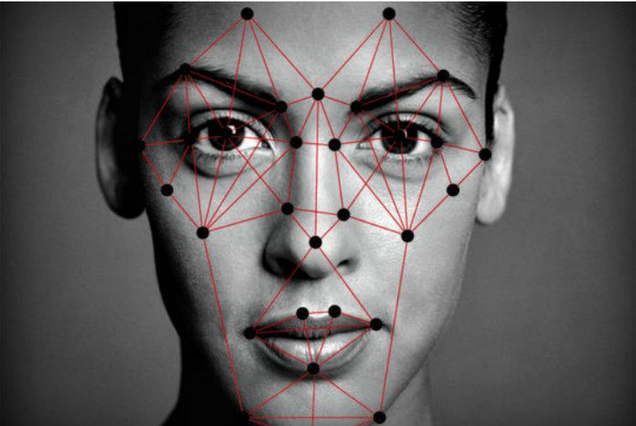

A major focus of visual neuroscience today is face processing, or face recognition. The part of the brain most closely associated with facial recognition is the occipito-temporal region, specifically the right middle fusiform gyrus. Facial recognition is thought to involve several stages, ranging from perceiving basic stimuli to deriving details from those stimuli. Research has suggested that facial recognition relies on a highly interconnected brain mechanism, and that it can be retaught to people who have experienced a brain injury.

By applying this basic biological science to artificial intelligence, researchers have been able to advance the technology behind machine perception, both auditory and visual. MIT researchers recently created a “machine-learning system [that] spontaneously reproduces aspects of human neurology,” meaning that the computational model ended up processing faces in a way that resembles what happens in the brain. This behavior was not deliberately built into the system; it emerged during training. The system, developed by a group led by MIT’s Tomaso Poggio, was trained to recognize particular faces shown in different orientations. The researchers found that it had developed an additional, intermediate processing step that responded to how far a face was rotated, regardless of the direction in which the face was turned.

Another MIT lab, the Computer Science and Artificial Intelligence Laboratory (CSAIL), is working on a machine-learning system that can identify natural sounds and background noise, such as crowds cheering or waves crashing, and learn from those identifications. What sets this system apart from its predecessors is that it does not require hand-annotated data for training; instead, the researchers use videos to find correlations between visuals and sounds. Carl Vondrick, a graduate student at the lab, describes this as “the natural synchronization between vision and sound.”

Artificial intelligence and biology are growing closer together as technology progresses. It was only a few years ago that facial recognition software became widely available to the public, so with further advances, who knows what we might expect from artificial intelligence.

~Cindy Wu

Sources:

http://neurosciencenews.com/machine-learning-facial-recognition-5654/

http://neurosciencenews.com/video-sound-machine-learning-5665/

https://www.sciencedaily.com/releases/1999/06/990624080203.htm