News

Chang and Qianwan present posters at Sculpted Light in the Brain

Voltage imaging is an evolving tool for continuously imaging the activity of large numbers of neurons. Recently, a high-speed, low-light two-photon voltage imaging framework was developed that enables kilohertz scanning of neuronal populations in awake, behaving animals. However, at a high frame rate and with a large field-of-view (FOV), shot noise dominates pixel-wise measurements, and neuronal signals are difficult to identify in single-frame raw measurements. Another issue is that although deep-learning-based methods have shown promising results in image denoising, traditional supervised learning is not applicable to this problem because ground-truth “clean” (high-SNR) measurements are lacking. To address these issues, we developed a self-supervised deep learning framework for voltage imaging denoising (DeepVID) that does not need any ground-truth data. Inspired by previous self-supervised algorithms, DeepVID infers the underlying fluorescence signal based on the independent temporal and spatial statistics of the measurement that is attributed to shot noise. DeepVID reduced the frame-to-frame variability of the images and achieved a 15-fold improvement in SNR when comparing denoised and raw image data.
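To put the 15-fold figure in context, the sketch below uses the standard Poisson shot-noise model, in which a pixel that collects N photons on average has SNR = sqrt(N). Under that assumption, it estimates how many times more photons a raw measurement would need to reach the same SNR gain. The function names and the example photon count are illustrative and are not taken from the DeepVID work.

```python
import math


def shot_noise_snr(mean_photons: float) -> float:
    """SNR of a Poisson-distributed photon count: mean / std = sqrt(mean)."""
    if mean_photons < 0:
        raise ValueError("mean_photons must be non-negative")
    return math.sqrt(mean_photons)


def equivalent_photon_gain(snr_gain: float) -> float:
    """Factor by which the photon count must grow to raise a
    shot-noise-limited SNR by the factor snr_gain."""
    return snr_gain ** 2


if __name__ == "__main__":
    raw_photons = 4.0  # hypothetical photons per pixel per frame
    print(shot_noise_snr(raw_photons))   # 2.0
    print(equivalent_photon_gain(15.0))  # 225.0
```

Under this simplified model, a 15-fold SNR gain from denoising is comparable to collecting 225 times more photons per pixel, which would otherwise require brighter excitation or slower frame rates.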

Conventional microscopes are inherently constrained by their space-bandwidth product, which means one must accept either a low spatial resolution or a narrow field-of-view. The Computational Miniature Mesoscope (CM2) is a novel fluorescence imaging device that overcomes this bottleneck by jointly designing the optics and algorithm. The CM2 platform achieves single-shot, large-scale volumetric imaging with single-cell resolution on a compact platform. Here, we demonstrate CM2 V2 – an advanced CM2 system that integrates novel hardware improvements and a new deep learning reconstruction framework. On the hardware side, the platform features a 3D-printed freeform illuminator that achieves ~80% excitation efficiency – a ~3X improvement over our V1 design, and a hybrid emission filter design that improves the measurement contrast by >5X. On the computational side, the newly proposed computational pipeline, termed CM2Net, is trained on realistic simulated field-varying data to perform fast and reliable 3D reconstruction. Compared to the model-based deconvolution in our V1 system, CM2Net achieves ~8X better axial localization and ~1400X faster reconstruction speed. The trained CM2Net is validated by imaging phantom objects with embedded fluorescent particles. We experimentally demonstrate that CM2Net offers 6 µm lateral and 24 µm axial resolution in a 7 mm FOV and 800 µm depth range. We anticipate that this simple and low-cost computational miniature imaging system may be applied to a wide range of large-scale 3D fluorescence imaging and wearable in-vivo neural recordings on mice and other small animals.
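As a rough illustration of the space-bandwidth argument, the sketch below counts how many resolvable spots fit in the quoted imaging volume. It assumes a square 7 mm × 7 mm lateral region and treats one resolvable spot as one resolution length per axis; both are simplifications introduced here, and the function names are hypothetical.

```python
def resolvable_spots(extent_um: float, resolution_um: float) -> float:
    """Number of resolution lengths that fit along one axis."""
    if resolution_um <= 0:
        raise ValueError("resolution_um must be positive")
    return extent_um / resolution_um


def volumetric_spot_count(fov_um: float, depth_um: float,
                          lateral_res_um: float, axial_res_um: float) -> float:
    """Resolvable spots in a square FOV (fov_um on a side) times the
    number of resolvable axial planes over depth_um."""
    lateral = resolvable_spots(fov_um, lateral_res_um)
    axial = resolvable_spots(depth_um, axial_res_um)
    return lateral * lateral * axial


if __name__ == "__main__":
    # Figures quoted above: 7 mm FOV, 800 um depth, 6 um lateral, 24 um axial.
    spots = volumetric_spot_count(7000.0, 800.0, 6.0, 24.0)
    print(f"{spots / 1e6:.1f} million resolvable spots")
```

With these assumptions the volume holds tens of millions of resolvable spots, which is the quantity a single-shot measurement plus reconstruction has to account for.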

Dynamic Synthesis Network for Adaptive Descattering on Cover of Light

Congratulations to Waleed & Hao on making the cover of Light: Science & Applications for their work:

Adaptive 3D descattering with a dynamic synthesis network

W Tahir, H Wang, L Tian, Light: Science & Applications 11 (1), 1-21

CM2 project receives NIH R01 from NINDS!

ABSTRACT

Perception and cognition arise from the coordinated activity of large networks of neurons spanning diverse brain areas. Understanding their emergent behavior requires large-scale activity measurements both within and across regions, ideally at single-cell resolution. An integrative understanding of brain dynamics requires cellular-scale data across sensory, motor, and executive areas spanning more than a centimeter. In addition, functional interactions between brain areas vary with motivational state and behavioral goals, making data from freely moving animals particularly critical. Thus, a key goal is the ability to measure activity across the full extent of cortex at cellular resolution as animals engage in complex, cognitively demanding behaviors. However, conventional fluorescence microscopy techniques cannot meet the joint requirements of FOV, resolution, and miniaturization. Here, we propose a Computational Miniature Mesoscope (CM2) that will enable cortex-wide, cellular-resolution Ca2+ imaging in freely behaving mice. The premise is that computational imaging leverages advanced algorithms to overcome limitations of conventional optics and significantly expand imaging capabilities. In our proof-of-principle system, we demonstrated single-shot 3D imaging across an 8x7 mm2 FOV with 7 µm resolution in scattering phantoms (Sci. Adv. 2020), and achieved single-cell resolution on histological sections. Our wearable prototype has now demonstrated visualization of sensory-driven neural activity across the 4x4 mm2 main olfactory bulb in both head-fixed and freely moving mice. In this project we will: (Aim 1) Advance CM2 hardware to achieve cortex-wide, cellular-resolution imaging. We will validate the hardware improvement in both phantom and in vivo experiments. (Aim 2) Develop scattering-informed deep learning for fast and robust recovery of neural signals. We will validate the algorithm in in vivo experiments and benchmark against tabletop 1P and 2P measurements. (Aim 3) Perform cortex-wide, cellular-resolution Ca2+ imaging during social recognition in freely behaving mice. We will use CM2 to investigate the cross-area, network-scale activity dynamics that guide social interactions between familiar partners – one of the most integrative, multi-sensory, and cognitively demanding forms of neural processing.

IMPACT ON PUBLIC HEALTH: This work will establish powerful enabling technology that greatly expands the scale of activity measurements possible in behaving animals, providing access to a wide range of questions about distributed cortical function. As a focused application, we will test the neural signatures of individual recognition during social behavior. We anticipate that our approach can be extended to a broader range of biological questions, such as navigation and short- and long-term memory storage, and can potentially lead to new strategies for characterizing the disruptions in neural function that occur in psychiatric disease and neurodegenerative disorders.

Yujia defended PhD Dissertation!

Title: Computational Miniature Mesoscope for Large-Scale 3D Fluorescence Imaging

Presenter: Yujia Xue

Date: Thursday, March 31, 2022

Time: 11:00am to 1:00pm

Location: 8 Saint Mary's Street, Room 339

Advisor: Professor Lei Tian, ECE

Chair: Professor Abdoulaye Ndao, ECE

Committee: Professor David A. Boas, BME/ECE; Professor Vivek K. Goyal, ECE; Professor Jerome C. Mertz, BME/ECE.

Abstract:

Fluorescence imaging is indispensable to biology and neuroscience. The need for large-scale imaging in freely behaving animals has further driven the development of miniature microscopes (miniscopes). However, conventional microscopes and miniscopes are inherently constrained by their limited space-bandwidth-product, shallow depth-of-field, and inability to resolve 3D distributed emitters. In this dissertation, I present a Computational Miniature Mesoscope (CM2), supported by two computational frameworks, that overcomes these bottlenecks and enables single-shot 3D imaging across a wide imaging field-of-view (7~8 mm) and an extended depth-of-field (0.8~2 mm) with high lateral (7 μm) and axial (25 μm) resolution. The CM2 is a novel fluorescence imaging device that achieves large-scale illumination and single-shot 3D imaging on a compact platform. Its expanded imaging capability is enabled by computational imaging that jointly designs optics and algorithms. I present two versions of the CM2 platform and two 3D reconstruction algorithms in the dissertation. In addition, pilot in vivo imaging experiments using a wearable CM2 prototype are conducted to demonstrate the CM2's potential applications in neural imaging.

First, I present the CM2 V1 platform and a model-based 3D reconstruction algorithm that performs volumetric reconstructions from single-shot measurements. The mesoscale 3D imaging capability is validated on various fluorescent samples and analyzed under bulk scattering and background fluorescence in phantom experiments. Next, I present and demonstrate an upgraded CM2 V2 platform augmented with a deep learning-based 3D reconstruction framework, termed CM2Net, to enable fast 3D reconstruction with higher axial resolution. The CM2 V2 design features an array of freeform illuminators and hybrid emission filters to achieve high excitation efficiency and better suppression of background fluorescence. The CM2Net combines ideas from view demixing, lightfield refocusing, and view synthesis to achieve reliable 3D reconstruction with high axial resolution. I show that the CM2Net, trained purely on simulated data, can generalize to experimental measurements. The key element of CM2Net's generalizability is a 3D Linear Shift Variant model of CM2 that simulates realistic training data with field-varying aberrations. The CM2Net achieves 10 times better axial resolution and 1400 times faster reconstruction speed compared to the model-based reconstruction.

I envision that the low-cost and compact CM2, built from off-the-shelf and 3D-printed components, can be adopted in a variety of biomedical and neuroscience research. The CM2 and the developed computational tools may bring impact to a wide range of large-scale 3D fluorescence imaging applications.

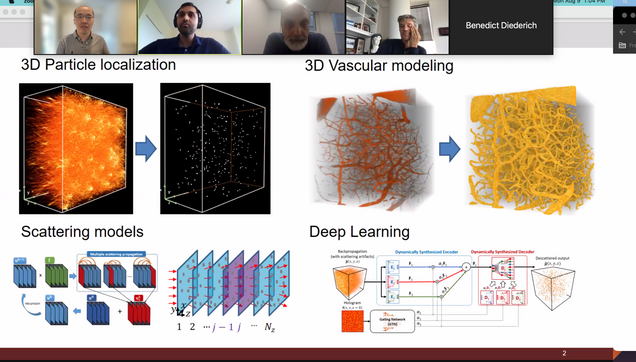

Waleed defended PhD Dissertation!

Congratulations, Waleed!

Title: Deep learning for large-scale holographic 3D particle localization and two-photon angiography segmentation

Presenter: Waleed Tahir

Date: August 9th, 2021

Time: 1:00 PM – 3:00 PM

Advisor: Professor Lei Tian (ECE, BME)

Chair: Professor Hamid Nawab (ECE, BME)

Committee: Professor Vivek Goyal (ECE), Professor David Boas (BME, ECE), Professor Jerome Mertz (BME, ECE, Physics)

Abstract:

Digital inline holography (DIH) is a popular imaging technique in which an unknown 3D object can be estimated from a single 2D intensity measurement, also known as the hologram. One well-known application of DIH is 3D particle localization, which has found numerous use cases in the areas of biological sample characterization and optical measurement. Traditional techniques for DIH rely on linear models of light scattering that account only for single scattering of light and completely ignore the multiple scattering among scatterers. This assumption of linear models becomes inaccurate under high particle densities and large refractive index contrasts. Incorporating multiple scattering into the estimation process has been shown to improve reconstruction accuracy in numerous imaging modalities; however, existing multiple scattering solvers become computationally prohibitive for large-scale problems comprising millions of voxels within the scattering volume.

This thesis addresses this limitation by introducing computationally efficient frameworks that are able to effectively account for multiple scattering in the reconstruction process for large-scale 3D data. We demonstrate the effectiveness of the proposed schemes on a DIH setup for 3D particle localization, and show that incorporating multiple scattering significantly improves the localization performance compared to traditional single scattering based approaches. First, we discuss a scheme in which multiple scattering is computed using the iterative Born approximation by dividing the 3D volume into discrete 2D slices, and computing the scattering among them. This method makes it feasible to compute multiple scattering for large volumes, and significantly improves 3D particle localization compared to traditional methods.

One limitation of the aforementioned method, however, was that the multiple scattering computations were unable to converge when the sample under consideration was strongly scattering. This limitation stemmed from the method's dependence on the iterative Born approximation, which assumes the samples to be weakly scattering. This challenge is addressed in our following work, where we incorporate an alternative multiple scattering model that is able to effectively account for strongly scattering samples without irregular convergence properties. We demonstrate the improvement of the proposed method over linear scattering models for 3D particle localization, and statistically show that it is able to accurately model the hologram formation process.
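The convergence issue described above can be illustrated with a deliberately simplified toy. In the sketch below, the scattering operator is replaced by a single scalar `coupling` (an assumption made purely for illustration; in a real solver this would be a Green's-function operator applied to the scattering potential over the whole volume). The fixed-point update `u = u_in + coupling * u` is the scalar analogue of the iterative Born series, which converges only when the coupling magnitude is below 1.

```python
def born_series(u_in: float, coupling: float, n_iter: int) -> list:
    """Iterate u_{k+1} = u_in + coupling * u_k, starting from u_0 = u_in.

    Scalar stand-in for the iterative Born approximation; returns the
    field estimate after every iteration.
    """
    u = u_in
    history = [u]
    for _ in range(n_iter):
        u = u_in + coupling * u
        history.append(u)
    return history


def exact_field(u_in: float, coupling: float) -> float:
    """Closed-form solution of u = u_in + coupling * u."""
    return u_in / (1.0 - coupling)


if __name__ == "__main__":
    weak = born_series(1.0, 0.3, 60)    # weakly scattering: converges
    strong = born_series(1.0, 1.5, 60)  # strongly scattering: diverges
    print(weak[-1], exact_field(1.0, 0.3))
    print(strong[-1], exact_field(1.0, 1.5))
```

In the weak case the iterates settle on the closed-form solution, while in the strong case they grow without bound even though a finite solution exists, which is the kind of behavior that motivates switching to a different multiple-scattering model.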

Following this work, we address an outstanding challenge faced by many imaging applications, related to descattering, or removal of scattering artifacts. While deep neural networks (DNNs) have become the state-of-the-art for descattering in many imaging modalities, generally multiple DNNs have to be trained for this purpose if the range of scattering artifact levels is very broad. This is because, for optimal descattering performance, it has been shown that each network has to be specialized for a narrow range of scattering artifact levels. We address this challenge by presenting a novel DNN framework that is able to dynamically adapt its network parameters to the level of scattering artifacts at the input, and demonstrate optimal descattering performance without the need to train multiple DNNs. We demonstrate our technique on a DIH setup for 3D particle localization, and show that even when trained on purely simulated data, the network is able to demonstrate improved localization on both simulated and experimental data, compared to existing methods.
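One generic way to make network parameters depend on the input is to blend a small set of "expert" kernels with input-dependent weights. The sketch below shows only that blending step, with hand-set gate scores standing in for the output of a gating network. It is a hypothetical illustration of the idea of parameter adaptation, not the architecture used in the thesis, and all names in it are made up for this example.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def synthesize_kernel(expert_kernels, gate_scores):
    """Blend equally sized expert kernels using softmax(gate_scores) as weights."""
    if len(expert_kernels) != len(gate_scores):
        raise ValueError("need one gate score per expert kernel")
    weights = softmax(gate_scores)
    length = len(expert_kernels[0])
    return [
        sum(w * kernel[i] for w, kernel in zip(weights, expert_kernels))
        for i in range(length)
    ]


if __name__ == "__main__":
    # Two hypothetical experts, e.g. tuned for light and heavy scattering.
    experts = [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
    print(synthesize_kernel(experts, [0.0, 0.0]))   # equal blend
    print(synthesize_kernel(experts, [50.0, 0.0]))  # dominated by the first expert
```

Because the blend weights change with the gate scores, a single set of trained experts can cover a broad range of conditions instead of requiring one separately trained network per narrow range.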

Finally, we consider the problem of 3D segmentation and localization of blood vessels from large-scale two-photon microscopy (2PM) angiograms of the mouse brain. 2PM is a widely adopted imaging modality for 3D neuroimaging. The localization of brain vasculature from 2PM angiograms, and its subsequent mathematical modeling, has broad implications in the fields of disease diagnosis and drug development. Vascular segmentation is generally the first step in the localization process, in which blood vessels are separated from the background. Due to the rapid decay in the 2PM signal quality with increasing imaging depth, the segmentation and localization of blood vessels from 2PM angiograms remains problematic, especially for deep vasculature. In this work, we introduce a high-throughput DNN with a semi-supervised loss function, which not only is able to localize much deeper vasculature compared to existing methods, but also does so with greater accuracy and speed.

Alex defended PhD Dissertation!

Alex Matlock successfully defended his PhD Dissertation. First PhD from Tian Lab! Congratulations!!!

Title: Model and Learning-Based Strategies for Intensity Diffraction Tomography

Presenter: Alex Matlock

Date: June 18, 2021

Time: 1:00PM - 3:00PM

Advisor: Professor Lei Tian (ECE, BME)

Chair: Professor Abdoulaye Ndao (ECE)

Committee: Professor Selim Ünlü (ECE, MSE, BME), Professor Jerome Mertz (BME, ECE, Physics), Professor Ji-Xin Cheng (ECE, BME, MSE)

Abstract:

Intensity Diffraction Tomography (IDT) is a recently developed quantitative phase imaging tool with significant potential for biological imaging applications. This modality captures intensity images from a scattering sample under diverse illumination and reconstructs the object's volumetric permittivity contrast using linear inverse scattering models. IDT requires no through-focus sample scans or exogenous contrast agents for 3D object recovery and can be easily implemented with a standard microscope equipped with an off-the-shelf LED array. These factors make IDT ideal for biological research applications where easily implementable setups providing native sample morphological information are highly desirable. Given this modality's recent development, IDT suffers from a number of limitations preventing its widespread adoption: 1) large measurement datasets with long acquisition times limiting its temporal resolution, 2) model-based constraints preventing the evaluation of multiple-scattering samples, and 3) low axial resolution preventing the recovery of fine axial structures such as organelles and other subcellular structures. These factors limit IDT to primarily thin, static objects, and its unknown accuracy and sensitivity metrics cast doubt on the technology's quantitative recovery of morphological features.

This thesis addresses the limitations of IDT through advancements provided by model and learning-based strategies. The model-based advancements guide new computational illumination strategies for high volume-rate imaging as well as investigate new imaging geometries, while the learning-based enhancements to IDT present an efficient method for recovering multiple-scattering biological specimens. These advancements place IDT in the optimal position of being an easily implementable, computationally efficient phase imaging modality recovering high-resolution volumes of complex, living biological samples in their native state.

We first discuss two illumination strategies for high-speed IDT. The first strategy develops a multiplexed illumination framework based on IDT's linear model, enabling hardware-limited 4 Hz volume-rate imaging of living biological samples. This implementation is hardware-agnostic, allowing fast IDT to be added to any existing setup containing programmable illumination hardware. While sacrificing some reconstruction quality, this multiplexed approach recovers high-resolution features in live cell cultures, worms, and embryos, highlighting IDT's potential across a wide range of biological imaging applications.

Following this illumination scheme, we discuss a hardware-based solution for live sample imaging using ring-geometry LED arrays. Inspired by the linear model, this hardware modification optimally captures the object's information in each LED illumination, allowing high-quality object volumes to be reconstructed from as few as eight intensity images. This small image requirement allows IDT to achieve camera-limited 10 Hz volume-rate imaging of live biological samples without motion artifacts. We show the capabilities of this annular illumination IDT setup on live worm samples. This low-cost solution for IDT's speed has significant implications for enabling any biological imaging lab to easily study the form and function of biological samples of interest in their native state.

Next, we present a learning-based approach to expand IDT to recovering multiple-scattering samples. IDT's linear model provides efficient computation of an object's 3D volume but fails to recover quantitative information in the presence of highly scattering samples. We introduce a lightweight neural network architecture, trained only on simulated natural image-based objects, that corrects the linear model estimates and improves the recovery of both weakly and strongly scattering samples. This implementation maintains the computational efficiency of IDT while expanding its reconstruction capabilities allowing for more generic imaging of biological samples.

Finally, we discuss an investigation of the IDT modality for reflection-mode imaging. IDT traditionally captures only low axial resolution information because it cannot capture the backscattered fields from the object, which contain rich information regarding the fine details of the object's axial structures. Here, we investigated whether a reflection-mode IDT implementation was possible for recovering high axial resolution structures from this backscattered light. We develop the model and imaging setup, and rigorously evaluate the reflection case in simulation and experiment to show the possibility of reflection IDT. While this imaging geometry ultimately requires a nonlinear model for 3D imaging, we show that the technique provides enhanced sensitivity to the object's structures in a complementary fashion to transmission-based IDT.

Yujia is awarded 2021 SPIE Optics and Photonics Education Scholarship

Congratulations to Yujia for being awarded the 2021 SPIE Optics and Photonics Education Scholarship.

Lei receives the 2021 Early Career Excellence in Research Award in College of Engineering

Prof. Tian is a recipient of the 2021 Early Career Excellence in Research Award from the College of Engineering. https://www.bu.edu/eng/2021/05/28/ece-junior-faculty-recognized-by-boston-university-as-outstanding-researchers/

Hao is selected as a Hariri Graduate Student Fellow, congratulations!

Congratulations to Hao for being selected as a Hariri Graduate Student Fellow.

Shiyi Cheng won NAC Image Technology Best Presentation Award in SPIE Photonics West BIOS

Congratulations to Shiyi Cheng for winning the NAC Image Technology Best Presentation Award in SPIE Photonics West BIOS “High-Speed Biomedical Imaging and Spectroscopy” Conference for his work on