Brain Swarm Interface (BSI).

This work presents a novel marriage of Swarm Robotics and Brain Computer Interface technology to produce an interface which connects a user to a swarm of robots. The proposed interface enables the user to control the swarm’s size and motion employing just thoughts and eye movements. The thoughts and eye movements are recorded as electrical signals from the scalp by an off-the-shelf Electroencephalogram (EEG) headset. Signal processing techniques are used to filter out noise and decode the user’s eye movements from raw signals, while a Hidden Markov Model (HMM) technique is employed to decipher the user’s thoughts from filtered signals. The dynamics of the robots are controlled using a swarm controller based on potential fields. The shape and motion parameters of the potential fields are modulated by the human user through the brain-swarm interface to move the robots. The method is demonstrated experimentally with a human controlling a swarm of three m3pi robots in a laboratory environment, as well as controlling a swarm of 128 robots in a computer simulation.

Brain Computer Interfaces hold great promise for enabling people with various forms of disability, from restricted motion due to injury or old age to severe conditions such as ALS, locked-in syndrome, tetraplegia and paralysis. Several works have investigated the use of BCIs for controlling prosthetics and for medical rehabilitation. Whereas the motivation for a BCI-operated prosthetic or wheelchair is evident, the applications for a brain-swarm interface may be less obvious. We envision several applications for this technology. Firstly, people who are mobility impaired may use a swarm of robots to manipulate their environment through a brain-swarm interface. Indeed, a swarm of robots may offer a greater range of possibilities for manipulation than a single mobile robot or manipulator affords. For example, a swarm can reconfigure to suit different sizes or shapes of objects, or split up to deal with multiple manipulation tasks at once. Another motivation for our work is that a brain interface may unlock a new, more flexible way for people to interact with swarms.

Currently, human-swarm interfaces are largely restricted to gaming joysticks with limited degrees of freedom. However, swarms typically have many degrees of freedom, and the brain has enormous potential to influence those degrees of freedom beyond the confines of a traditional joystick. We envision that the brain can eventually craft shapes and sizes for a swarm, split a swarm into sub-swarms, aggregate or disperse the swarm, and perhaps much more. In this work, we take a small step toward this vision.

The Training Phase

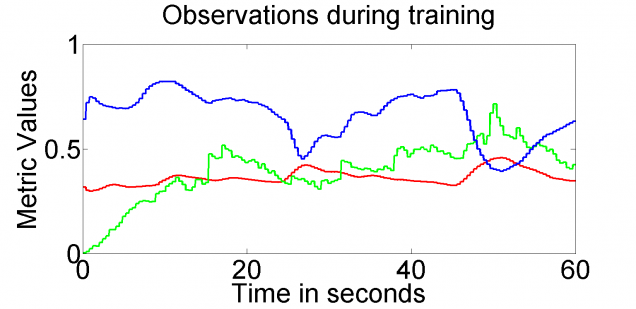

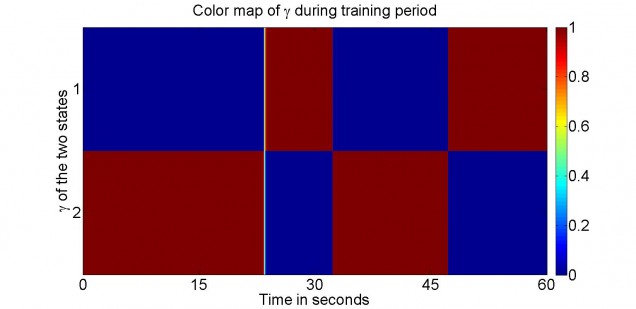

We train the HMM using the Baum-Welch algorithm, which estimates the model parameters from a set of observations. The observations are three of Emotiv’s performance metrics (Engagement, Meditation and Excitement). In this phase the user alternates between two thoughts that are quite different from each other (for example, relaxing on a beach versus doing a mental calculation) over a period of 60 seconds, revisiting each thought at least once. The observations and the estimated thought state over the 60 seconds are shown in Fig. 1.

Fig. 1 Observation and state estimation during the training phase

The algorithm proved decisive and accurate: at all times one state is red (meaning the probability that the user is in that thought state is nearly one), while the other is blue (meaning the probability that the user is in that thought state is almost zero).
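The training step described above can be illustrated with a minimal Baum-Welch sketch. It is not the code used in this work. It assumes the three Emotiv metrics have already been quantized into a small set of discrete symbols (the quantization, the symbol sequence, the random initialisation and all function names are hypothetical), and it uses only the Python standard library.

```python
import math
import random

def forward_backward(obs, pi, A, B):
    """Scaled forward-backward pass; returns gamma, xi and the log-likelihood."""
    T, N = len(obs), len(pi)
    alpha, scale = [], []
    for t in range(T):
        if t == 0:
            row = [pi[i] * B[i][obs[0]] for i in range(N)]
        else:
            row = [sum(alpha[t - 1][i] * A[i][j] for i in range(N)) * B[j][obs[t]]
                   for j in range(N)]
        c = sum(row)                      # per-step normaliser
        scale.append(c)
        alpha.append([a / c for a in row])
    beta = [[1.0] * N for _ in range(T)]
    for t in range(T - 2, -1, -1):
        for i in range(N):
            beta[t][i] = sum(A[i][j] * B[j][obs[t + 1]] * beta[t + 1][j]
                             for j in range(N)) / scale[t + 1]
    # gamma[t][i]: posterior probability of state i at time t
    gamma = [[alpha[t][i] * beta[t][i] for i in range(N)] for t in range(T)]
    # xi[t][i][j]: posterior probability of the transition i -> j at time t
    xi = [[[alpha[t][i] * A[i][j] * B[j][obs[t + 1]] * beta[t + 1][j] / scale[t + 1]
            for j in range(N)] for i in range(N)] for t in range(T - 1)]
    return gamma, xi, sum(math.log(c) for c in scale)

def baum_welch(obs, n_states, n_symbols, n_iter=25, seed=0):
    """Estimate (pi, A, B) of a discrete-emission HMM by expectation-maximisation."""
    rng = random.Random(seed)

    def random_rows(rows, cols):
        out = []
        for _ in range(rows):
            w = [rng.random() + 0.1 for _ in range(cols)]
            out.append([x / sum(w) for x in w])
        return out

    pi = [1.0 / n_states] * n_states
    A = random_rows(n_states, n_states)   # transition probabilities
    B = random_rows(n_states, n_symbols)  # emission probabilities
    log_liks = []
    for _ in range(n_iter):
        gamma, xi, ll = forward_backward(obs, pi, A, B)   # E-step
        log_liks.append(ll)
        pi = gamma[0][:]                                  # M-step
        for i in range(n_states):
            denom = sum(g[i] for g in gamma[:-1])
            A[i] = [sum(x[i][j] for x in xi) / denom for j in range(n_states)]
            total = sum(g[i] for g in gamma)
            B[i] = [sum(g[i] for g, o in zip(gamma, obs) if o == k) / total
                    for k in range(n_symbols)]
    return pi, A, B, log_liks

# Hypothetical 60-sample recording: the user alternates between two thoughts.
relaxed = [0, 0, 1, 0, 0] * 3
focused = [2, 2, 1, 2, 2] * 3
pi, A, B, log_liks = baum_welch(relaxed + focused + relaxed + focused, 2, 3)
```

The `gamma` values returned by `forward_backward` are the per-state posterior probabilities, which is the kind of quantity plotted as the state estimate in Fig. 1. A continuous-emission model (for example, Gaussian emissions over the raw metrics) would follow the same structure with a different M-step for `B`.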

The Simulation and Experiment Phase

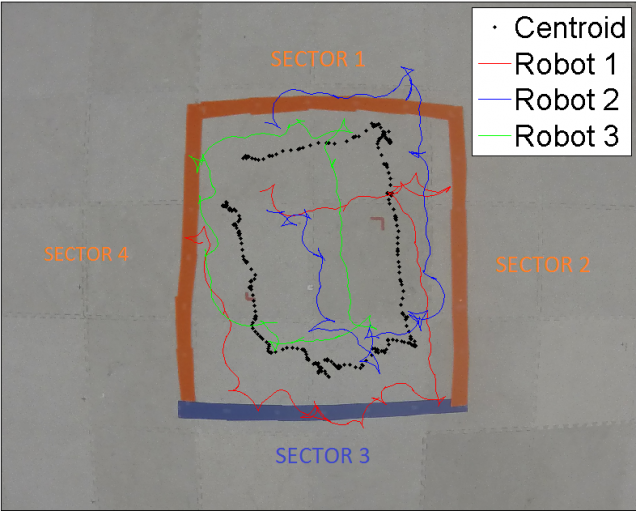

After the training phase, we used the learnt model parameters in the Forward Algorithm to estimate the user’s current state (thought). The thoughts were used to modulate the size of the swarm (aggregate and disperse), and eye movements were used to move the swarm. The four eye movements (up, down, left and right) corresponded to the respective movements of the swarm. For both the simulation and the experiment we chose a simple rectangular path to navigate as a proof of concept. We labelled the edges of the rectangle as sections 1, 2, 3 and 4 and navigated them in a clockwise manner. In sections 1, 2 and 4 the user thinks the thought that makes the robots disperse, and in section 3 (purple path in the simulation and experiment) the user thinks the other thought, which makes the robots aggregate.
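The online state estimation described above can be sketched as a normalised forward-algorithm update. This is an illustration under stated assumptions, not the implementation used here: the emissions are modelled as independent Gaussians over the three metrics, and the transition matrix, means, variances and the thought-to-command mapping are all made-up placeholder values.

```python
import math

# Hypothetical parameters standing in for a trained model (not from this work).
STATES = ("disperse", "aggregate")           # assumed thought-to-command mapping
TRANS = [[0.95, 0.05], [0.05, 0.95]]         # state transition probabilities
MEANS = [[0.3, 0.8, 0.2], [0.8, 0.3, 0.6]]   # engagement, meditation, excitement
VARIANCES = [[0.02] * 3, [0.02] * 3]

def gaussian_pdf(x, mean, var):
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)

def emission_likelihood(obs, means, variances):
    """Likelihood of one 3-metric observation under a diagonal Gaussian."""
    p = 1.0
    for x, m, v in zip(obs, means, variances):
        p *= gaussian_pdf(x, m, v)
    return p

def forward_step(belief, obs, trans, means, variances):
    """One forward-algorithm update: returns P(state | observations so far)."""
    n = len(belief)
    new_belief = []
    for j in range(n):
        predicted = sum(belief[i] * trans[i][j] for i in range(n))
        new_belief.append(predicted * emission_likelihood(obs, means[j], variances[j]))
    total = sum(new_belief)
    if total == 0.0:          # observation implausible under every state
        return list(belief)   # keep the previous belief unchanged
    return [b / total for b in new_belief]

def swarm_command(belief):
    """Pick the command of the most probable thought state."""
    return STATES[max(range(len(belief)), key=lambda i: belief[i])]

# Usage: update the belief each time a new metric sample arrives.
belief = [0.5, 0.5]
for sample in ([0.75, 0.35, 0.55], [0.8, 0.3, 0.6]):
    belief = forward_step(belief, sample, TRANS, MEANS, VARIANCES)
command = swarm_command(belief)
```

Normalising the belief at every step keeps the numbers well scaled over long recordings and makes each entry directly readable as a state probability.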

We simulated the control of 128 robots in a virtual MATLAB environment, driven by real online EEG data, in which the swarm follows a rectangular path that is part of the BU campus. The results are shown in Fig. 2, where the red line indicates the path of the centroid of the swarm and the blue lines indicate the size of the swarm.

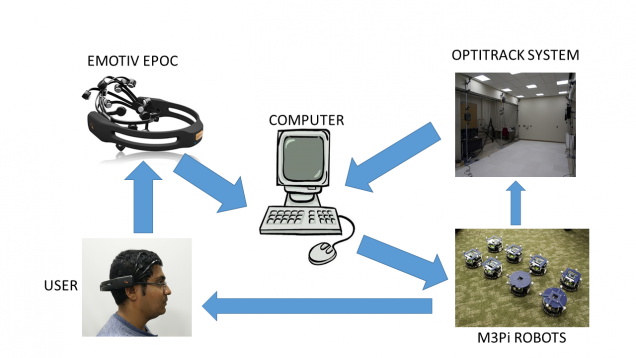

For the experiment we used three m3pi robots, with an OptiTrack system for localisation and feedback. We used a point-offset control method to drive the individual robots and a potential field approach to drive the swarm. Fig. 3 shows the control flow during the experiment. Fig. 4 shows the path travelled by the individual robots during the experiment.
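The two control layers mentioned above can be sketched as follows. The exact potential function and controller gains used in this work are not given here, so this example makes its own assumptions: a quadratic attraction to a goal point plus a pairwise logarithmic repulsion for the swarm, and the standard feedback-linearising form of point-offset control for a differential-drive robot. All gains, names and the command mappings are hypothetical.

```python
import math

# Hypothetical mappings from decoded commands to field parameters.
REPULSION_GAIN = {"aggregate": 0.04, "disperse": 1.0}
GOAL_STEP = {"up": (0.0, 0.2), "down": (0.0, -0.2),
             "left": (-0.2, 0.0), "right": (0.2, 0.0)}

def swarm_velocities(positions, goal, k_att=1.0, k_rep=0.5, eps=1e-6):
    """Negative gradient of a quadratic goal well plus pairwise log repulsion."""
    velocities = []
    for i, (xi, yi) in enumerate(positions):
        vx = -k_att * (xi - goal[0])
        vy = -k_att * (yi - goal[1])
        for j, (xj, yj) in enumerate(positions):
            if i == j:
                continue
            dx, dy = xi - xj, yi - yj
            d2 = dx * dx + dy * dy + eps   # eps avoids division by zero
            vx += k_rep * dx / d2
            vy += k_rep * dy / d2
        velocities.append((vx, vy))
    return velocities

def point_offset_control(theta, vx, vy, offset=0.05):
    """Map the desired velocity of a point `offset` ahead of the axle
    to forward speed v and turn rate omega of a differential-drive robot."""
    v = math.cos(theta) * vx + math.sin(theta) * vy
    omega = (-math.sin(theta) * vx + math.cos(theta) * vy) / offset
    return v, omega

def simulate(positions, goal, k_rep, steps=600, dt=0.05):
    """Euler-integrate the field for point robots; returns the final positions."""
    pts = [tuple(p) for p in positions]
    for _ in range(steps):
        vel = swarm_velocities(pts, goal, k_rep=k_rep)
        pts = [(x + dt * vx, y + dt * vy) for (x, y), (vx, vy) in zip(pts, vel)]
    return pts

def centroid_and_spread(pts):
    """Centroid of the swarm and mean distance of the robots from it."""
    cx = sum(x for x, _ in pts) / len(pts)
    cy = sum(y for _, y in pts) / len(pts)
    spread = sum(math.hypot(x - cx, y - cy) for x, y in pts) / len(pts)
    return (cx, cy), spread

# Usage: an eye movement shifts the goal, a thought sets the repulsion gain.
start = [(0.0, 0.0), (0.5, 0.0), (0.0, 0.5)]
goal = (0.0 + GOAL_STEP["right"][0], 0.0 + GOAL_STEP["right"][1])
final = simulate(start, goal, REPULSION_GAIN["disperse"])
```

Because the pairwise repulsion terms cancel in the sum over robots, the swarm centroid is driven only by the attraction term and settles on the goal, while the repulsion gain sets how far the robots spread around it. That separation is why a single gain can act as the aggregate/disperse knob in this sketch.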

A video of the simulation and experiment is shown at the bottom of the page.

Fig. 2 Path of the centroid of the swarm and its size during the course of the simulation.

Fig. 3 Control flow during the experiment.

Fig. 4 Path travelled by the individual robots and the swarm centroid during the experiment.