Performance Comparison of Crowdworkers and NLP Tools on Named-Entity Recognition and Sentiment Analysis of Political Tweets

Abstract

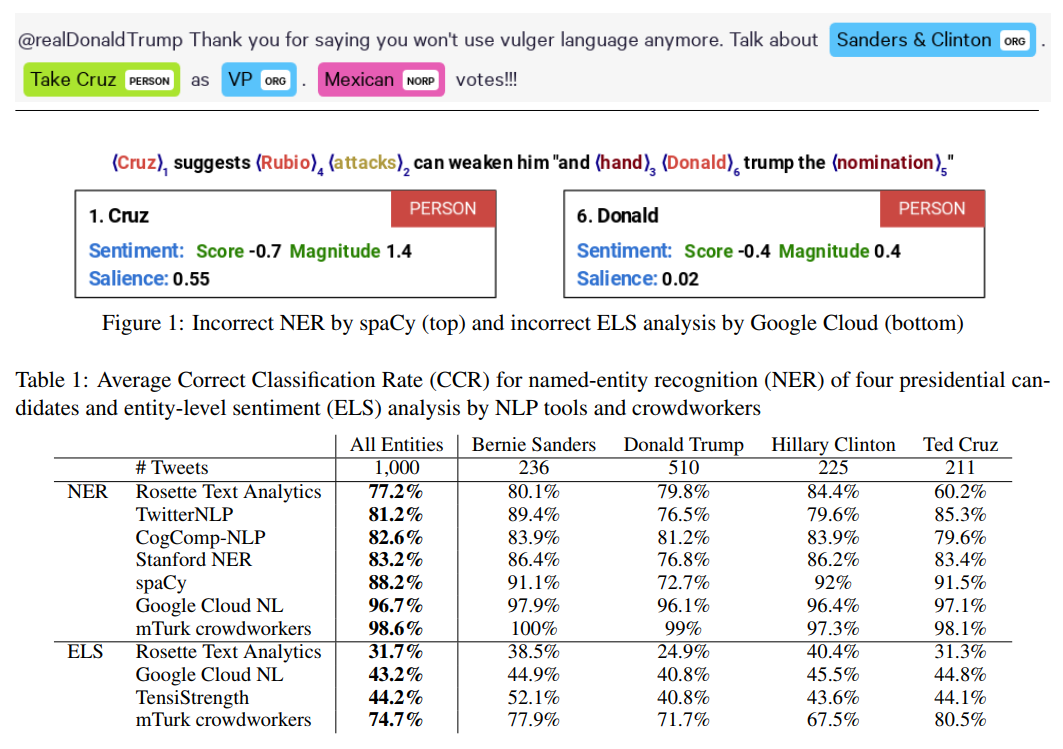

We report the results of a comparison of the accuracy of crowdworkers and seven Natural Language Processing (NLP) toolkits on two important NLP tasks: named-entity recognition (NER) and entity-level sentiment (ELS) analysis. Here we focus on a challenging dataset of 1,000 political tweets collected during the U.S. presidential primary election in February 2016. Each tweet refers to at least one of four presidential candidates, i.e., four named entities. The ground truth, established by experts in political communication, contains entity-level sentiment information for each candidate mentioned in a tweet. We tested several commercial and open-source tools. Our experiments show that, on our dataset of political tweets, the most accurate NER system, Google Cloud NL, performed almost on par with crowdworkers, whereas the most accurate ELS analysis system, TensiStrength, fell short of crowdworker accuracy by a large margin of more than 30 percentage points.
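To make the comparison concrete, the sketch below scores entity-level sentiment against an expert ground truth and reports the gap between two annotators in percentage points. It is a minimal illustration under stated assumptions, not the evaluation code used in the paper: the scoring rule (plain accuracy over every ground-truth (tweet, candidate) pair, with unlabeled pairs counted as wrong), the function names `els_accuracy` and `gap_in_points`, the labels, and the toy data are all hypothetical.

```python
from typing import Dict, Tuple

# Hypothetical label scheme: each (tweet_id, candidate) pair maps to a
# sentiment label such as "positive", "neutral", or "negative".
# The paper's actual label set and scoring rule may differ.
Labels = Dict[Tuple[int, str], str]


def els_accuracy(predicted: Labels, ground_truth: Labels) -> float:
    """Fraction of ground-truth (tweet, entity) pairs whose predicted
    sentiment matches the expert label; unlabeled pairs count as wrong."""
    if not ground_truth:
        raise ValueError("ground truth is empty")
    correct = sum(
        1 for key, gold in ground_truth.items() if predicted.get(key) == gold
    )
    return correct / len(ground_truth)


def gap_in_points(acc_a: float, acc_b: float) -> float:
    """Difference between two accuracies in percentage points
    (e.g. 0.75 vs. 0.25 is a 50-point gap, not a 200 percent gap)."""
    return (acc_a - acc_b) * 100


# Toy, made-up data with anonymized candidates (not from the paper).
gold = {
    (1, "candidate_A"): "negative",
    (1, "candidate_B"): "positive",
    (2, "candidate_C"): "neutral",
    (3, "candidate_D"): "negative",
}
crowd = {
    (1, "candidate_A"): "negative",
    (1, "candidate_B"): "positive",
    (2, "candidate_C"): "neutral",
    (3, "candidate_D"): "positive",
}
tool = {
    (1, "candidate_A"): "negative",
    (2, "candidate_C"): "positive",
}

crowd_acc = els_accuracy(crowd, gold)   # → 0.75
tool_acc = els_accuracy(tool, gold)     # → 0.25
print(gap_in_points(crowd_acc, tool_acc))  # prints 50.0
```

Scoring per (tweet, candidate) pair rather than per tweet reflects that a single tweet can express different sentiment toward different candidates, which is what makes the task entity-level.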


BibTeX entry for this paper:

@inproceedings{jalal2018performance,
  author = {Mona Jalal and Kate Mays and Guo and Margrit Betke},
  title = {Performance Comparison of Crowdworkers and {NLP} Tools on Named-Entity Recognition and Sentiment Analysis of Political {T}weets},
  booktitle = {Proceedings of the 17th Annual Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, WiNLP Workshop, New Orleans, Louisiana, USA. To be presented on June 1},
  year = 2018,
  timestamp = {Thu, 10 May 2018 16:40:53 +0200},
}